Marshalling Geometry Into Houdini

For documentation on marshalling geometry into Houdini, see Marshalling Geometry Into Houdini.

Below is a code snippet that marshals in the simplest of geometry, a triangle, then attaches string attributes to each point of the triangle, and finally dumps the resulting scene into a hip file so it can be opened and viewed in Houdini.

#include <HAPI/HAPI.h>

#include <cstdlib>

#include <cstring>

#include <iostream>

#include <string>

#define ENSURE_SUCCESS( result ) \
if ( (result) != HAPI_RESULT_SUCCESS ) \
{ \
    std::cout << "Failure at " << __FILE__ << ": " << __LINE__ << std::endl; \
    std::cout << getLastError() << std::endl; \
    exit( 1 ); \
}

#define ENSURE_COOK_SUCCESS( result ) \
if ( (result) != HAPI_RESULT_SUCCESS ) \
{ \
    std::cout << "Failure at " << __FILE__ << ": " << __LINE__ << std::endl; \
    std::cout << getLastCookError() << std::endl; \
    exit( 1 ); \
}

static std::string getLastError();

static std::string getLastCookError();

int

main( int argc, char **argv )

{

bool bUseInProcess = false;

if(bUseInProcess)

{

}

else

{

serverOptions.timeoutMs = 3000.0f;

}

ENSURE_SUCCESS( HAPI_Initialize( &session,
                                 &cookOptions,
                                 true,
                                 -1,
                                 nullptr,
                                 nullptr,
                                 nullptr,
                                 nullptr,
                                 nullptr ) );

ENSURE_SUCCESS(

HAPI_CookNode ( &session, newNode, &cookOptions ) );

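int cookStatus;
HAPI_Result cookResult;
// Poll the cook state until the node is ready or a call fails.
do
{
    cookResult = HAPI_GetStatus( &session, HAPI_STATUS_COOK_STATE, &cookStatus );
}
while ( cookStatus > HAPI_STATE_MAX_READY_STATE && cookResult == HAPI_RESULT_SUCCESS );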

ENSURE_SUCCESS( cookResult );

ENSURE_COOK_SUCCESS( cookStatus );

newNodePointInfo.count = 3;
newNodePointInfo.exists = true;

float positions[ 9 ] = { 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f, 0.0f };

int vertices[ 3 ] = { 0, 1, 2 };

int face_counts [ 1 ] = { 3 };

char ** strs = new char * [ 3 ];

strs[ 0 ] = _strdup( "strPoint1 " );

strs[ 1 ] = _strdup( "strPoint2 " );

strs[ 2 ] = _strdup( "strPoint3 " );

newNodePointInfo.count = 3;
newNodePointInfo.exists = true;

ENSURE_SUCCESS(

HAPI_AddAttribute( &session, newNode, 0,

"strData", &newNodePointInfo ) );

ENSURE_SUCCESS(

HAPI_SaveHIPFile( &session,

"examples/geometry_marshall.hip",

false ) );

return 0;

}

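static std::string
getLastError()
{
    // Query the required buffer length first, then fetch the message.
    int bufferLength;
    HAPI_GetStatusStringBufLength( nullptr,
                                   HAPI_STATUS_CALL_RESULT,
                                   HAPI_STATUSVERBOSITY_ERRORS,
                                   &bufferLength );
    char * buffer = new char[ bufferLength ];
    HAPI_GetStatusString( nullptr, HAPI_STATUS_CALL_RESULT, buffer, bufferLength );
    std::string result( buffer );
    delete [] buffer;
    return result;
}

static std::string
getLastCookError()
{
    // Same two-step query, but for the result of the last cook.
    int bufferLength;
    HAPI_GetStatusStringBufLength( nullptr,
                                   HAPI_STATUS_COOK_RESULT,
                                   HAPI_STATUSVERBOSITY_ERRORS,
                                   &bufferLength );
    char * buffer = new char[ bufferLength ];
    HAPI_GetStatusString( nullptr, HAPI_STATUS_COOK_RESULT, buffer, bufferLength );
    std::string result( buffer );
    delete [] buffer;
    return result;
}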
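The triangle sample builds its per-point string array with `new char *[ 3 ]` and `_strdup`, which is specific to Microsoft's C runtime and is never freed in the listing. The following is a minimal sketch of a portable alternative, assuming the consuming C function only needs a `const char **` view of the strings for the duration of the call. The helper names `makeCStringView` and `makeTrianglePointStrings` are hypothetical and are not part of HAPI.

```cpp
#include <cassert>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical helper (not part of HAPI): builds an array of C-string
// pointers that borrow from `values`. The pointers remain valid only
// while `values` is alive and unmodified.
std::vector<const char *> makeCStringView( const std::vector<std::string> & values )
{
    std::vector<const char *> view;
    view.reserve( values.size() );
    for ( const std::string & value : values )
        view.push_back( value.c_str() );
    return view;
}

// One string per point, using the same three values as the sample above.
std::vector<std::string> makeTrianglePointStrings()
{
    return { "strPoint1 ", "strPoint2 ", "strPoint3 " };
}
```

With this layout, `view.data()` yields a `const char **` and `view.size()` gives the element count; both vectors release their memory automatically when they go out of scope.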

Marshalling Point Clouds

For documentation on marshalling point clouds into Houdini, see Marshalling Point Clouds.

The following sample showcases marshalling a point cloud into Houdini Engine:

#include <HAPI/HAPI.h>

#include <cstdlib>

#include <iostream>

#include <string>

#define ENSURE_SUCCESS( result ) \
if ( (result) != HAPI_RESULT_SUCCESS ) \
{ \
    std::cout << "Failure at " << __FILE__ << ": " << __LINE__ << std::endl; \
    std::cout << getLastError() << std::endl; \
    exit( 1 ); \
}

#define ENSURE_COOK_SUCCESS( result ) \
if ( (result) != HAPI_RESULT_SUCCESS ) \
{ \
    std::cout << "Failure at " << __FILE__ << ": " << __LINE__ << std::endl; \
    std::cout << getLastCookError() << std::endl; \
    exit( 1 ); \
}

static std::string getLastError();

static std::string getLastCookError();

int

main( int argc, char **argv )

{

bool bUseInProcess = false;

if(bUseInProcess)

{

}

else

{

serverOptions.timeoutMs = 3000.0f;

}

ENSURE_SUCCESS( HAPI_Initialize( &session,
                                 &cookOptions,
                                 true,
                                 -1,
                                 nullptr,
                                 nullptr,
                                 nullptr,
                                 nullptr,
                                 nullptr ) );

ENSURE_SUCCESS(

HAPI_CookNode ( &session, newNode, &cookOptions ) );

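int cookStatus;
HAPI_Result cookResult;
// Poll the cook state until the node is ready or a call fails.
do
{
    cookResult = HAPI_GetStatus( &session, HAPI_STATUS_COOK_STATE, &cookStatus );
}
while ( cookStatus > HAPI_STATE_MAX_READY_STATE && cookResult == HAPI_RESULT_SUCCESS );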

ENSURE_SUCCESS( cookResult );

ENSURE_COOK_SUCCESS( cookStatus );

newNodePointInfo.count = 8;
newNodePointInfo.exists = true;

ENSURE_SUCCESS(

HAPI_AddAttribute( &session, sopNodeId, 0,

"P", &newNodePointInfo ) );

float positions[ 24 ] = { 0.0f, 0.0f, 0.0f,

1.0f, 0.0f, 0.0f,

1.0f, 0.0f, 1.0f,

0.0f, 0.0f, 1.0f,

0.0f, 1.0f, 0.0f,

1.0f, 1.0f, 0.0f,

1.0f, 1.0f, 1.0f,

0.0f, 1.0f, 1.0f};

ENSURE_SUCCESS(

HAPI_SaveHIPFile( &session,

"examples/geometry_point_cloud.hip",

false ) );

return 0;

}

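static std::string
getLastError()
{
    // Query the required buffer length first, then fetch the message.
    int bufferLength;
    HAPI_GetStatusStringBufLength( nullptr,
                                   HAPI_STATUS_CALL_RESULT,
                                   HAPI_STATUSVERBOSITY_ERRORS,
                                   &bufferLength );
    char * buffer = new char[ bufferLength ];
    HAPI_GetStatusString( nullptr, HAPI_STATUS_CALL_RESULT, buffer, bufferLength );
    std::string result( buffer );
    delete [] buffer;
    return result;
}

static std::string
getLastCookError()
{
    // Same two-step query, but for the result of the last cook.
    int bufferLength;
    HAPI_GetStatusStringBufLength( nullptr,
                                   HAPI_STATUS_COOK_RESULT,
                                   HAPI_STATUSVERBOSITY_ERRORS,
                                   &bufferLength );
    char * buffer = new char[ bufferLength ];
    HAPI_GetStatusString( nullptr, HAPI_STATUS_COOK_RESULT, buffer, bufferLength );
    std::string result( buffer );
    delete [] buffer;
    return result;
}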
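In the point cloud sample, the attribute info declares a count of 8 while the `positions` array holds 24 floats, which implies three interleaved components (x, y, z) per point. As a rough illustration of that layout, here is a sketch that flattens a list of points into such an array. `Point3`, `flattenPositions`, and `makeSamplePointCloud` are hypothetical names introduced only for this example and are not part of HAPI.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical point type used only for this illustration.
struct Point3
{
    float x, y, z;
};

// Flattens points into an interleaved x, y, z array, so the result
// holds points.size() * 3 floats.
std::vector<float> flattenPositions( const std::vector<Point3> & points )
{
    std::vector<float> flat;
    flat.reserve( points.size() * 3 );
    for ( const Point3 & p : points )
    {
        flat.push_back( p.x );
        flat.push_back( p.y );
        flat.push_back( p.z );
    }
    return flat;
}

// The eight points from the sample above, in the same order.
std::vector<Point3> makeSamplePointCloud()
{
    return {
        { 0.0f, 0.0f, 0.0f }, { 1.0f, 0.0f, 0.0f },
        { 1.0f, 0.0f, 1.0f }, { 0.0f, 0.0f, 1.0f },
        { 0.0f, 1.0f, 0.0f }, { 1.0f, 1.0f, 0.0f },
        { 1.0f, 1.0f, 1.0f }, { 0.0f, 1.0f, 1.0f } };
}
```

Flattening the eight sample points gives a 24-element array whose contents match the hard-coded `positions` array in the listing.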

Connecting Assets

For documentation on connecting assets, see Connecting Assets.

The sample below marshals a cube into Houdini Engine, then connects that cube to the subdivide node in Houdini. Note that the subdivide node is a standard Houdini node, so we did not need to first load its definition from file with HAPI_LoadAssetLibraryFromFile(). The result is then dumped to a file so it can be viewed in Houdini:

#include <HAPI/HAPI.h>

#include <cstdlib>

#include <iostream>

#include <string>

#define ENSURE_SUCCESS( result ) \
if ( (result) != HAPI_RESULT_SUCCESS ) \
{ \
    std::cout << "Failure at " << __FILE__ << ": " << __LINE__ << std::endl; \
    std::cout << getLastError() << std::endl; \
    exit( 1 ); \
}

#define ENSURE_COOK_SUCCESS( result ) \
if ( (result) != HAPI_RESULT_SUCCESS ) \
{ \
    std::cout << "Failure at " << __FILE__ << ": " << __LINE__ << std::endl; \
    std::cout << getLastCookError() << std::endl; \
    exit( 1 ); \
}

static std::string getLastError();

static std::string getLastCookError();

int

main( int argc, char **argv )

{

bool bUseInProcess = false;

if(bUseInProcess)

{

}

else

{

serverOptions.timeoutMs = 3000.0f;

}

ENSURE_SUCCESS( HAPI_Initialize( &session,
                                 &cookOptions,
                                 true,
                                 -1,
                                 nullptr,
                                 nullptr,
                                 nullptr,
                                 nullptr,
                                 nullptr ) );

ENSURE_SUCCESS(

HAPI_CookNode ( &session, newNode, &cookOptions ) );

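int cookStatus;
HAPI_Result cookResult;
// Poll the cook state until the node is ready or a call fails.
do
{
    cookResult = HAPI_GetStatus( &session, HAPI_STATUS_COOK_STATE, &cookStatus );
}
while ( cookStatus > HAPI_STATE_MAX_READY_STATE && cookResult == HAPI_RESULT_SUCCESS );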

ENSURE_SUCCESS( cookResult );

ENSURE_COOK_SUCCESS( cookStatus );

newNodePointInfo.count = 8;
newNodePointInfo.exists = true;

float positions[ 24 ] = { 0.0f, 0.0f, 0.0f,

0.0f, 0.0f, 1.0f,

0.0f, 1.0f, 0.0f,

0.0f, 1.0f, 1.0f,

1.0f, 0.0f, 0.0f,

1.0f, 0.0f, 1.0f,

1.0f, 1.0f, 0.0f,

1.0f, 1.0f, 1.0f };

int vertices[ 24 ] = { 0, 2, 6, 4,

2, 3, 7, 6,

2, 0, 1, 3,

1, 5, 7, 3,

5, 4, 6, 7,

0, 4, 5, 1 };

int face_counts [ 6 ] = { 4, 4, 4, 4, 4, 4 };

ENSURE_SUCCESS(

HAPI_CreateNode( &session, -1,

"Sop/subdivide",

"Cube Subdivider",

true, &subdivideNode ) );

ENSURE_SUCCESS(

HAPI_SaveHIPFile( &session,

"examples/connecting_assets.hip",

false ) );

return 0;

}

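static std::string
getLastError()
{
    // Query the required buffer length first, then fetch the message.
    int bufferLength;
    HAPI_GetStatusStringBufLength( nullptr,
                                   HAPI_STATUS_CALL_RESULT,
                                   HAPI_STATUSVERBOSITY_ERRORS,
                                   &bufferLength );
    char * buffer = new char[ bufferLength ];
    HAPI_GetStatusString( nullptr, HAPI_STATUS_CALL_RESULT, buffer, bufferLength );
    std::string result( buffer );
    delete [] buffer;
    return result;
}

static std::string
getLastCookError()
{
    // Same two-step query, but for the result of the last cook.
    int bufferLength;
    HAPI_GetStatusStringBufLength( nullptr,
                                   HAPI_STATUS_COOK_RESULT,
                                   HAPI_STATUSVERBOSITY_ERRORS,
                                   &bufferLength );
    char * buffer = new char[ bufferLength ];
    HAPI_GetStatusString( nullptr, HAPI_STATUS_COOK_RESULT, buffer, bufferLength );
    std::string result( buffer );
    delete [] buffer;
    return result;
}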
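The cube in the sample above is described by three flat arrays: 8 points, 24 vertex indices, and 6 face counts of 4. Because a mismatch between these arrays is easy to introduce by hand, it can help to check them before marshalling. Below is a minimal sketch of such a check, assuming every face is a polygon with at least three vertices. The helpers `isConsistentMesh` and `countPointUses` are hypothetical and are not part of HAPI.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: returns true when the face counts add up to the
// number of vertex indices and every index refers to an existing point.
// Assumes each face has at least three vertices.
bool isConsistentMesh( int pointCount,
                       const std::vector<int> & vertices,
                       const std::vector<int> & faceCounts )
{
    if ( pointCount < 0 )
        return false;

    std::size_t total = 0;
    for ( int count : faceCounts )
    {
        if ( count < 3 )
            return false;
        total += static_cast<std::size_t>( count );
    }
    if ( total != vertices.size() )
        return false;

    for ( int index : vertices )
        if ( index < 0 || index >= pointCount )
            return false;

    return true;
}

// Hypothetical helper: counts how many vertices reference each point.
// Out-of-range indices are ignored.
std::vector<int> countPointUses( int pointCount, const std::vector<int> & vertices )
{
    std::vector<int> uses( static_cast<std::size_t>( pointCount > 0 ? pointCount : 0 ), 0 );
    for ( int index : vertices )
        if ( index >= 0 && index < pointCount )
            ++uses[ static_cast<std::size_t>( index ) ];
    return uses;
}
```

For the cube data in the listing, the check passes and every point is referenced by exactly three vertices, as expected for a closed cube where each corner is shared by three quads.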

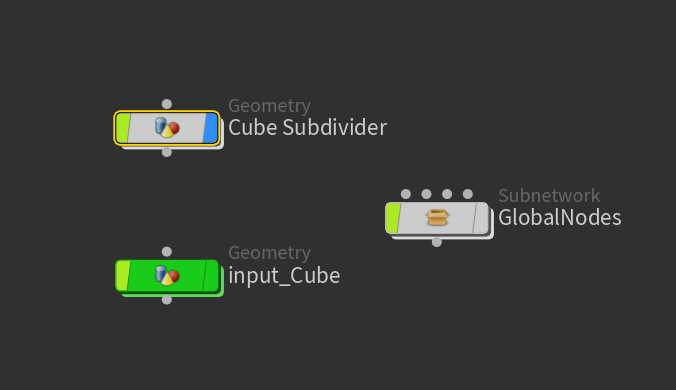

The result of the hip file is shown below. We see the input asset we created, as well as the subdivide asset. The "GlobalNodes" node is created automatically by HAPI when a new Houdini Engine session is started:

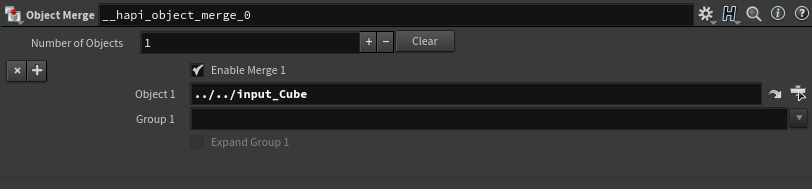

Diving into the subdivide asset, we see that a subdivide SOP node was created, but with an object merge node automatically created by HAPI feeding into it, with the path of the object merge being set to the Input node:

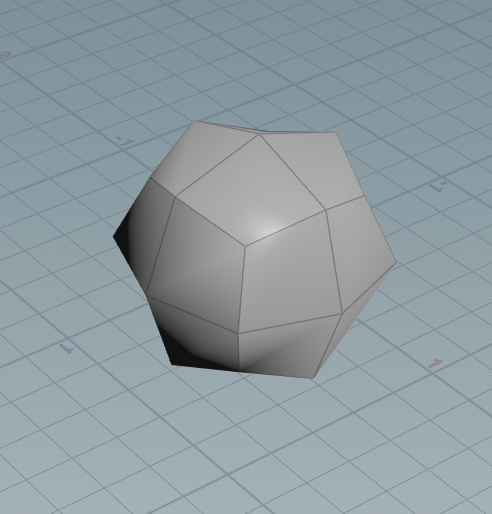

Finally, the result of the connected asset is seen (note that you may have to disable the display of the original input cube to see the subdivided cube):