HDK

Data flow in COPs is somewhat more complicated than in other node types. Cooking still follows the same recursive input-cooking scheme as other nodes, but the implementation differs considerably. COPs are optimized for multithreaded execution, and most of the cooking framework reflects this.

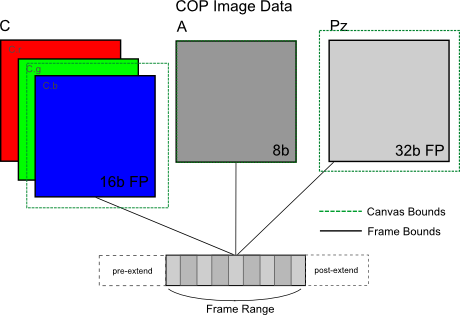

COPs process image data, which is divided into frames, planes and components. A frame represents all the image data at a specific time. Planes separate the different kinds of image data, such as color, alpha, normals, depth and masks, into vector and scalar planes. Vector planes are further divided into components. Each plane has its own data type, such as 8-bit integer or 32-bit floating point, which all of its components share.
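To make the frame/plane/component hierarchy concrete, here is a minimal C++ sketch of how it could be modeled. The types `DataFormat`, `Plane` and `Frame`, and the plane names in the comments, are illustrative assumptions rather than the HDK's TIL classes; the point is that a plane's per-pixel storage follows from its vector size and its single shared data type.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical model of the COP data hierarchy (not the HDK's TIL classes).
enum class DataFormat { Int8, Int16, Float16, Float32 };

// Size in bytes of one component value in the given format.
std::size_t bytesPerComponent(DataFormat f) {
    switch (f) {
        case DataFormat::Int8:    return 1;
        case DataFormat::Int16:   return 2;
        case DataFormat::Float16: return 2;
        case DataFormat::Float32: return 4;
    }
    return 0;
}

// A plane: scalar (vectorSize 1) or vector (2 to 4 components), with one
// data format shared by all of its components.
struct Plane {
    std::string name;  // illustrative names, e.g. "C" for color, "A" for alpha
    int vectorSize;
    DataFormat format;
    std::size_t bytesPerPixel() const {
        return static_cast<std::size_t>(vectorSize) * bytesPerComponent(format);
    }
};

// A frame: all of the image data at one time, split into planes.
struct Frame {
    double time;
    std::vector<Plane> planes;
};
```

For example, a three-component 32-bit float color plane needs 3 x 4 = 12 bytes per pixel, while an 8-bit scalar alpha plane needs 1.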

Each COP processes a sequence of images. A sequence has a fixed start and end frame, as well as a constant frame rate. All the images in the sequence share the same resolution and plane composition. These attributes cannot be animated.

A sequence can have extend conditions, which describe how the sequence should behave when cooked outside its frame range. The conditions are black, hold, hold for N frames, repeat and mirror. There is also a special type of sequence called a "Still Image", which can be used for static background plates: it is a single-image sequence that is available at any time.
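The extend conditions amount to a mapping from a requested frame to a frame inside the sequence, or to "no frame" (black). The sketch below shows one way to express that mapping. The `Extend` enum and `resolveFrame()` function are hypothetical, one condition is applied to both ends of the range for brevity, and the mirror case assumes the end frames are repeated at each reflection (Houdini's exact convention may differ).

```cpp
#include <cassert>
#include <optional>

// Hypothetical extend conditions, applied here to both ends of the range.
enum class Extend { Black, Hold, HoldN, Repeat, Mirror };

// Maps a requested frame to the source frame to read, or std::nullopt
// when the result should be black. start/end are inclusive.
std::optional<int> resolveFrame(int frame, int start, int end, Extend mode, int holdN = 0) {
    if (frame >= start && frame <= end) return frame;  // inside the range
    const int len = end - start + 1;
    const int offset = frame - start;                  // may be negative
    switch (mode) {
        case Extend::Black:
            return std::nullopt;
        case Extend::Hold:
            return frame < start ? start : end;
        case Extend::HoldN: {                          // hold, but only for N frames
            const int dist = frame < start ? start - frame : frame - end;
            if (dist > holdN) return std::nullopt;
            return frame < start ? start : end;
        }
        case Extend::Repeat: {
            const int m = ((offset % len) + len) % len;  // non-negative modulo
            return start + m;
        }
        case Extend::Mirror: {                         // assumes end frames repeat
            const int period = 2 * len;
            const int m = ((offset % period) + period) % period;
            return start + (m < len ? m : period - 1 - m);
        }
    }
    return std::nullopt;
}
```

With a sequence running from frame 1 to 3, repeat maps frame 4 to 1 and frame 0 to 3, while this mirror convention maps frames 4 and 5 to 3 and 2.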

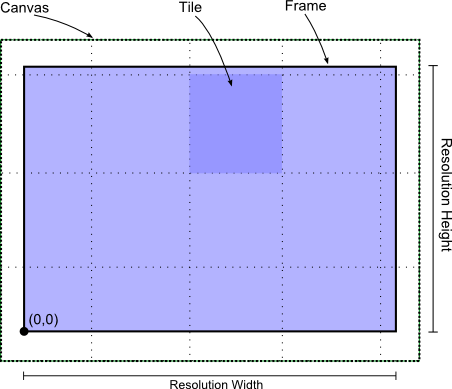

The resolution of the sequence determines the size of the frame bounds, which are constant for the whole sequence. However, each plane's image also has a canvas, which is where the image data actually exists. The canvas may lie inside the frame bounds, surround them, or be disjoint from them. For example, a small garbage matte in the corner of an image may only have a small canvas that contains it, while blurring an image can grow its canvas beyond the frame bounds to accommodate the blur's falloff. The canvas can differ in size between frames and between planes. The bottom-left corner of the frame bounds is always (0,0), and canvas bounds are defined relative to that origin.
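Because the frame bounds start at (0,0) and the canvas is expressed in the same coordinates, the relationship between the two reduces to simple box tests. The sketch uses a hypothetical half-open `Box` type and `classify()` helper; the intersection it computes is also the part of the canvas that remains when the canvas is cropped to the frame bounds.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical half-open pixel box [x0, x1) x [y0, y1) in frame coordinates,
// where the frame's bottom-left corner is (0, 0).
struct Box {
    int x0, y0, x1, y1;
    bool empty() const { return x0 >= x1 || y0 >= y1; }
    bool contains(const Box& o) const {
        return o.x0 >= x0 && o.y0 >= y0 && o.x1 <= x1 && o.y1 <= y1;
    }
};

Box intersect(const Box& a, const Box& b) {
    return {std::max(a.x0, b.x0), std::max(a.y0, b.y0),
            std::min(a.x1, b.x1), std::min(a.y1, b.y1)};
}

enum class Relation { Inside, Surrounds, Overlaps, Disjoint };

// How a plane's canvas relates to the (fixed) frame bounds.
Relation classify(const Box& canvas, const Box& frame) {
    if (intersect(canvas, frame).empty()) return Relation::Disjoint;
    if (frame.contains(canvas)) return Relation::Inside;     // e.g. a small matte
    if (canvas.contains(frame)) return Relation::Surrounds;  // e.g. after a blur
    return Relation::Overlaps;
}
```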

When images are written out, the part of the canvas outside the frame bounds is normally cropped away. If the output image format supports a data window, the user can optionally write that extra area as well.

The canvas is further divided into tiles for processing. By default, tiles are 200x200 pixels. Tiles at the top and right edges of the canvas may be partial tiles if the canvas does not divide evenly into tiles (which is normally the case).
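The tiling rule can be sketched as follows. This assumes tiles are anchored at the canvas's bottom-left corner; `tileCanvas()` and `Tile` are illustrative names, not HDK functions.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Tile { int x0, y0, x1, y1; };  // half-open pixel range

// Splits a canvas into tileSize x tileSize tiles, anchored (by assumption) at
// the canvas's bottom-left corner. Tiles on the top and right edges are
// clipped to the canvas, so they may be partial.
std::vector<Tile> tileCanvas(int cx0, int cy0, int cx1, int cy1, int tileSize = 200) {
    std::vector<Tile> tiles;
    for (int y = cy0; y < cy1; y += tileSize)
        for (int x = cx0; x < cx1; x += tileSize)
            tiles.push_back({x, y, std::min(x + tileSize, cx1), std::min(y + tileSize, cy1)});
    return tiles;
}
```

A 640x480 canvas, for instance, yields 4 x 3 = 12 tiles, and the top-right tile is only 40x80 pixels.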

COP nodes do not own image data: all tiles belong to a global COP cook cache. This allows better memory optimization for large image sequences and improves interactivity.
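One way to picture a cache that owns every tile, with nodes holding only keys, is a least-recently-used map keyed by node, plane, frame and tile. This is a generic LRU sketch under those assumptions; the `TileCache` class and its key layout are hypothetical, and this is not how Houdini's COP cook cache is actually implemented.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <map>
#include <string>
#include <tuple>
#include <utility>
#include <vector>

// Hypothetical key: node id, plane name, frame number, tile index.
using TileKey = std::tuple<int, std::string, int, int>;

// One process-wide cache owns all tile data; nodes refer to tiles only by key.
// When the byte budget is exceeded, least-recently-used tiles are evicted.
class TileCache {
public:
    explicit TileCache(std::size_t budgetBytes) : budget_(budgetBytes) {}

    void put(const TileKey& key, std::vector<float> pixels) {
        erase(key);  // replace any existing entry
        used_ += pixels.size() * sizeof(float);
        lru_.push_front(key);
        entries_[key] = Entry{std::move(pixels), lru_.begin()};
        while (used_ > budget_ && !lru_.empty()) {
            TileKey victim = lru_.back();  // copy: erase() destroys the list node
            erase(victim);
        }
    }

    // Returns the cached pixels (marking them most recently used), or nullptr.
    const std::vector<float>* get(const TileKey& key) {
        auto it = entries_.find(key);
        if (it == entries_.end()) return nullptr;
        lru_.splice(lru_.begin(), lru_, it->second.pos);
        return &it->second.pixels;
    }

private:
    struct Entry {
        std::vector<float> pixels;
        std::list<TileKey>::iterator pos;  // position in the LRU list
    };

    void erase(const TileKey& key) {
        auto it = entries_.find(key);
        if (it == entries_.end()) return;
        used_ -= it->second.pixels.size() * sizeof(float);
        lru_.erase(it->second.pos);
        entries_.erase(it);
    }

    std::size_t budget_;
    std::size_t used_ = 0;
    std::list<TileKey> lru_;
    std::map<TileKey, Entry> entries_;
};
```

Keeping pixel data out of the nodes means a single memory budget can be enforced across the whole network, rather than each node holding on to its own results.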

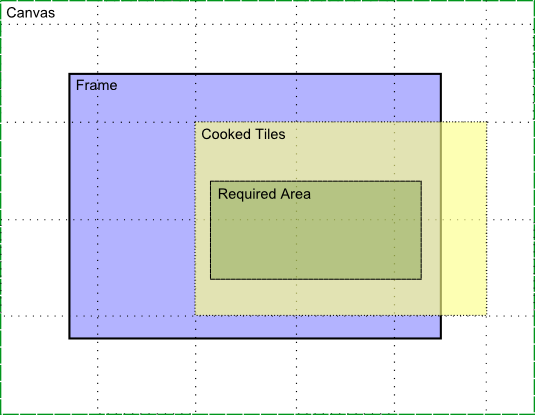

Unlike most other nodes, which cook all of their data in a single cook, COPs cook only what is needed to produce the requested output image. If only color is required from a sequence that contains color, alpha and depth, only color is cooked. The same applies to areas of the image: the canvas can grow quite large after many transforms and filters have been applied, but if an operation needs only a subregion of that canvas (such as for a crop or scale), only that subregion is cooked.
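The demand-driven behavior can be illustrated by computing, for a given request, which planes and which tiles actually need cooking. The helpers `planesToCook()` and `tilesToCook()` are hypothetical, and tiles are again assumed to be anchored at the canvas's bottom-left corner.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <set>
#include <string>
#include <utility>
#include <vector>

struct Rect { int x0, y0, x1, y1; };  // half-open pixel box, frame coordinates

// Only planes that are both requested and present in the sequence are cooked.
std::set<std::string> planesToCook(const std::set<std::string>& available,
                                   const std::set<std::string>& requested) {
    std::set<std::string> out;
    std::set_intersection(available.begin(), available.end(),
                          requested.begin(), requested.end(),
                          std::inserter(out, out.end()));
    return out;
}

// (column, row) indices of the canvas tiles that overlap the requested area.
std::vector<std::pair<int, int>> tilesToCook(const Rect& canvas, const Rect& req,
                                             int tileSize = 200) {
    const int x0 = std::max(canvas.x0, req.x0), y0 = std::max(canvas.y0, req.y0);
    const int x1 = std::min(canvas.x1, req.x1), y1 = std::min(canvas.y1, req.y1);
    std::vector<std::pair<int, int>> tiles;
    if (x0 >= x1 || y0 >= y1) return tiles;  // request misses the canvas entirely
    for (int row = (y0 - canvas.y0) / tileSize; row <= (y1 - 1 - canvas.y0) / tileSize; ++row)
        for (int col = (x0 - canvas.x0) / tileSize; col <= (x1 - 1 - canvas.x0) / tileSize; ++col)
            tiles.emplace_back(col, row);
    return tiles;
}
```

For a 1000x800 canvas starting at (-100,-100), which holds 20 tiles, a request for the 640x480 frame touches only 12 of them.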

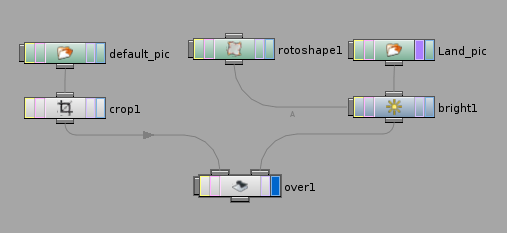

Compositing occurs in three distinct stages:

The first stage works like cooking in most other nodes: the inputs are opened and the sequence information is computed recursively until all data is up to date. The sequence information is stored in a TIL_Sequence inside the COP2_Node (COP2_Node::mySequence). This stage works the same regardless of which output planes and area are requested, and it occurs in the virtual method COP2_Node::cookSequenceInfo().

The second stage looks at the output image that is requested for the cook. It ensures that all canvas sizes are computed and builds all the required areas for each node. It then determines which required areas are currently cached and which areas need to be cooked. Each node being cooked is presented with the area required for the cook and asked to determine which areas it needs from its inputs. This occurs in the virtual method COP2_Node::getInputDependenciesForOutputArea(). The input image data may come from a different plane or frame.
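As an illustration of what a node reports in this stage, the sketch below models two operations behind a hypothetical `Op` interface: a box blur, which needs its output area grown by the blur radius, and an integer translate, which needs the output area shifted back by the offset. The interface only loosely mirrors the idea behind COP2_Node::getInputDependenciesForOutputArea(); it is not that method's signature.

```cpp
#include <cassert>

struct Area { int x0, y0, x1, y1; };  // half-open pixel box

// Hypothetical: given the area a node must produce, report the input area it reads.
struct Op {
    virtual ~Op() = default;
    virtual Area inputAreaFor(const Area& out) const = 0;
};

// A box blur of radius r reads r extra pixels on every side of the output area.
struct BoxBlur : Op {
    int radius;
    explicit BoxBlur(int r) : radius(r) {}
    Area inputAreaFor(const Area& o) const override {
        return {o.x0 - radius, o.y0 - radius, o.x1 + radius, o.y1 + radius};
    }
};

// An integer translate by (dx, dy) reads the output area shifted back by the offset.
struct Translate : Op {
    int dx, dy;
    Translate(int x, int y) : dx(x), dy(y) {}
    Area inputAreaFor(const Area& o) const override {
        return {o.x0 - dx, o.y0 - dy, o.x1 - dx, o.y1 - dy};
    }
};
```

Chaining the calls from the output node upstream gives the area each input must cook: for a 100x100 output behind a 10-pixel translate and a radius-5 blur, the blur's input needs the box from (-15,-5) to (95,105).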

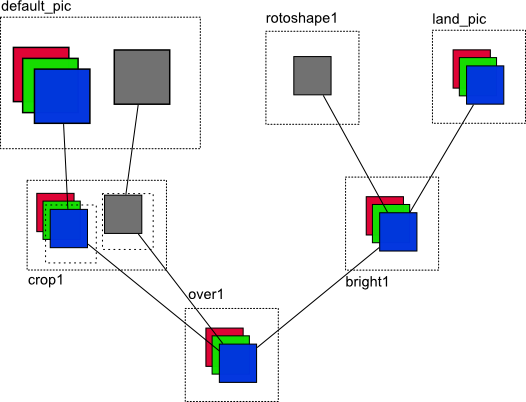

Once all inputs in the network have been processed, the dependency tree is ordered and optimized. The tree resembles the structure of the COP network, but with multiple entries per node (one per plane to be cooked).
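How the tree is ordered is not described here. A standard way to order such a tree so that every entry comes after all of its inputs is a topological sort; the sketch below uses Kahn's algorithm over hypothetical per-plane entries (`Dep`, `cookOrder()`), as a stand-in for whatever ordering and optimization the real scheduler performs.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <vector>

// Hypothetical dependency entry: one per (node, plane) to be cooked.
struct Dep {
    std::string node, plane;
    std::vector<int> inputs;  // indices of the entries this one reads from
};

// Kahn's algorithm: returns an order in which every entry follows its inputs.
std::vector<int> cookOrder(const std::vector<Dep>& deps) {
    const int n = static_cast<int>(deps.size());
    std::vector<int> pending(n, 0);             // unfinished inputs per entry
    std::vector<std::vector<int>> consumers(n);
    for (int i = 0; i < n; ++i)
        for (int input : deps[i].inputs) {
            ++pending[i];
            consumers[input].push_back(i);
        }
    std::queue<int> ready;
    for (int i = 0; i < n; ++i)
        if (pending[i] == 0) ready.push(i);
    std::vector<int> order;
    while (!ready.empty()) {
        int i = ready.front();
        ready.pop();
        order.push_back(i);
        for (int c : consumers[i])
            if (--pending[c] == 0) ready.push(c);
    }
    return order;  // shorter than deps only if the graph has a cycle
}
```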

The third stage is the multithreaded image computation. Several worker threads traverse the tree, pulling off batches of tiles that need to be cooked for various nodes. Threads can work on different nodes concurrently, work together on one node, or some combination of the two. This provides good throughput for networks with lots of file I/O, while still allowing cooperative processing of expensive nodes.
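A simplified picture of worker threads pulling batches from a shared pool: each thread claims the next unclaimed work item through an atomic counter, so threads naturally spread across nodes or share the tiles of one node. This is a generic sketch with hypothetical `WorkItem` and `runWorkers()` names, not the actual COP scheduler, which must also respect the dependency order between nodes.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical work item: one batch of tiles for one node.
struct WorkItem { int node; int tileIndex; };

// Each worker repeatedly claims the next unclaimed item, so every item is
// cooked exactly once regardless of how many threads are running.
void runWorkers(const std::vector<WorkItem>& items, unsigned numThreads,
                const std::function<void(const WorkItem&)>& cook) {
    std::atomic<std::size_t> next{0};
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < numThreads; ++t)
        workers.emplace_back([&] {
            for (std::size_t i = next.fetch_add(1); i < items.size(); i = next.fetch_add(1))
                cook(items[i]);
        });
    for (auto& w : workers) w.join();
}
```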

Each thread processes one batch of tiles (TIL_Tile objects) at a time; this batch is called a TIL_TileList. A tile list contains one to four tiles from a single plane, one per component, depending on the vector size of the TIL_Plane. The virtual method COP2_Node::cookMyTile() can request image data from its inputs by using COP2_Node::inputTile() (for a single tile) or COP2_Node::inputRegion() (for a larger area). The requested image data must lie within the area that was reported earlier, in the second stage.
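The structure of a tile list can be sketched with stand-in types: one tile per component, so a scalar plane yields one tile and a four-component plane yields four. `TileData`, `TileListSketch`, `makeTileList()` and `applyGain()` are hypothetical and are not the TIL_Tile/TIL_TileList API; `applyGain()` only shows the shape of a per-tile cook that visits each component in turn.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Stand-ins for illustration only (not TIL_Tile / TIL_TileList).
struct TileData {
    int width, height;
    std::vector<float> pixels;  // one component's values for this tile
};

// One tile per component of a single plane, all at the same canvas position.
struct TileListSketch {
    std::string plane;
    int x0, y0;
    std::vector<TileData> components;
};

TileListSketch makeTileList(const std::string& plane, int vectorSize,
                            int x0, int y0, int w, int h) {
    if (vectorSize < 1 || vectorSize > 4)
        throw std::invalid_argument("a plane has 1 to 4 components");
    TileListSketch list{plane, x0, y0, {}};
    for (int c = 0; c < vectorSize; ++c)
        list.components.push_back(
            TileData{w, h, std::vector<float>(static_cast<std::size_t>(w) * h, 0.0f)});
    return list;
}

// Shape of a per-tile cook: process each component tile of the list in turn.
void applyGain(TileListSketch& list, float gain) {
    for (TileData& tile : list.components)
        for (float& p : tile.pixels) p *= gain;
}
```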

Generally, you don't need to know how the scheduler orders the cooking of nodes. For a single node, you only need to know which input image data is required to produce the requested output. You can also give the scheduler hints, such as asking it to avoid cooking the same node with more than one thread.

A GPU (video card) can also be used to process image data; the principle is the same, with one tile list processed at a time on the GPU.

To get a raster from a COP, the COP must first be opened successfully (like a file), and its sequence information should be queried to find out which data is available. Color and Alpha are always available, but other deep raster planes may also be present. Once a plane has been selected, COP2_Node::cookToRaster() can be called with an allocated TIL_Raster to cook the image. Afterwards, close() must be called to clean up.
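The open/cook/close protocol is a natural fit for a C++ scope guard, which guarantees the close even if cooking throws. The sketch uses a hypothetical `MockCop` rather than COP2_Node, since the real open(), close() and cookToRaster() signatures are not given here, and it assumes close() is only required after a successful open().

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical stand-in for a COP; not the HDK API.
struct MockCop {
    int openCount = 0;
    bool open() { ++openCount; return true; }
    void close() { --openCount; }
};

// Scope guard: closes the COP on every exit path, including exceptions.
class OpenGuard {
public:
    explicit OpenGuard(MockCop& cop) : cop_(cop), ok_(cop.open()) {}
    ~OpenGuard() { if (ok_) cop_.close(); }
    OpenGuard(const OpenGuard&) = delete;
    OpenGuard& operator=(const OpenGuard&) = delete;
    bool ok() const { return ok_; }
private:
    MockCop& cop_;
    bool ok_;
};

// Runs the cook while the COP is open; returns false if opening failed.
template <class CookFn>
bool cookOpened(MockCop& cop, CookFn&& cook) {
    OpenGuard guard(cop);
    if (!guard.ok()) return false;
    cook();  // may throw; the guard still closes the COP
    return true;
}
```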

An example of cooking an image:

In addition to grabbing the image as-is, you can also specify a data format for the TIL_Raster which differs from the native COP's format. The resolution can also be reduced or enlarged, and the image will be cooked to that size.
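Cooking to a different data format and resolution can be pictured as a per-component conversion plus a resample. The sketch converts float data to 8-bit with clamping and rounding and uses nearest-neighbor sampling; `toUint8()` and `resampleTo8bit()` are hypothetical, and the filtering Houdini actually applies when scaling is not described here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts one float component to 8-bit, clamping to [0, 1] and rounding.
std::uint8_t toUint8(float v) {
    float c = std::clamp(v, 0.0f, 1.0f);
    return static_cast<std::uint8_t>(std::lround(c * 255.0f));
}

// Nearest-neighbor resample of a single-channel w x h float image to an
// nw x nh 8-bit image (an illustrative filter choice, not Houdini's).
std::vector<std::uint8_t> resampleTo8bit(const std::vector<float>& src,
                                         int w, int h, int nw, int nh) {
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(nw) * nh);
    for (int y = 0; y < nh; ++y)
        for (int x = 0; x < nw; ++x) {
            int sx = std::min(w - 1, x * w / nw);  // source column
            int sy = std::min(h - 1, y * h / nh);  // source row
            dst[static_cast<std::size_t>(y) * nw + x] =
                toUint8(src[static_cast<std::size_t>(sy) * w + sx]);
        }
    return dst;
}
```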