Adam Ferestad

About Me

Expertise

Technical Director

Industry:

Film/TV

Houdini Engine

Availability

Not Specified

Recent Forum Posts

Relative reference to file for HelpUrl on an HDA July 20, 2024 15:30

Hey all, I'm working on an HDA that uses an on-disk Python library. I was hoping to use the same directory structure to house my help docs, so I could update them without rebuilding the node every time the docs change. I could host them on our web server, but I would prefer to make them available offline and protect them from outages on our web server. Unfortunately, I cannot seem to get it to work: when I click the help button, nothing happens. Does anyone know if this is possible, or do I just need to set up a web server for it? Also, the reference needs to be relative, since users may have different install directory paths.

Note: I have had to switch my HOUDINI_EXTERNAL_HELP_BROWSER env variable to true, because something is broken in my Houdini installs that causes an infinite loop when I try to use Houdini's built-in browser for the help. Not sure whether that has something to do with it, though.

Update menu UI on other parm change June 26, 2024 15:42

I have a menu in an HDA that I populate from a script, and the script depends on some parms on both the local node and a referenced node. When the referenced node updates, the menu state seems to recook and the UI updates properly. But when I change the parm on the same node, the UI does not update. Calling hou.Parm.rawValue on it returns the correct value, but the displayed value is still the previous state. You have to click on the menu to get the correct values to show.

Here is the code I am using to generate the menu. hou.pwd().parm("engine") acts as an override for the target node behavior:

    # Default: whether the target node renders on the GPU (check_rendergpu lives in the HDA's Python module).
    rendergpu = hou.phm().check_rendergpu(hou.pwd().parm("target").evalAsNode())
    # If the local "engine" parm is enabled, it overrides the target node's setting.
    if not hou.pwd().parm("engine").isDisabled():
        rendergpu = hou.pwd().parm("engine").rawValue() == "xpu"
    # Keep only the entries that match the CPU/GPU mode.
    cpugpu = filter(lambda x: x["gpu"] == rendergpu, hou.phm().retrieveData())
    # A menu script returns a flat list of alternating tokens and labels.
    menuList = []
    for item in cpugpu:
        menuList.append(item["id"])
        menuList.append(item["name"])
    return menuList
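The menu script follows Houdini's convention of returning a flat list that alternates menu tokens and labels. Below is a minimal, Houdini-free sketch of the same filtering logic that can be tested outside an HDA. The function name `build_engine_menu` and the sample entries are hypothetical, and the dict keys (`id`, `name`, `gpu`) are assumed to match what the HDA module's `retrieveData()` returns.

```python
from typing import Iterable, List, Mapping


def build_engine_menu(entries: Iterable[Mapping], use_gpu: bool) -> List[str]:
    """Return a flat [token, label, token, label, ...] list for a menu script.

    Hypothetical helper: `entries` is assumed to look like the dicts returned
    by the HDA module's retrieveData() (keys "id", "name", "gpu").
    """
    menu: List[str] = []
    for entry in entries:
        # Keep only entries whose CPU/GPU flag matches the requested mode.
        if entry["gpu"] == use_gpu:
            menu.append(entry["id"])    # token stored in the parm
            menu.append(entry["name"])  # label shown in the UI
    return menu


if __name__ == "__main__":
    sample = [
        {"id": "cpu_a", "name": "CPU Engine A", "gpu": False},
        {"id": "xpu_b", "name": "XPU Engine B", "gpu": True},
    ]
    print(build_engine_menu(sample, use_gpu=True))  # ['xpu_b', 'XPU Engine B']
```

Inside the actual menu script, the `engine`/`target` override logic would still decide `use_gpu` before calling such a helper. Keeping the list-building step pure just makes it easy to check in isolation.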

I have been trying to figure out whether I can put a callback on hou.pwd().parm("engine") that would force the menu to redraw, but no dice yet.

How to calculate a diffusion from/over a point cloud? March 31, 2016 19:10

I have been looking on some math forums and posting for a couple of days, and it occurred to me that the Houdini forums should have some people who know about this stuff too. I am currently working on my MA project at SCAD, and I have elected to create a tool that will allow an artist to make a history-dependent distribution of an attribute over a mesh. The process I have in mind for the tool goes like this:

1. The artist provides the mesh with an initial state of attributes on it, either from prim/point attributes or from a map they have painted.
2. I make sure all of the normals are calculated.
3. Scatter points densely on the mesh and transfer the normals and the attribute being diffused.
4. Iterate over the points and measure a small neighborhood around each point to capture the point numbers to calculate over.
5. Perform a quadratic approximation of the attribute over the neighborhood (this is where I am right now).
6. Calculate permeability attributes (not wanting to give away too much on this just yet).
7. Loop:
   1. Gather permeability data.
   2. Calculate the permeability of each point based on float ramps, which represent timelines.
   3. Advect the attribute.
8. Save the data to a map, which the artist can then use to control downstream aspects of the scene.
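Steps 4 and 7 can be prototyped on a plain list of points before any permeability is involved. The sketch below is a minimal Python illustration under stated assumptions, not the post's formulation. It uses a brute-force radius search for the neighborhood, and in place of the flux equation it uses a simple graph-Laplacian (neighbor-difference) diffusion update. `radius_neighbors`, `diffuse_step`, and `rate` are hypothetical names. In Houdini itself, the VEX functions `pcfind()` or `nearpoints()` would handle the neighborhood query far faster.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def radius_neighbors(points: Sequence[Point], radius: float) -> List[List[int]]:
    """Step 4: for every point, collect indices of other points within `radius`.

    Brute force O(n^2); fine for testing, too slow for a dense scatter.
    """
    neighbors: List[List[int]] = [[] for _ in points]
    for i, p in enumerate(points):
        for j in range(i + 1, len(points)):
            if math.dist(p, points[j]) <= radius:
                # The relation is symmetric, so record it on both points.
                neighbors[i].append(j)
                neighbors[j].append(i)
    return neighbors


def diffuse_step(values: Sequence[float], neighbors: List[List[int]],
                 rate: float) -> List[float]:
    """One explicit diffusion step: each point moves toward its neighbors.

    Every neighbor pair exchanges rate * (a_j - a_i) symmetrically, so the total
    amount of the attribute is conserved. Keeping rate * (largest neighbor count)
    <= 1 makes each update a weighted average, so values cannot overshoot.
    """
    return [
        a_i + rate * sum(values[j] - a_i for j in neighbors[i])
        for i, a_i in enumerate(values)
    ]


if __name__ == "__main__":
    # Five points on a line, spacing 1; only the middle one starts "full".
    pts = [(float(x), 0.0, 0.0) for x in range(5)]
    nbrs = radius_neighbors(pts, radius=1.0)
    attr = [0.0, 0.0, 1.0, 0.0, 0.0]
    for _ in range(10):
        attr = diffuse_step(attr, nbrs, rate=0.2)
    print([round(a, 3) for a in attr], round(sum(attr), 6))
```

A per-point or per-pair permeability could later scale `rate` inside the loop. That is one possible hook for steps 6 and 7, stated here as an assumption only.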

I am currently having some trouble with the basic diffusion step. I am trying to get a system working which will be able to advect the attribute over the point cloud before I worry about the permeability stuff. I have a good idea what I am going to do for all of that, but this part is throwing me for a loop. I am working with this general equation:

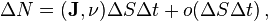

Since the little-o term should be virtually 0, I am treating it as negligible for now. ΔS will be the search radius used to generate the neighborhood, and Δt will be 1 for all intents and purposes, since at this point it is just an arbitrary step in the loop. Inside the inner product, v is defined in the documentation as a normal, so I am pretty sure it should be the surface normal, which I will capture from the original geometry. The other term in the inner product is the “Flux Vector”, which is what I am currently trying to figure out how to calculate. The current formula I have is:

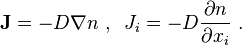

Plugging this into the first equation, D should be a diffusion constant that controls the speed of diffusion (as I understand it). Someone on a math forum mentioned that it could be a tensor (a matrix), which I will look into later once the basics work. The real issue for me is the grad(n) term. I am working with a point cloud, which is essentially 4-dimensional at the minimum, since A = n(x,y,z). So I am trying to figure out how to calculate the partial derivatives around each point. That is where the idea of using the neighborhood to find a quadratic approximation came from. I know one could easily calculate the partial derivatives of the approximation, but getting that approximation is proving… interesting. I am relatively sure that the advection step should be fairly straightforward: A_(t+1) = A_t + ΔN*P(x,y,z).
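The quadratic approximation from step 5 can be set up as an ordinary least-squares fit. The sketch below is one way to do it, not the author's method. It fits A ≈ c0 + c1·dx + c2·dy + c3·dz + c4·dx² + c5·dy² + c6·dz² + c7·dx·dy + c8·dx·dz + c9·dy·dz on offsets from the center point, so the gradient at the center is just (c1, c2, c3). It also assumes the second formula is Fick's first law, J = -D grad(n), with a scalar D. The function names are hypothetical. The fit needs at least 10 well-spread samples. Points scattered on a surface are locally close to coplanar, which makes this full 3D fit singular (the sketch raises `ValueError`) or badly conditioned. That is one argument for the projected 2D option the post also raises.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _solve(m: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve m x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [list(row) + [b] for row, b in zip(m, rhs)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular system: neighborhood too small or degenerate")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def quadratic_gradient(center: Vec3, pts: Sequence[Vec3],
                       vals: Sequence[float]) -> Vec3:
    """Least-squares quadratic fit of vals over pts; returns grad(A) at center."""
    if len(pts) != len(vals) or len(pts) < 10:
        raise ValueError("need matching pts/vals and at least 10 samples")
    offsets = [(p[0] - center[0], p[1] - center[1], p[2] - center[2]) for p in pts]
    # Scale offsets to roughly [-1, 1] so the normal equations stay well conditioned.
    h = max(max(abs(c) for c in d) for d in offsets)
    if h == 0.0:
        raise ValueError("all samples coincide with the center")
    rows = []
    for dx, dy, dz in offsets:
        u, v, w = dx / h, dy / h, dz / h
        rows.append([1.0, u, v, w, u * u, v * v, w * w, u * v, u * w, v * w])
    k = len(rows[0])
    # Normal equations: (B^T B) c = B^T vals
    btb = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    btv = [sum(r[i] * s for r, s in zip(rows, vals)) for i in range(k)]
    c = _solve(btb, btv)
    # dA/dx = (dA/du) / h at the center, and dA/du there is the linear coefficient.
    return (c[1] / h, c[2] / h, c[3] / h)


def fick_flux(grad: Vec3, diffusivity: float) -> Vec3:
    """Flux J = -D * grad(n), assuming a scalar D (Fick's first law)."""
    return (-diffusivity * grad[0], -diffusivity * grad[1], -diffusivity * grad[2])


if __name__ == "__main__":
    # Sample f = 1 + 2x + 3y - z + x^2 + 0.5yz on a 3x3x3 grid around the origin.
    grid = [(i * 0.1, j * 0.1, k * 0.1)
            for i in (-1, 0, 1) for j in (-1, 0, 1) for k in (-1, 0, 1)]
    f = [1 + 2 * x + 3 * y - z + x * x + 0.5 * y * z for x, y, z in grid]
    g = quadratic_gradient((0.0, 0.0, 0.0), grid, f)
    print(g)  # approximately (2.0, 3.0, -1.0), up to rounding
    print(fick_flux(g, diffusivity=0.5))
```

Because the sample data is exactly quadratic, the fit recovers the true gradient. On real scattered data the result is a local estimate whose quality depends on the search radius.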

I am not sure whether I should be working in 4 dimensions, or whether I should transform the neighborhood to the origin, project the points into a plane, and then use the attribute as the output of a 2D mapping. Or is there an easier way to look at or solve the whole problem? I am also not sure this is the right forum to ask in, but I figured scripting would have the most people familiar with the more theoretical aspects of how these things are produced. I am including the file I have been working in, so you can see the direction I have been taking as well as what I have been attempting.
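For the second option (moving the neighborhood to the origin and projecting it into a plane), here is a minimal sketch. It assumes the plane is the tangent plane defined by the point's surface normal. It builds an orthonormal (t1, t2) frame perpendicular to the normal and returns 2D coordinates for each neighbor. A second helper maps a 2D gradient back to a 3D vector lying in that plane. All names are hypothetical. The resulting (u, v) samples could then feed a 6-term 2D quadratic fit (1, u, v, u², uv, v²) solved by least squares. Such a fit is well posed for nearly coplanar surface samples.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a: Vec3) -> Vec3:
    length = math.sqrt(_dot(a, a))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return (a[0] / length, a[1] / length, a[2] / length)


def tangent_frame(normal: Vec3) -> Tuple[Vec3, Vec3, Vec3]:
    """Return orthonormal (t1, t2, n) with t1 and t2 spanning the tangent plane."""
    n = _normalize(normal)
    # Pick a world axis that is not nearly parallel to n so the cross product is well defined.
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    t1 = _normalize(_cross(helper, n))
    t2 = _cross(n, t1)  # unit length already, since n and t1 are orthonormal
    return t1, t2, n


def project_neighborhood(center: Vec3, normal: Vec3,
                         pts: Sequence[Vec3]) -> List[Tuple[float, float]]:
    """Translate pts so center is the origin, then drop the normal component."""
    t1, t2, _ = tangent_frame(normal)
    coords = []
    for p in pts:
        d = (p[0] - center[0], p[1] - center[1], p[2] - center[2])
        coords.append((_dot(d, t1), _dot(d, t2)))
    return coords


def tangent_gradient_to_3d(grad_uv: Tuple[float, float], normal: Vec3) -> Vec3:
    """Map a (dA/du, dA/dv) gradient back to a 3D vector in the tangent plane."""
    t1, t2, _ = tangent_frame(normal)
    gu, gv = grad_uv
    return (gu * t1[0] + gv * t2[0],
            gu * t1[1] + gv * t2[1],
            gu * t1[2] + gv * t2[2])


if __name__ == "__main__":
    center, normal = (1.0, 1.0, 1.0), (0.0, 0.0, 1.0)
    nbrs = [(1.5, 1.0, 1.0), (1.0, 1.25, 1.02)]
    print(project_neighborhood(center, normal, nbrs))
```

Using the same normal for the projection and for mapping the gradient back keeps the frame consistent. The normal component of each offset is simply discarded, which assumes the neighborhood is small relative to the surface curvature.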