Spider-Man: Into the Spider-Verse has proved to be one of the most innovative animated features released in recent times. Directors Bob Persichetti, Peter Ramsey and Rodney Rothman tasked Sony Pictures Imageworks with combining 3D animation with more of a 2D aesthetic to bring the story of the latest Spider-Man, Miles Morales, to life. That meant that Imageworks had to adapt - and even ‘break’ - its existing animation pipeline to deliver the film. In doing so, the studio still used its go-to tools, including Houdini, to realise the computer-generated imagery. But it did so in several new ways, as key members of the team share with SideFX.

The trailer for Into the Spider-Verse.

Identifying CG solutions with a hand-drawn feel

With Into the Spider-Verse, the filmmakers were looking to directly reference comic book panels in telling their story. In some ways, this was the antithesis of much of what Imageworks had been crafting on previous shows, both in animated films and in visual effects, where the goal was photorealism or at least material photorealism. Here they needed to move away from that concept.

“After our initial meeting with the production designer, Justin Thompson, it was clear he was seeking a new direction,” outlines Imageworks FX supervisor Pav Grochola. “Instead of realism, he strived for a highly designed comic book language. This had huge implications for us in the effects department. It was critical that our effects looked artistically designed to fit within the overall comic book aesthetic.”

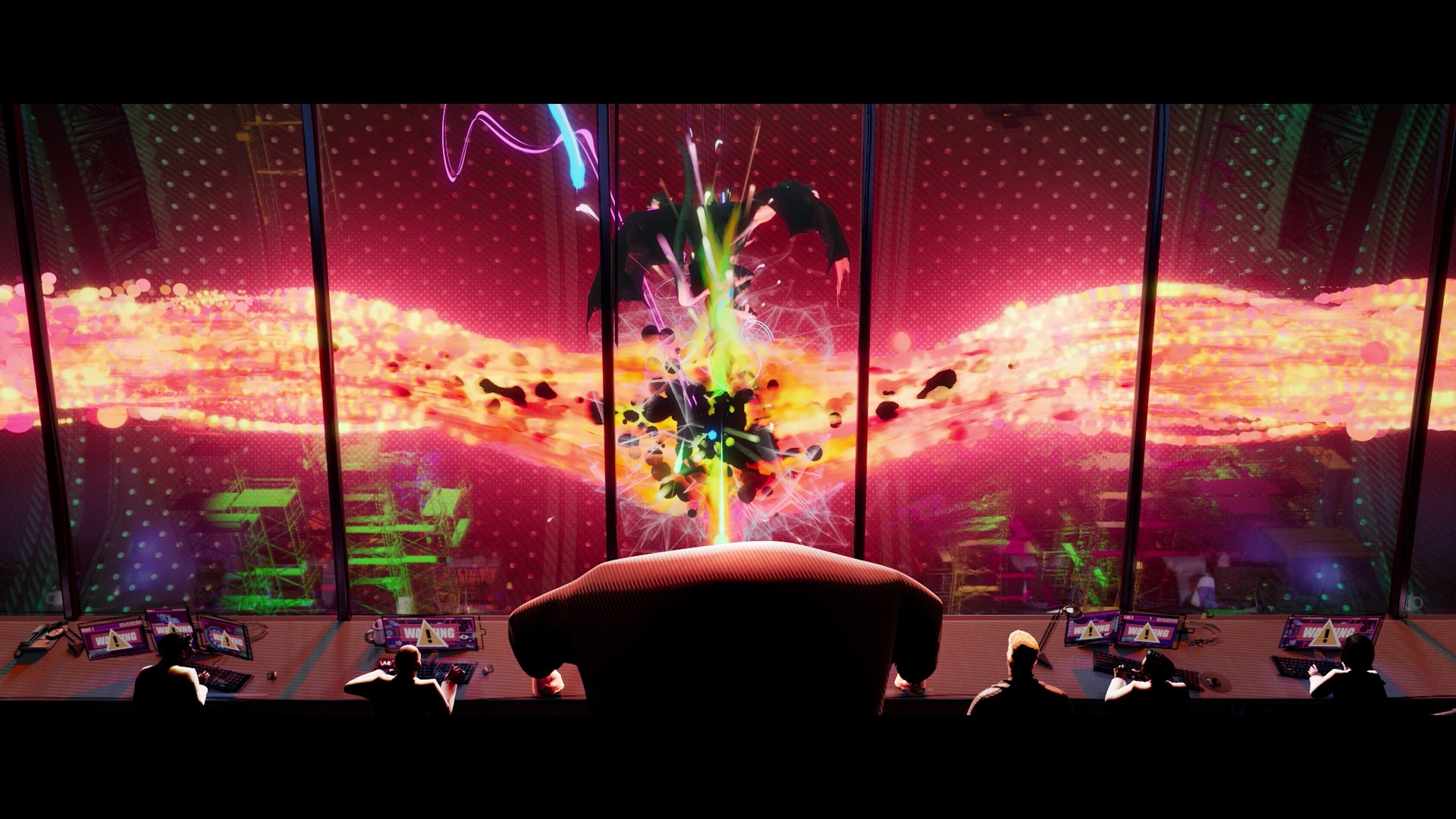

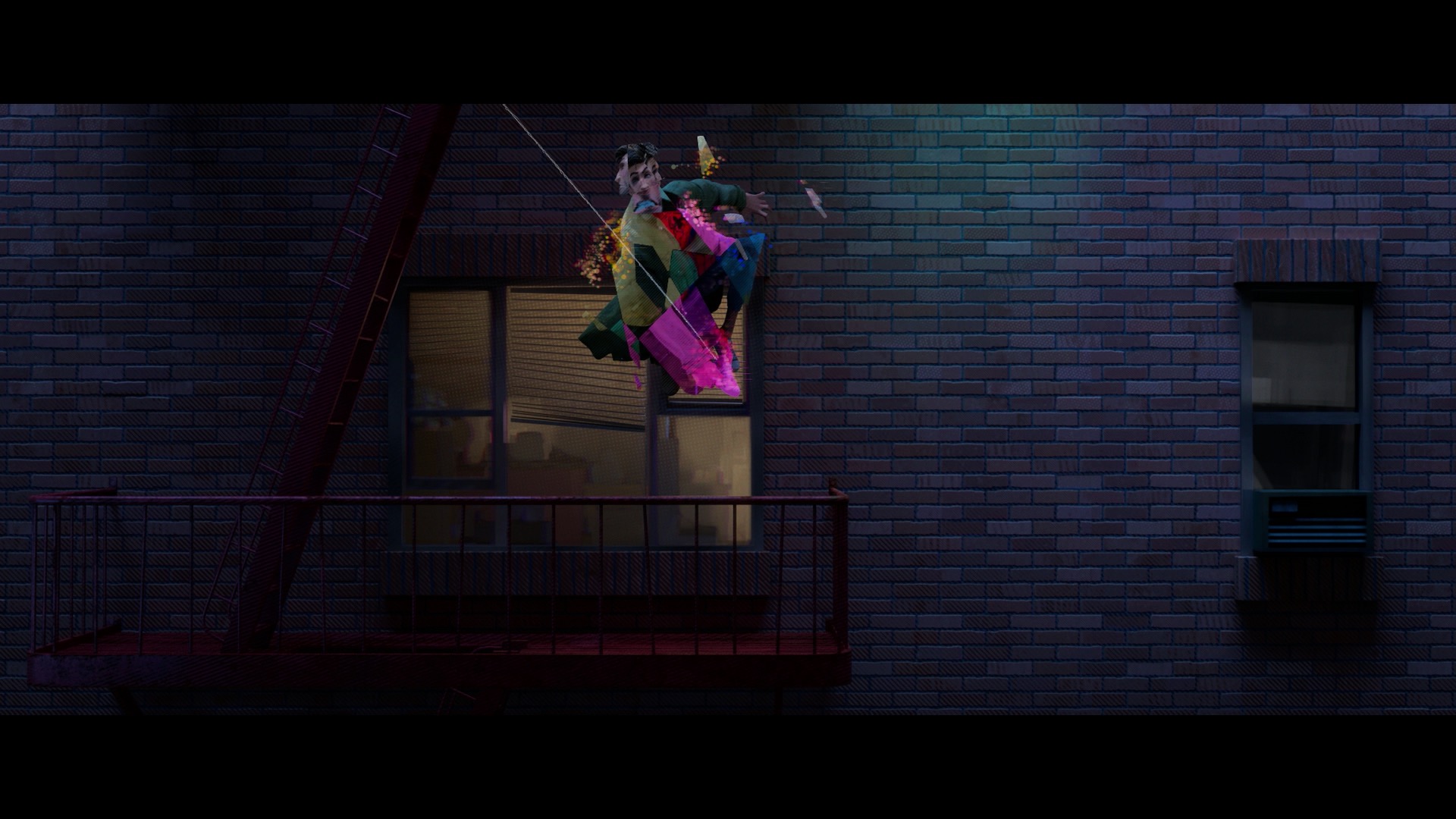

This frame from the film shows the mix of materials and effects that would typically make up a scene.

That did not mean effects simulations would not be used in the film; rather, notes Grochola, “it was clear we couldn’t count on simulation algorithms alone to be the design. Furthermore, photorealistic simulations simply wouldn’t fit the language of the film. Houdini gave us the freedom to easily manipulate our effects to ensure we nailed the design.”

“Houdini has a toolbox approach to problem solving,” adds Grochola. “If the tool doesn’t exist to solve a technical challenge, Houdini provides all the building blocks necessary to easily build that tool.”

For that reason, Imageworks still looked to Houdini to craft unconventional effects, and Into the Spider-Verse certainly had highly unconventional ones. These included ‘glitching’ characters and buildings, a ‘multi-verse’ world, heavily stylised rigid body dynamics, custom sprite systems, 2D-inspired designs, and a need to highlight individual character ‘lines’ on 3D modelled characters.

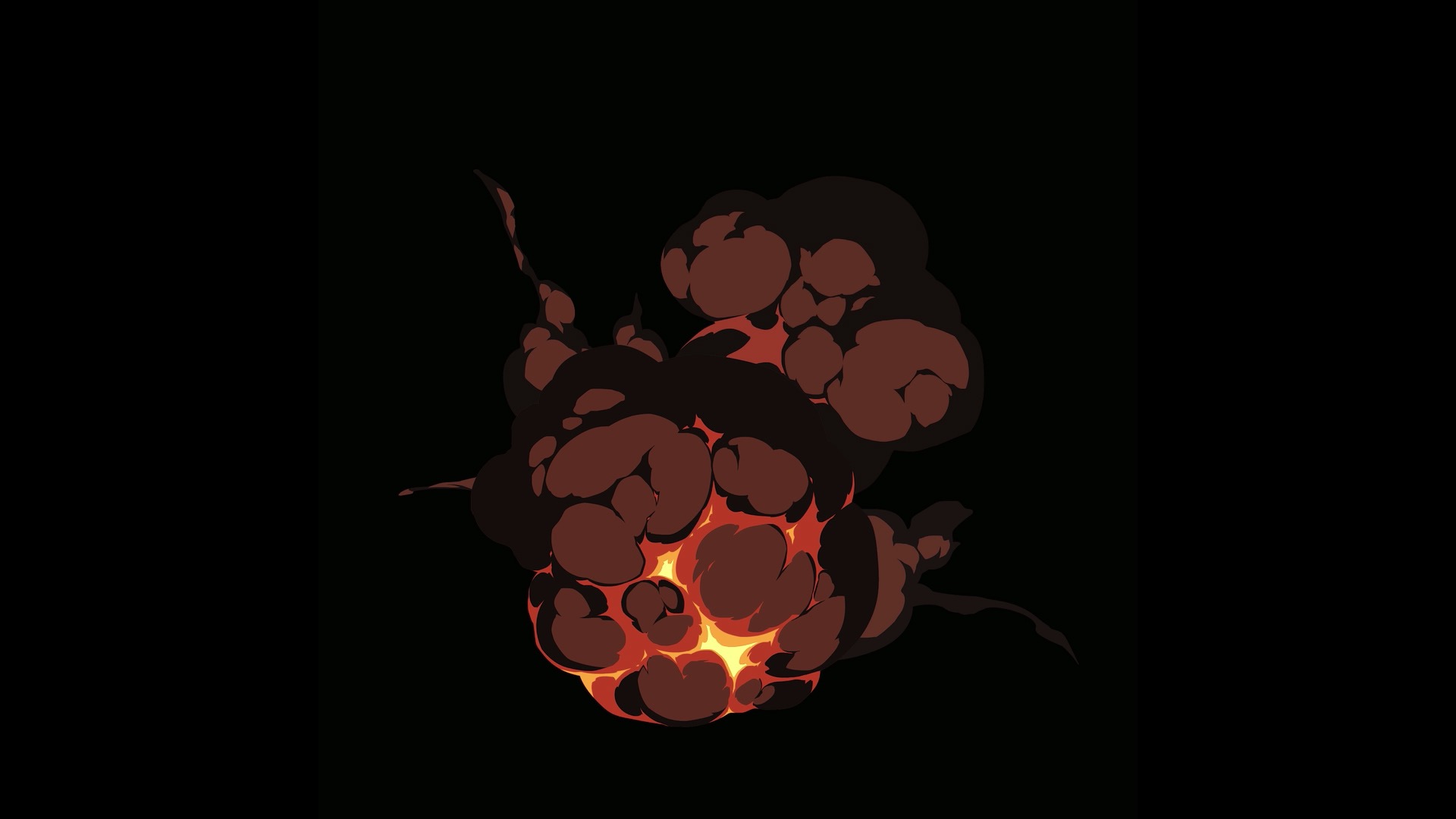

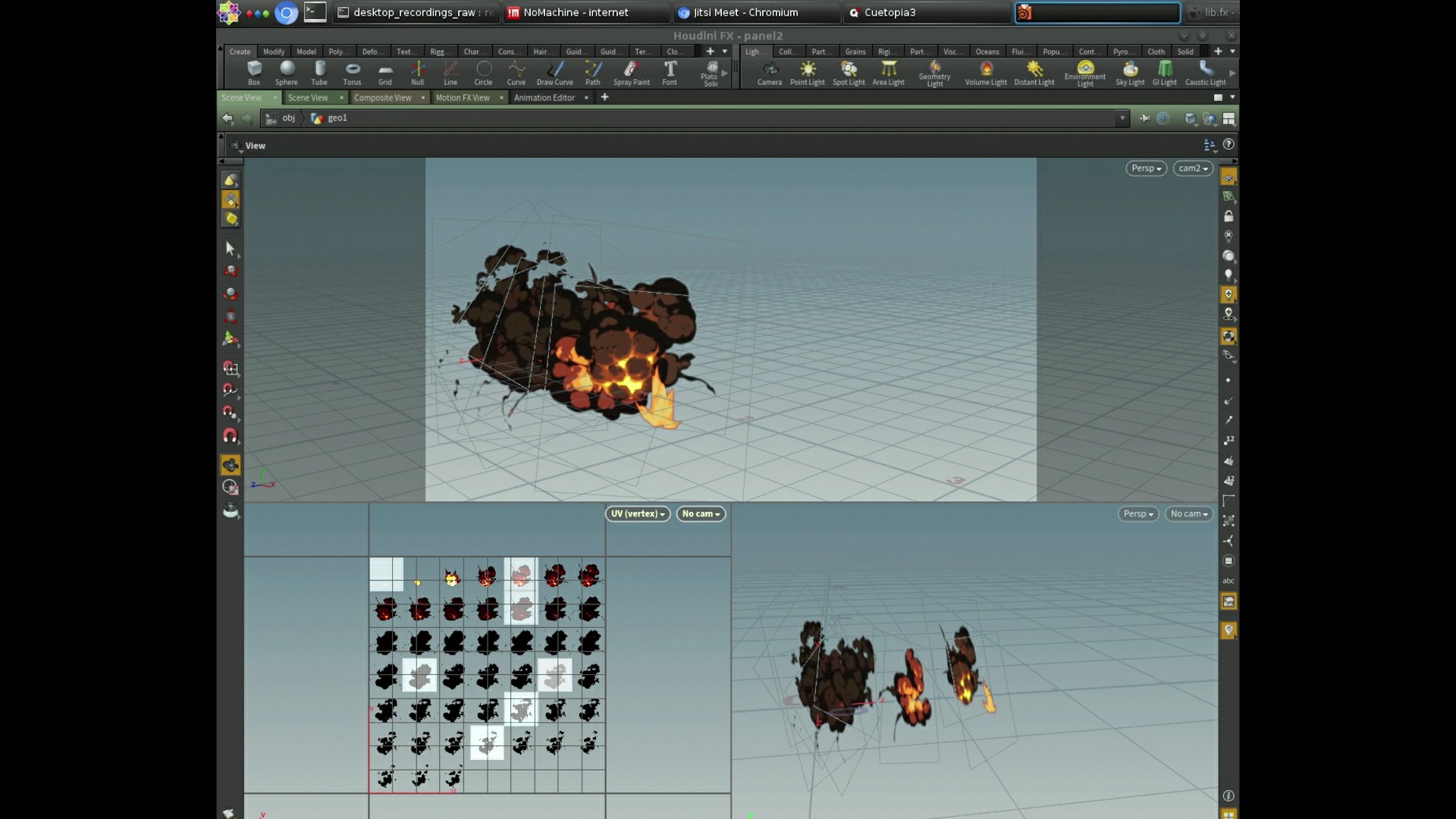

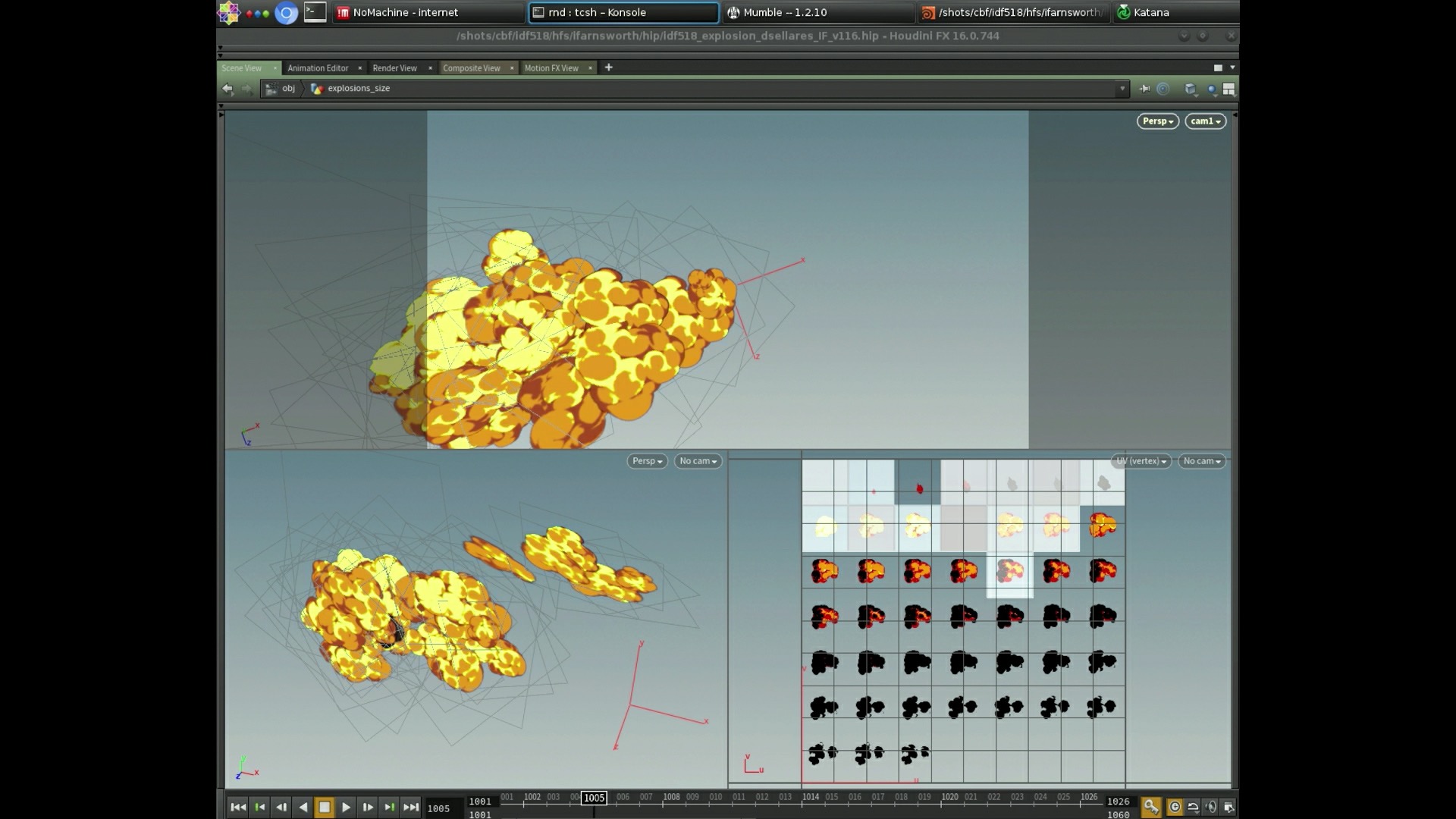

Explosions were a mix of 2D and 3D elements, but always with a comic book aesthetic.

Explosions and particle effects

There are several moments in the film that involve explosions or particle effects, all of which share this mix of a 3D and 2D feel. Inspiration for this effects work came, says Imageworks FX supervisor Ian Farnsworth, from 2D hand-drawn anime. “We had initially tried doing some smoke or explosions using some simple particles and spheres, and then having them erode or evolve in interesting ways using solvers and shaders. While the results looked really cool, they never really hit the mark, and definitely never felt stylised or hand-drawn enough. They always had this element of ‘CG’ to them, and while we feel given enough time we could potentially push it a lot farther, we decided to look for a 2D FX artist to help us out.”

Hand-drawn shapes for an explosion.

That artist was Alex Redfish, whose Vimeo demos exemplified a style that would fit the desired language of the film. He provided Imageworks with a small re-usable library of 2D FX, starting with an explosion. “We had him provide as many layers as he could for us, so we could mix and match as needed, and also place things in depth for the 3D stereo side of things, too,” details Farnsworth. “He was able to provide us with a few explosion variations with multiple layers each, gun muzzle flash shapes, spark hit shapes, smoke, steam and flame elements.”

At Imageworks, an HDA in Houdini was created that would allow artists to select images from the library and then view the animation in real-time in the viewport. The same HDA also output a separate geometry for render. “Being able to view the animations in real-time was really important to us,” says Farnsworth, “so we created sprite sheets for all the animations we were going to use. This is a common technique used in games, where instead of loading a specific frame of animation for every card, you only have to load a single image that has all the frames on it already and you modulate the UVs to switch frames.”
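The UV trick Farnsworth describes can be sketched in a few lines. This is a minimal illustration of the general sprite-sheet technique, not the Imageworks HDA: the function name `sprite_frame_uv`, the row-major layout starting at the top-left of the sheet, and the convention that V increases upwards are all assumptions made for the example.

```python
def sprite_frame_uv(u, v, frame, cols, rows):
    """Map a card's local UV (0..1) into the sub-rectangle of one frame.

    Assumed layout (hypothetical, not from the article): frames are stored
    row-major, left to right, starting at the top-left of the sheet, and
    V increases upwards (V = 0 is the bottom edge of the sheet).
    """
    frame = frame % (cols * rows)  # loop the animation past the last frame
    col = frame % cols
    row = frame // cols
    tile_w = 1.0 / cols
    tile_h = 1.0 / rows
    sheet_u = (col + u) * tile_w
    # Row 0 is the top row, so flip it to count tiles from the bottom.
    sheet_v = (rows - 1 - row + v) * tile_h
    return sheet_u, sheet_v
```

Because only the UVs change per frame, every card can share one texture, which is what keeps viewport playback cheap.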

The effects were composed in screen-space.

The explosions were often animated on 2’s - then shifted to 1’s - to enhance the desired look.

With that setup in place, the FX artists could use simple particle systems or hand-place these elements as needed, and focus on the timing and composition of the elements, without having to worry too much about rendering or the final look, since the render would match the viewport almost exactly. “The render side was nice and simple,” notes Farnsworth, “as we simply stored attributes for the hi-res image path, and simple things like age or alpha attributes so we could control a bit in the shader as well.”

“Using 2D animation as inspiration,” adds Farnsworth, “we also did some fun timing experiments on rigid bodies, outputting the geometry on 2’s, and sometimes switching from 1’s to 2’s or vice versa to get us a unique ‘stepped’ look that matched what the character animation team was doing. We used that in combination with some minor re-timing to really create a nice ‘punch’ and accentuate the action as desired. We also had to create a few custom boolean-based tools for creating stylised patterns and cuts. This is just simply not possible in another program other than Houdini.”
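Animating ‘on 2’s’ means each pose is held for two frames. As a rough sketch of that stepped timing, assuming nothing about the studio's actual setup, the helpers below compute which source frame to show for a given output frame, including a switch between step sizes partway through a shot. The names `held_frame` and `stepped_frame` and their parameters are hypothetical.

```python
def held_frame(frame, step=2, start=1):
    """Return the source frame shown at `frame` when holding every `step` frames.

    With step=2 and start=1, frames 1, 2, 3, 4 map to 1, 1, 3, 3.
    """
    if step < 1:
        raise ValueError("step must be >= 1")
    return start + ((frame - start) // step) * step


def stepped_frame(frame, switch_at, step_before=2, step_after=1, start=1):
    """Hold on `step_before` until `switch_at`, then hold on `step_after`.

    The hold pattern restarts at `switch_at`, so the switch frame is
    always shown as a fresh pose.
    """
    if frame < switch_at:
        return held_frame(frame, step_before, start)
    return held_frame(frame, step_after, switch_at)
```

For example, `[stepped_frame(f, switch_at=5) for f in range(1, 8)]` holds poses on 2’s for the first four frames and then plays every frame.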

For destruction effects, a procedural modelling tool was used to generate stylised shapes, with Bullet and DMM used for dynamic simulations.

The film’s final battle sequence also used Houdini to fill a volume of space with ‘Kirby dots’ - inspired by the Marvel artist Jack Kirby. Says Farnsworth: “These sequences were a big practice in composition and timing more than FX setups. However, Houdini made the actual process of iteration really fast. The setup used allowed the artists to create and place these ‘streams’ of particles, and then tweak and modify the internal motion / bubbling using just a few nodes. Once we had one shot nailed we could easily copy over some of the settings from that shot to other similar shots.”

The ‘Kirby dots’ in the climactic battle sequence.

Glitch effects

The particle accelerator developed by the character Wilson Fisk opens up parallel universes and brings alternate versions of Spider-Man into our universe. But it also causes almost catastrophic glitching on buildings and in several characters.

“The glitching effect splits the screen into cell patterns and assigns an alternative camera to each cell,” explains Imageworks FX lead Viktor Lundqvist. “One cell could be a zoomed in version of the character’s eyes, the next one could be the character’s face seen from a 90 degree angle shift. This created a fractured look of the character.”

“It was crucial to have a high level of control of how the characters were ‘pieced’ together,” continues Lundqvist. “We used Houdini to procedurally create both an array of ‘alternative’ cameras and cell pattern. This was used as an input to a custom HDA that automatically kicked off renders and gave a preview of the resulting look.”
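The core idea, a screen split into cells with each cell looking through a different camera, can be illustrated with a small sketch. The article does not describe the shape of the cells or how cameras were chosen, so the regular grid, the seeded random assignment and the names `assign_cameras` and `camera_at` are all assumptions made for illustration.

```python
import random


def assign_cameras(cols, rows, num_cameras, seed=0):
    """Split the unit screen into a cols x rows grid and pick a camera per cell.

    Assumption: a regular grid and a seeded random choice stand in for
    whatever cell pattern and art-directed assignment the studio used.
    """
    rng = random.Random(seed)  # seeded so the pattern is repeatable
    cells = []
    for row in range(rows):
        for col in range(cols):
            cells.append({
                # (x0, y0, x1, y1) in normalised screen space
                "bounds": (col / cols, row / rows,
                           (col + 1) / cols, (row + 1) / rows),
                "camera": rng.randrange(num_cameras),
            })
    return cells


def camera_at(cells, x, y):
    """Return the camera index covering screen point (x, y), or None if off-screen."""
    for cell in cells:
        x0, y0, x1, y1 = cell["bounds"]
        if x0 <= x < x1 and y0 <= y < y1:
            return cell["camera"]
    return None
```

Changing the seed or the grid resolution gives a different fractured layout, and a compositing step would then take each cell's pixels from the render of its assigned camera.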

Buildings and structures begin to glitch as a parallel universe is opened.

It happens on characters, too, who have themselves come from these parallel universes.

Houdini was used to stage alternative camera angles and generate cell patterns of the glitching.

The glitching buildings were also bathed in colourful patterns.

Inklines

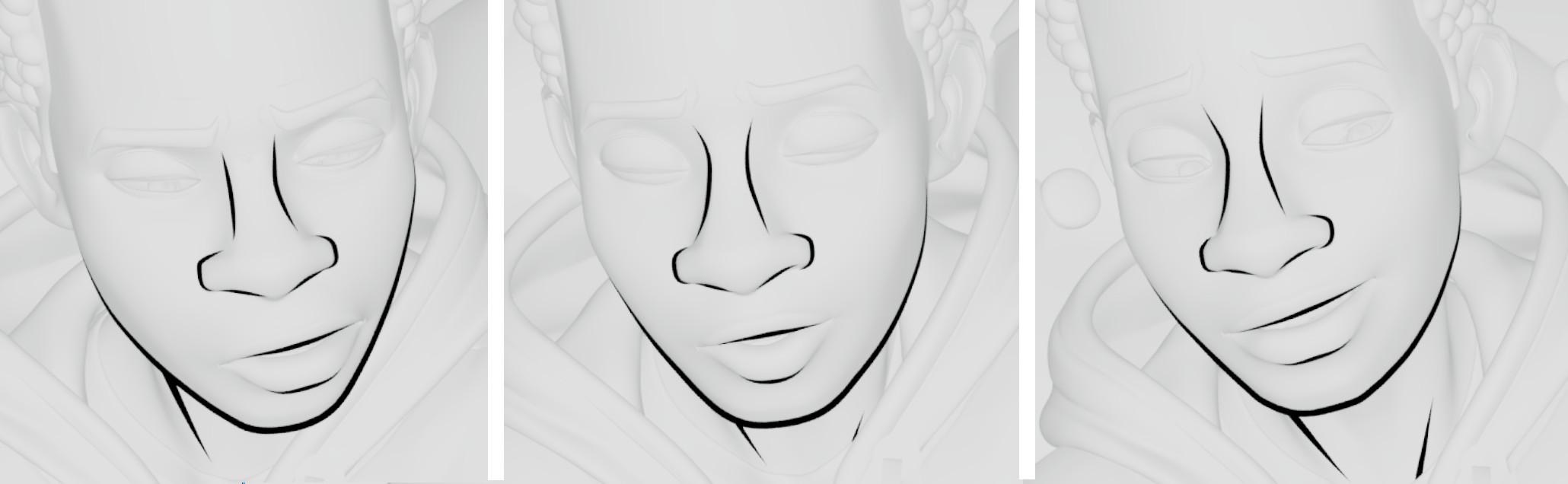

A key ingredient of the comic book look was the line drawings on the characters, also known as ‘inklines.’ Early in the development process, Imageworks tried out a number of different solutions for creating inklines, but soon discovered that any approach that created inklines based on ‘rules’ - for example, toon shaders - didn’t look hand-drawn.

“The fundamental problem,” notes Grochola, “is that artists simply do not draw based on these limited rule-sets. We also realized tracking hand drawings to character animation was not a trivial task. We couldn't just use texture maps because the drawings needed to adjust throughout the animation. We needed a way to take an artist’s drawing, stick it to underlying animation, while also having the control to adjust the drawings throughout the performance.”

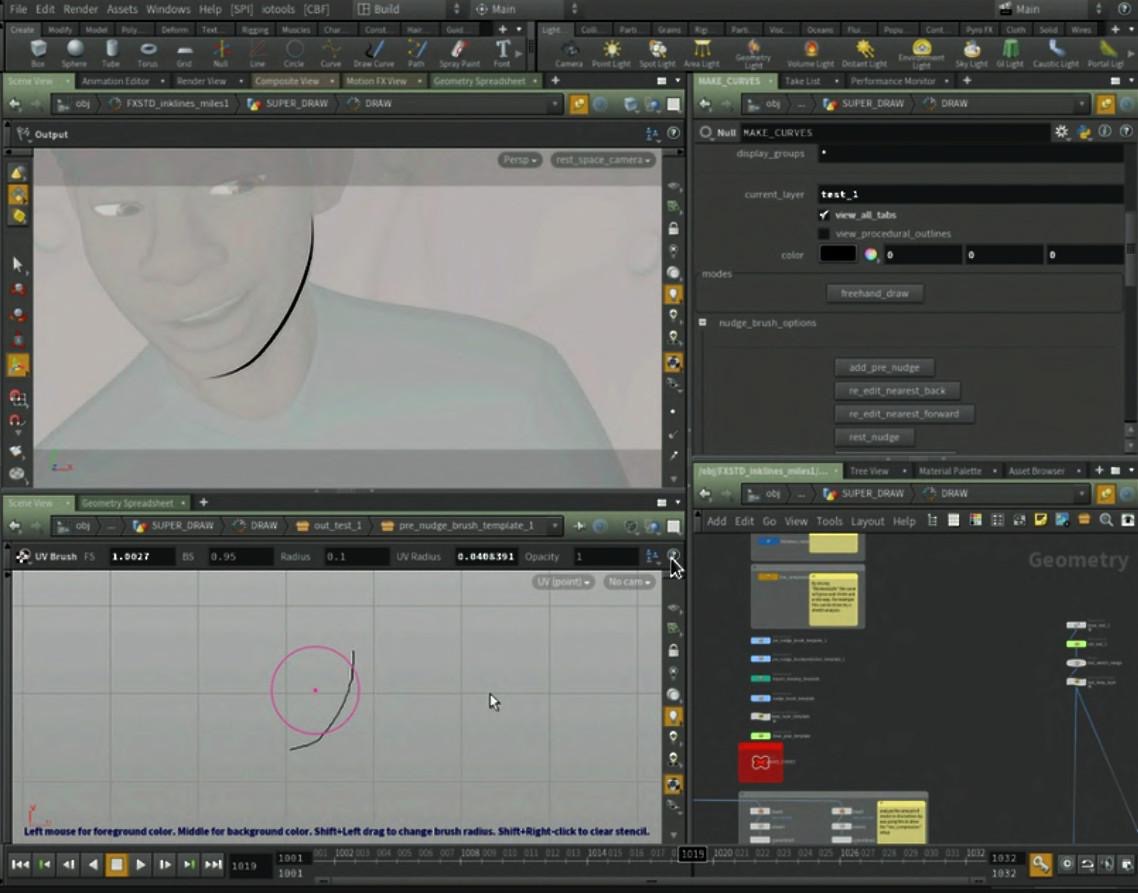

The solution came in the form of the procedural abilities of Houdini, along with its Python integration. Imageworks artists created a custom GUI in Houdini that executed Python scripts to create, destroy and reconnect nodes, change tools and ‘hop’ around the network automatically.

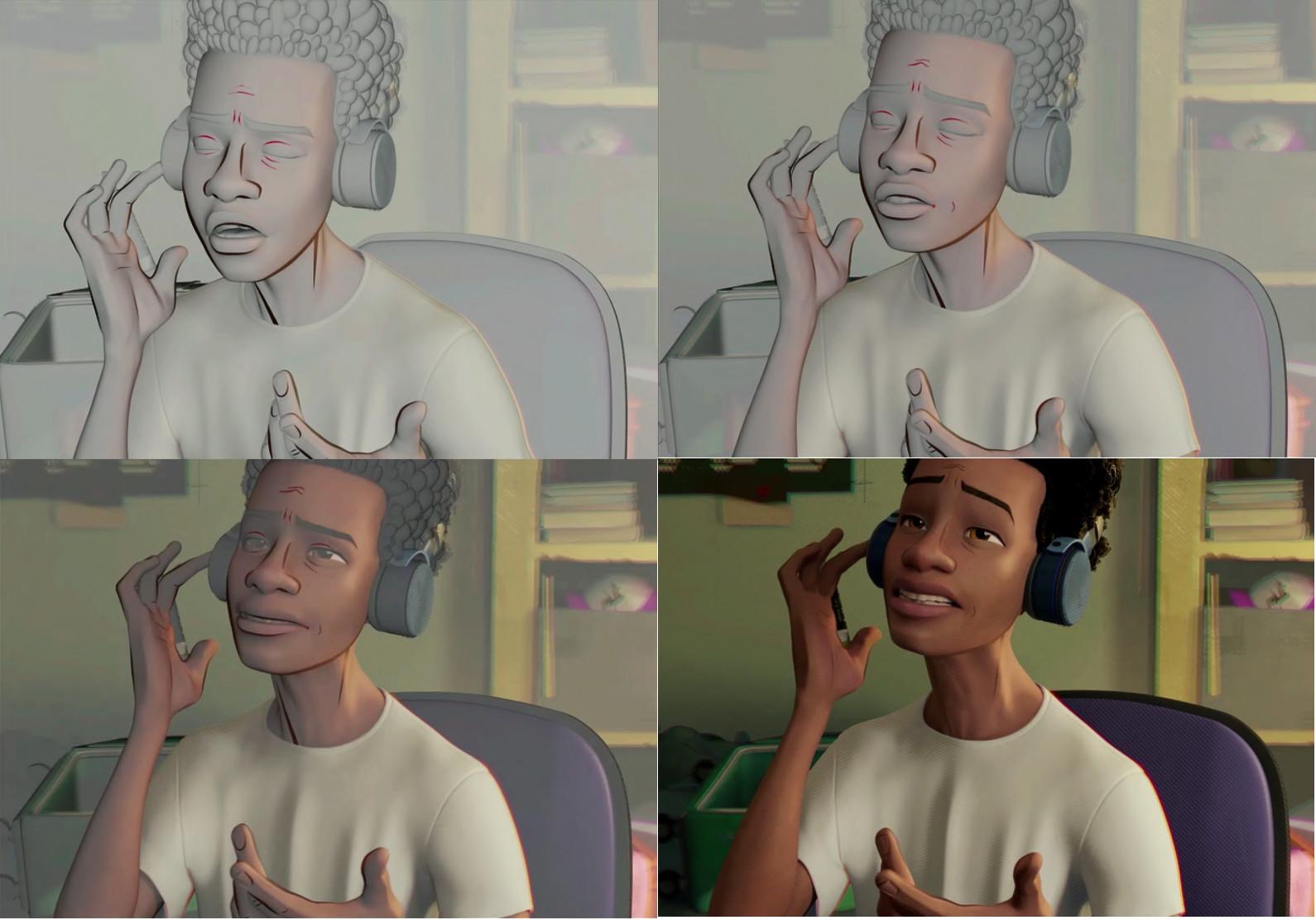

Inklines added to Miles’ CG face.

Example of inklines on Miles’ face. Note how the drawing adjusts to the character’s animation.

“This freed up the artist to focus on the creative task of making great drawings, without worrying about the technical details of how the tool worked,” says Grochola. “The tool acted almost like a standalone application driving Houdini under the hood. Artists required very little traditional Houdini skills to use it.”

“We also used machine learning to ‘learn’ how to draw based on previous examples,” says Grochola. “This sped up our workflow because the artist would start a shot with machine learning prediction and adjust only where needed. Houdini is a perfect fit with machine learning because data manipulation is at the heart of both. Thanks to Houdini’s tight Python interaction, we ran Sklearn directly within Houdini. We trained learning models and called prediction all directly within the Houdini environment.”
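The fit-then-predict workflow Grochola describes can be sketched without any external library. The article does not say which features, targets or scikit-learn model Imageworks used, so everything here is a stand-in: a plain k-nearest-neighbour average written in standard-library Python, a hypothetical pose descriptor as the input feature, and a hypothetical 2D offset for one inkline point as the predicted value.

```python
import math


class NearestExamples:
    """Tiny k-nearest-neighbour regressor with a fit/predict interface.

    A stand-in for a real learning model; it simply averages the targets
    of the k stored examples closest to the query.
    """

    def __init__(self, k=2):
        self.k = k
        self.features = []
        self.targets = []

    def fit(self, features, targets):
        if len(features) != len(targets):
            raise ValueError("features and targets must have the same length")
        self.features = [tuple(f) for f in features]
        self.targets = [tuple(t) for t in targets]
        return self

    def predict(self, feature):
        if not self.features:
            raise ValueError("call fit() with at least one example first")
        # Rank stored examples by Euclidean distance to the query.
        ranked = sorted(range(len(self.features)),
                        key=lambda i: math.dist(self.features[i], feature))
        nearest = ranked[:self.k]
        dims = len(self.targets[0])
        return tuple(sum(self.targets[i][d] for i in nearest) / len(nearest)
                     for d in range(dims))
```

In use, previous artist-approved drawings would supply the training pairs, and the prediction for a new pose becomes the starting point that the artist then adjusts only where needed.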

Finally, inklines were exported as polygon lines from Houdini to Katana. Artists then picked lines to allow lighting to adjust the line thickness at render time.

Example of artist nudging drawings around in Houdini using the inkline tool.

Through the Multi-verse, webs, leaves, tentacles and more

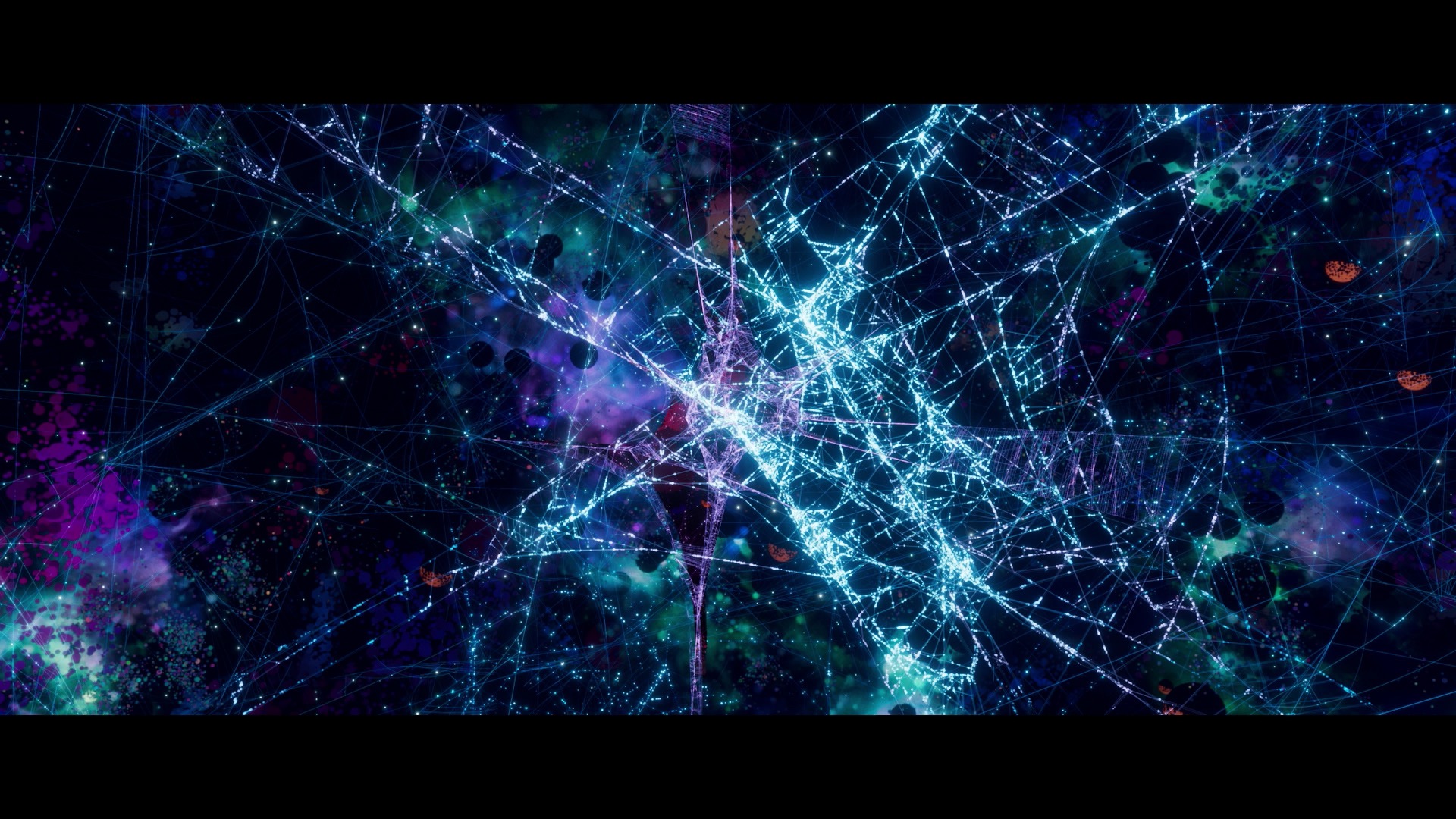

When the Spider people travel to our universe, they go through the ‘Multi-verse’. After exploring a number of ideas for how this should look, Imageworks landed on a highly detailed environment consisting of procedurally generated web-like structures, mixed with many points and volumetrics - crafted in Houdini.

Going through the Multi-verse.

Of course, Spider-Man would be nothing without his signature webs. Houdini was relied upon again here, especially for its procedural nature, as Farnsworth explains. “Using one setup as the base, we were able to easily create unique web variations for some of the different Spider-Man characters as well as the webs in the Spider-Man hideout and the webs wrapped around Miles during one sequence. Miles’ and Peter’s web was very thin/fine and had a few extra spiral webs around the main core, with a graphic almost 2D looking ‘eiffel’. We built on that and modified it for Gwen’s web. Her web was much more ribbon-like and graceful looking just by tweaking a few parameters. The hideout and ‘wrapped up’ webs were packed with more small webs along with ‘knots’ randomly added into the structure.”

To get the webs into shots, the animators used a ‘curve’ rig that would then go through CFX. That department had a web rig for simulating secondary motion and also added that ‘eiffel’ end-piece. “Having this in their court was ideal,” says Farnsworth, “because of web-cloth interaction. When CFX was done, the webs would go through the procedural setup in Houdini and, depending on the variant they wanted, it would add spiral webs around the main core, and completely replace the eiffel with a more stylised and customizable one.”

Webs were crafted in Houdini based on a single original setup.

For a forest scene, Houdini was used by Imageworks to help create leaves. Like the rest of the film, the trees in the forest appeared in stylised form. “Having somebody hand place thousands of leaves just to hit the look wouldn’t be very efficient,” suggests Farnsworth. “The artwork we got had leaves floating on their own as if an artist just used a loose brush stroke to add them. We ended up turning to Houdini to scatter, place, and colour all the leaves for the trees. Houdini allowed us to iterate fast and go through many variations with some minor parameter tweaks, and really allowed us this painterly abstract leaf placement that would not have been easy to get otherwise.”

Houdini allowed artists to scatter, place and colour leaves.

Manga-inspired streaks suggested movement for the characters.

Houdini was also used on the tentacles of the character Doctor Octopus, in particular to generate extra wires and geometry inside them. “The need for them came late in the game, and so instead of going back through the whole modelling, rigging and animation pipeline,” says Farnsworth, “we used Houdini to generate all of the extra geometry procedurally at the shot level, saving us a lot of time. This setup was eventually automated to the point where we could just run it anytime there was an animation publish, and it would create the geo, publish the geo, and submit renders for you.”

Additional uses of Houdini included procedurally generating snow mounds at the bases of tombstones and trees, and on vehicles and buildings. Plus, the software helped generate motion blurred streak effects, this time referencing such effects done in traditional 2D manga animation. “We also used a similar technique on the snow streaks when Miles and Peter are being dragged through the city by the train,” adds Farnsworth. “The stylised look of the snow was done with Houdini, not a result of real motion blur. These effects gave the scene more punch and also helped reinforce the comic-book style of the film.”

COMMENTS

Olaf Finkbeiner 5 years, 10 months ago |

and even the Story of this movie is cool. If you have not seen it, do it now.

It blew me away! Can't wait for the BlueRay to be released...

JohnDraisey 5 years, 10 months ago |

Goddamn crazy, that's what the Imageworks team is. I love this article and the cool tricks they came up with in Houdini and Arnold. =]

DanTrip 5 years, 10 months ago |

One of the best movies of the year not just for the VFX and art direction, but story too. Going to check for BlueRay pre order now!

nicholasralabate 5 years, 9 months ago |

it is a shame this wasn't nominated for best picture, it was like injecting neon-coated optimism into your heart

FrankJ99 5 years, 9 months ago |

In the caption of one of the images it says DMM was used for the dynamics. I wish there was more detail about that!

pgrochola 5 years, 9 months ago |

FrankJ99 - We have proprietary plugins in Houdini that allow us to sim with DMM. We did use DMM in some shots - when Doc Ock throws Peter against the tables in the lab and some shots of the Prowler busting up Aunt May's house. The majority of RBD work was simmed using standard Bullet in Houdini though!
