3rd Party » WIP: FBX HD & SD importer, Joints to Bones Converter and Morph-Helper (was: DAZ to Houdini converter)

- malbrecht

- 806 posts

- Offline

Small update: I have an almost working “FBX-rig-weight-import” set up. I get some random crashes with it, which seem to be related to unpacking the weights “hardcoded” into the bone-capture pipeline that the FBX import sets up; it sometimes doesn't deal correctly with point weights shared across several joints. But … it looks promising as a general “FBX rig import” that basically converts joint-based rigs to bone-based rigs.

Once I have this (separate) HDA working more or less reliably, I'll make it available in one form or another.

Marc

Houdini Indie and Apprentice » Select primitives on the outside of the mesh

I think those internal faces aren't considered “internal” by the boolean tool because a ray cast offset from their normal would count an even number of crossings. To be considered “internal”, an odd count would be required.
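The even/odd rule can be illustrated with a minimal 2D sketch in Python. This is only an assumption about what such a parity test might look like, not a description of what the boolean tool does internally; the function name `is_inside` and the sample square are made up for illustration.

```python
def is_inside(point, polygon):
    """Even-odd test: cast a ray towards +x and count edge crossings."""
    px, py = point
    crossings = 0
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y level can be crossed
        if (y0 > py) != (y1 > py):
            # x coordinate where the edge meets the horizontal ray
            x_hit = x0 + (py - y0) * (x1 - x0) / (y1 - y0)
            if x_hit > px:
                crossings += 1
    # Odd count: inside. Even count: outside.
    return crossings % 2 == 1


square = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
print(is_inside((2.0, 2.0), square))   # ray crosses one edge
print(is_inside((-1.0, 2.0), square))  # ray crosses two edges
```

A sample that sees two crossings is classified as “outside”, which is the situation described for the faces in question.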

You may have to write your own “visual boolean” tool or maybe use a wrapping technique *1 (even if that might smooth out creases).

Marc

—

*1 e.g. the shrinkwrap SOP

Edited by malbrecht - March 19, 2020 11:10:49

Houdini Lounge » arnold or redshift? h18

Mr. Dunlap, I do not appreciate the tone of your comment.

I tried to keep it civilized and merely report my personal experience, which I expressly marked as subjective and emotional. If what I wrote is a “rant” in your eyes, I'd be curious what your comment is supposed to be.

BTW I do not see any “abandonment” of Mantra. You clearly know more than I do, so why don't you share your knowledge?

For your convenience, I am out of the discussion - if anyone wants to know more or discuss things, I am available by direct mail. The way you “communicate” is not my way; I don't want to deal with this religious, over-the-top approach.

Marc Albrecht

Houdini Lounge » arnold or redshift? h18

For clarification: My gripe is about deleting unhappy customers' feedback, not about delays in development (I know about TWO deleted posts by different users, so how many others have been deleted?).

By deleting feedback from a forum a company can shape public impression of how happy customers are with their product - that is something I have never felt comfortable with.

I am almost happy with Redshift's technical features.

Marc Albrecht

Edited by malbrecht - March 15, 2020 11:02:11

Houdini Lounge » arnold or redshift? h18

> dammit. i just bought redshift. lol

I wonder why you asked for opinions.

Marc Albrecht

Houdini Lounge » arnold or redshift? h18

I can - currently - only give a highly emotional, probably unfair recommendation: Don't go with Redshift.

Recently I “upgraded” my license and I am now convinced that this was a nonsensical mistake, although maybe not an overly expensive one. For one, with large scenes (heavy geo, lots of objects), Mantra isn't that much slower for me (yes, it does take longer to get first “usable results”, but a full render of a couple of scenes I did with some experimental data definitely wasn't faster in RS).

My biggest gripe is this: With RS you are quite often nailed to a “production release” version of Houdini. I am experiencing a lot of random crashes with Houdini 18.348, which I do not blame on H (SideFX provides daily builds and fixes issues pretty quickly). Yet RS has been “out of sync” for a few weeks, lagging behind, so I cannot update to the latest production release and I cannot update to a daily build if I want to use Redshift.

I fully realize that SideFX is changing their API rapidly with Houdini 18 and I cannot EXPECT Redshift to follow up on a “daily” basis (or, maybe, even weekly). Yet I did kind of pay for “frequent updates” and I have expressed my slight feeling of being unsettled in the Redshift forum. My comment, along with those of others who had also been polite about it, was simply deleted, censored out, washed away.

Also, I filed a bug report with SideFX about a crash in a Lab tool happening with Redshift. They took care of that quickly, as usual, but pointed out that there needs to be a minor fix on Redshift's side as well. SideFX and Redshift don't seem to talk directly to one another, so I was asked, kindly, to report the issue to Redshift's development team. They don't have a “bug database”; you have to publish a bug report openly in their forum (which I find highly unusual for a professional tool, but that - again - is just me). They were absolutely reluctant to talk to SideFX about the issue, even though I gave them the bug report ID on SideFX's side. So I had to negotiate the “bug fixing” between two companies, both of which get money from me to continuously update their respective tools to work together. I don't like that. To be fair, Redshift fixed their side of the problem extremely quickly as well. Unfortunately, I cannot use their fix, because they still haven't released the new plugin version that holds that bug fix.

At the end of the day, right now it seems like the two companies are holding some kind of grudge against one another and I simply don't want to be the moderator of that, so next time an update to a license for one of the two tools is imminent, the company that provides constant, frequent updates gets my money. The other does not.

Again: This is a personal, subjective, probably unfair but still honest response. I would never have posted in this form if Redshift hadn't deleted my expression of unease on their forum. They lost my trust.

Marc Albrecht

Houdini Lounge » Group Weight ?

I don't use Blender, but I think you are talking about the same thing here :-)

A “group” is a group in Houdini. “Weights” are, really, just attributes (as they are called in Houdini and, really, everywhere else). So if you “create an attribute” (using AttributeCreate), you define what group to apply it to; then you can follow it up with a “paint attribute” node, with which you would paint the attribute's values, and call those “weights”.

Marc

Technical Discussion » Confused about how to prepare UV's for Substance Painter etc

Well, it is of course “allowed” to have any value for UV-coordinates. I do remember that many years ago - before UDIM - wrapping of textures sometimes was “baked” into non-normalized UV coordinates.

What I meant above is that today, UVs should be “standardized”, i.e. normalized. If I pay money for data, I expect the data to be “nicely, friendly, usably”.

The SF-thing you sent looks like the UV coordinates are scaled and “recentered” (they might have come from some projection pipeline, from the looks of it), so correcting those is pretty straightforward.

I would definitely contact the vendor and ask for a) information on the website about UVs being non-standard and b) if possible a “cleaned” version, again, based on the fact that you paid money for it.

That is, as usual, just a narrow-minded German-grumpy perspective. Your kilometerage may vary.

Marc

Technical Discussion » Confused about how to prepare UV's for Substance Painter etc

Definitely not a Houdini-problem:

… I wonder if that is a “standard” on those sites you are downloading from. I mean … this isn't exactly something I would pay money for.

Marc

Edited by malbrecht - March 11, 2020 17:22:48

Technical Discussion » Confused about how to prepare UV's for Substance Painter etc

Sounds strange, honestly … are you importing OBJ or another format? OBJ would allow you to simply open the file in a text editor and have a quick peek at the ACTUAL UV data, so you could check if it's a problem in Houdini or with the files.
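Instead of eyeballing the file, the `vt` records of an OBJ can also be scanned with a few lines of Python. The following is a minimal sketch, assuming a plain-text OBJ; the function name `uv_bounds_from_obj` and the sample data are made up for illustration.

```python
def uv_bounds_from_obj(lines):
    """Return ((u_min, v_min), (u_max, v_max)) from the 'vt' records of an OBJ."""
    us, vs = [], []
    for line in lines:
        parts = line.split()
        # Texture coordinates are stored as: vt u v [w]
        if len(parts) >= 3 and parts[0] == "vt":
            us.append(float(parts[1]))
            vs.append(float(parts[2]))
    if not us:
        return None  # no two-component UV data found
    return (min(us), min(vs)), (max(us), max(vs))


sample = """
v 0 0 0
vt 0.25 0.5
vt 3.5 -1.0
vt 1.0 2.0
f 1/1 1/2 1/3
""".splitlines()

print(uv_bounds_from_obj(sample))
```

For a real file, the open file object can be passed in directly, since iterating over it yields lines. If the reported bounds are already far outside 0 to 1, the problem is in the file rather than in Houdini.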

Do you have an example link where one could download such a “wonky file” without having to register first?

Marc

Houdini Indie and Apprentice » On the topic of dynamic trees (Vellum)

Do you have the scale-by-distance trick set up that is being talked about in the video linked above? From your screenshots it doesn't look like it is (or you need to adjust values).

Marc

Technical Discussion » Confused about how to prepare UV's for Substance Painter etc

Kays, absolutely! I am not saying that the above method is “the best”, “the only” or even “the suggested one”. I would incorporate whatever else is necessary to deal with the mess at hand into that code anyway - thus probably saving several Houdini-nodes.

I have a baker's dozen of reasons for not using Houdini nodes, one of which is that I use other software for production purposes and Houdini is merely a test-bed for me, so I simply don't know all the nodes by heart and looking up what might be useful takes me MUCH longer than writing down a few lines of code.

Another simple reason is that by writing code that is SIMPLE and does ONE simple job that you can immediately quality-check, one can learn a lot. It doesn't matter if there are “out-of-the-box” solutions available if a dog wants to learn a new trick.

Most of the other reasons have to do with Houdini specifically and this may not be the place to go down there :-D

Marc

Technical Discussion » Confused about how to prepare UV's for Substance Painter etc

Hi, Kays,

here's a simplified and exaggeratedly complicated (sorry, I ate a clown) example. I split the workflow up into several steps:

- Create a circle (obvious)

- create tris

- add UV

- randomize UV scale all across the place

- attribute promote to a single detail with minimum and maximum used UV (this could be put into a wrangle as well, but it might be faster this way)

- last wrangle moves the UV coordinate to 0 if minimum is not 0, calculates the “scale” (the maximum range per axis) and normalizes the coordinate. This could (and should) be simplified, could be done in one or two lines, but hopefully this “long winded” version is helpful in stepping beyond modifying “P” :-D

Have a look at each node in the UV layout.

By adding an offset (1, 2, 3 …) to the x and y components of the UV (index 0 and 1) you can “UDIM-ize” the UV coordinates. I am not sure if Houdini supports 3-dimensional UV coordinates (it uses a 3-component vector); I'd just ignore the third channel.
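Outside of Houdini, the normalize-then-offset idea can be sketched in plain Python. This is not the wrangle code from the example scene, just an illustration under the assumption that the UVs are available as a list of (u, v) pairs; `normalize_uvs` and `offset_uvs` are hypothetical names.

```python
def normalize_uvs(uvs):
    """Move the UV bounding box to the origin and scale it to the 0-1 range."""
    u_min = min(u for u, v in uvs)
    v_min = min(v for u, v in uvs)
    u_range = max(u for u, v in uvs) - u_min
    v_range = max(v for u, v in uvs) - v_min
    # Guard against a degenerate (zero-sized) range on either axis
    u_scale = 1.0 / u_range if u_range > 0 else 1.0
    v_scale = 1.0 / v_range if v_range > 0 else 1.0
    return [((u - u_min) * u_scale, (v - v_min) * v_scale) for u, v in uvs]


def offset_uvs(uvs, tile_u, tile_v):
    """Shift normalized UVs by a whole-number offset per axis."""
    return [(u + tile_u, v + tile_v) for u, v in uvs]


messy = [(2.0, -4.0), (6.0, 0.0), (4.0, -2.0)]
clean = normalize_uvs(messy)
print(clean)
print(offset_uvs(clean, 1, 2))
```

The scale is computed per axis, as in the description above, so the aspect ratio of the layout is not preserved. Using the larger of the two ranges for both axes would be the alternative if proportions matter.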

Marc

Technical Discussion » Confused about how to prepare UV's for Substance Painter etc

Hi, Kays,

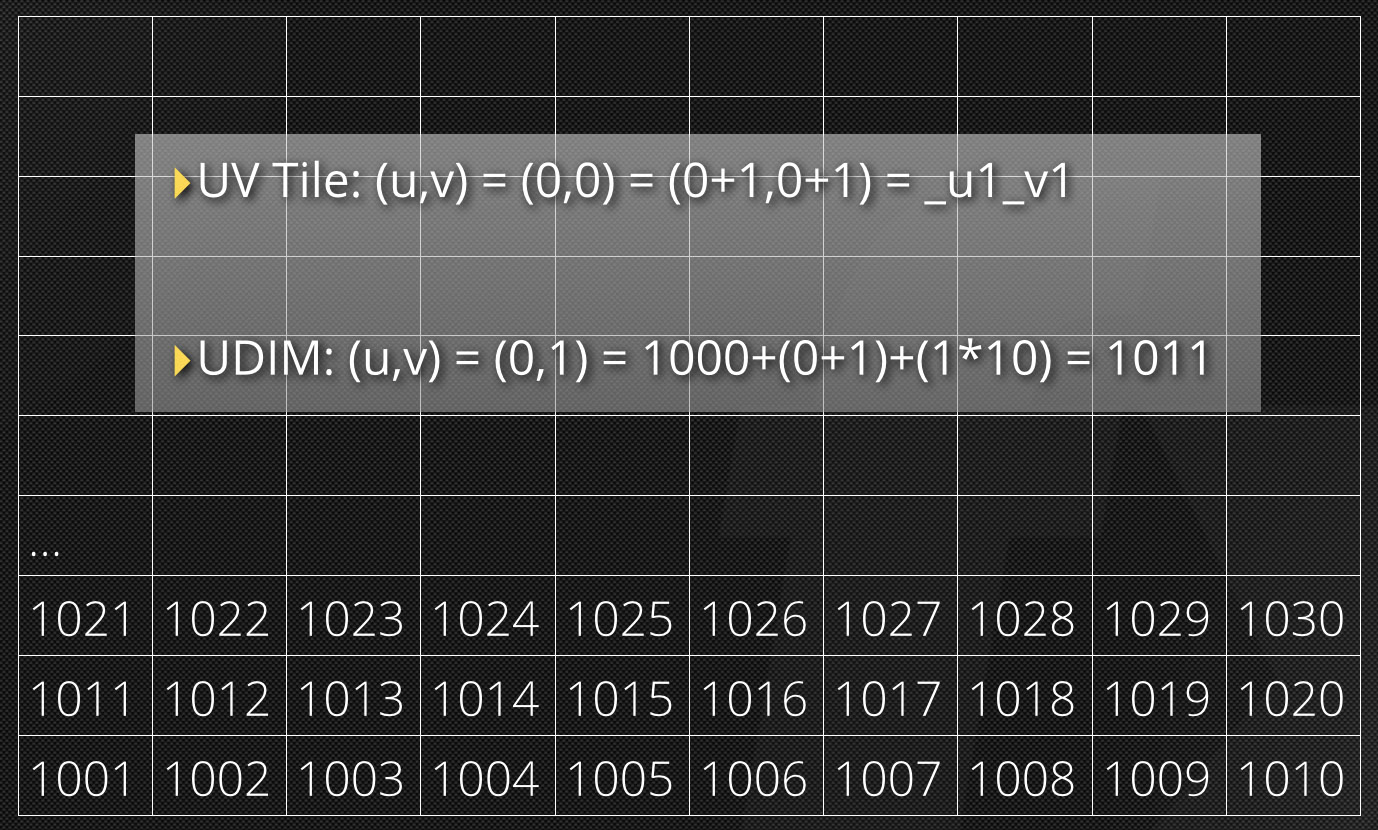

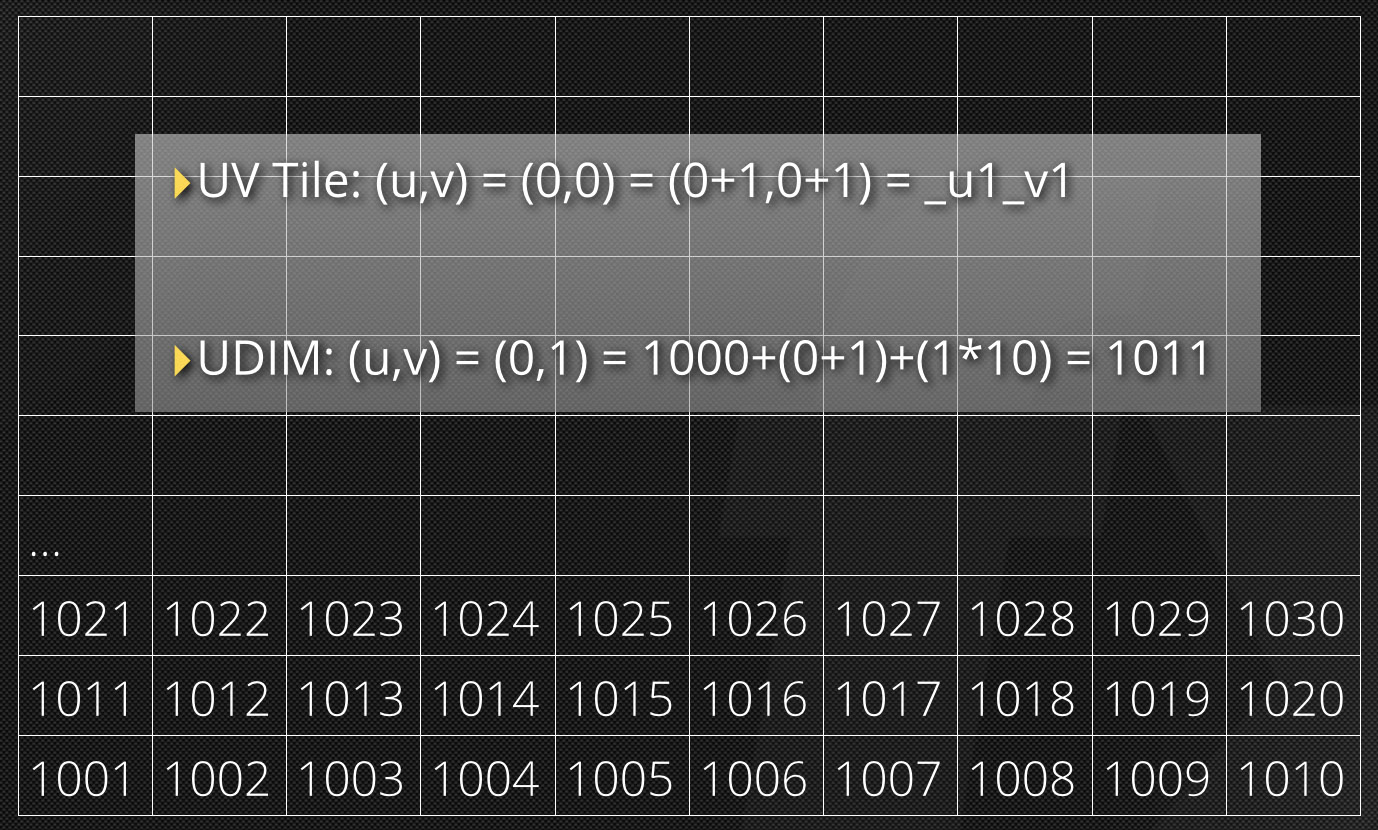

> How do you add UDIM offsets?

UV space is 0-1. A UDIM layout ranges from 0 to 9 for x (in the UV vector's x component) and 0 to 99 in y.

Houdini has too many nodes for me, so I just write a VEX wrapper that runs over the UV values and corrects them. It's quite possible that I am reinventing the wheel every time I use Houdini, but my guts say that's still faster than looking up the “best node”.

What you could do with above scenario is run a VEX over all UV data and store min/max values into a detail attribute. Then run a second VEX that takes that min/max and scales all UV values accordingly. That would bring all UV values into 0-1. Then you'd look up which material or shop_path or group each component you are running over belongs to and offset x/y accordingly.

For example:

You have 10 materials and your VEX already took care of all UV values being within 0-1.

You'd then look up each component's (point's, vertex') “membership” for group or material (depending on what your classification for grouping is) from a list/an array. You'd add the array's index to the x-component of the UV coordinate, which, with 10 materials, would fit nicely (going from 0-9 for the integer part of the x then).

If you have got more than 10 materials, you'd take the index modulo 10 for the x-component and add the integer part of index / 10 to the y-component - et voila, UDIMs.
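The index-to-tile mapping can be sketched in a few lines of Python. The material names and helper functions below are hypothetical; the tile number formula follows the usual UDIM naming convention (1001 for the first tile, ten tiles per row).

```python
def udim_tile(material_index, columns=10):
    """Map a material's list index to a (u, v) tile offset."""
    return material_index % columns, material_index // columns


def udim_number(tile_u, tile_v):
    """Conventional UDIM tile number for a (u, v) tile offset."""
    return 1001 + tile_u + 10 * tile_v


materials = ["skin", "eyes", "teeth"]  # hypothetical material names
prim_material = "teeth"
prim_uvs = [(0.1, 0.2), (0.9, 0.8)]    # assumed to be normalized to 0-1 already

tile_u, tile_v = udim_tile(materials.index(prim_material))
shifted = [(u + tile_u, v + tile_v) for u, v in prim_uvs]
print(shifted, udim_number(tile_u, tile_v))
```

With more than ten materials the same function wraps into the next row, e.g. index 13 lands in column 3 of row 1.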

I hope this helps. If you could prep a simple test-file, I'd quick'n dirty hack a wrangle-soup into it :-)

Marc

Edited by malbrecht - March 9, 2020 13:57:41

Technical Discussion » Confused about how to prepare UV's for Substance Painter etc

Hi,

I have only just revisited current documentation for Substance Painter (it used to be too slow and too limited in UDIM workflows in the past), but from my understanding, there are two issues:

- Substance Painter, even though it can now deal with UDIMs to a very limited extent, cannot use patches ACROSS UDIMs (which shouldn't be done anyway). So the layouts you showed above won't work anyway.

- UV space is 0 to 1. If one tile you are importing is going beyond that - UDIM offset aside - either the import has gone wrong or the model is broken.
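Whether a layout has patches reaching across UDIM tiles can be checked with a small test. The following Python sketch assumes each face is given as a list of (u, v) pairs; the function names are made up for illustration.

```python
import math


def tile_of(uv):
    """Integer tile (column, row) that a UV coordinate falls into."""
    u, v = uv
    return math.floor(u), math.floor(v)


def faces_crossing_tiles(faces):
    """Return indices of faces whose UVs are spread over more than one tile."""
    crossing = []
    for index, face_uvs in enumerate(faces):
        # Note: a coordinate exactly on a tile border counts as the next tile,
        # so a practical version would need a small tolerance
        tiles = {tile_of(uv) for uv in face_uvs}
        if len(tiles) > 1:
            crossing.append(index)
    return crossing


faces = [
    [(0.1, 0.1), (0.9, 0.1), (0.5, 0.9)],  # stays inside tile (0, 0)
    [(0.8, 0.5), (1.3, 0.5), (1.1, 0.9)],  # reaches from tile (0, 0) into (1, 0)
    [(2.2, 1.1), (2.8, 1.1), (2.5, 1.9)],  # stays inside tile (2, 1)
]
print(faces_crossing_tiles(faces))
```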

I deal a lot with more or less broken UV/UDIM data. The first thing I usually do is make sure that within a material group (primitive attribute, usually) UV space is within 0 to 1 (maybe with a UDIM offset). Since most of the time this leads to materials with the same shop path overlapping, I separate those by simply adding UDIM offsets “per material”. That “splits” materials across several UDIM patches, cleaning things up neatly.

Marc

Edit/P.S. “Dealing with” means: Simply gather the min/max boundary of a UV area and scale all positions accordingly.

Edited by malbrecht - March 9, 2020 10:45:57

3rd Party » WIP: FBX HD & SD importer, Joints to Bones Converter and Morph-Helper (was: DAZ to Houdini converter)

Hi, “Robot”(?),

thanks for the links!

(My experience with the DAZ community was … mixed, put mildly. Not my crowd, I fear. Too much of an “it may not cost anything, else it isn't worth my time” mentality. I prefer the Houdini “crowd”, even if it has been heading in the same direction lately :-) )

> I don't know if you're aware, but if you run into the limitations of the FBX exporter in DAZ Studio, you could skip FBX and read the native DAZ files and import them and the rigging information into Houdini directly.

I did have a look at that and was considering it in the beginning. My hope was that the HDA could serve as a “more or less general purpose” rig importer (it does, to some extent).

But, yes, issues like shoulders etc. are probably better served using original rigging information. Not that the DAZ rig would be extraordinarily good in that respect; in the end an artist ends up putting in manual work anyway … that was what pushed me into “redoing it” in the first place.

For the moment I am concentrating on non-DAZ-specific features, mainly because the whole “blendshape/morph/rig” mix - although it does have its benefits (see above) - feels way too chaotic for my taste. I am thinking about applying a “face rig” that can be driven (and frozen) from imported morphs (blendshapes), so that you could use a “real rig” instead of morphs for facial expressions. But that is a LOT of work … I don't need that myself, really. Just one of a couple of ideas what one COULD do …

Thanks again for the response!

Marc

3rd Party » WIP: FBX HD & SD importer, Joints to Bones Converter and Morph-Helper (was: DAZ to Houdini converter)

Hi, Floran,

thanks for the feedback - very much appreciated!

Unfortunately, you do not get the hires mesh with the FBX workflow, but you do get the morphs exported in there (“blendshapes”), which is a huge benefit, for there are tons of ready-to-use expressions.

So what you need to do is convert the lo-res morphs to hires morphs (UV based Attrib Transfer on P using Vertex UV). You also need to take care of material conversion and some overlapping areas. If you can invest the time, as you mention, creating an individual rig definitely beats any automatism. However, if you need to convert a lot of characters in a short time, a script comes in handy.
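The idea behind a UV-based transfer can be sketched in plain Python: each hi-res vertex looks up the lo-res vertex closest to it in UV space and takes over its morph offset. This is a strongly simplified assumption (nearest neighbour only, brute force), not what an attribute transfer node does in detail; all names and values are made up for illustration.

```python
def transfer_by_uv(lo_uvs, lo_deltas, hi_uvs):
    """For each hi-res UV, copy the delta of the closest lo-res UV."""
    result = []
    for hu, hv in hi_uvs:
        # Brute-force nearest neighbour search in UV space
        nearest = min(
            range(len(lo_uvs)),
            key=lambda i: (lo_uvs[i][0] - hu) ** 2 + (lo_uvs[i][1] - hv) ** 2,
        )
        result.append(lo_deltas[nearest])
    return result


lo_uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# Hypothetical morph offsets on P, one per lo-res vertex
lo_deltas = [(0.0, 0.1, 0.0), (0.0, 0.0, 0.0), (0.2, 0.0, 0.0)]
hi_uvs = [(0.1, 0.1), (0.9, 0.1), (0.1, 0.8)]
print(transfer_by_uv(lo_uvs, lo_deltas, hi_uvs))
```

A usable version would blend between several neighbours instead of copying a single value, otherwise the hi-res morph shows visible steps.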

The biggest disadvantage of the automatism is that the biharmonic capture doesn't deal very well with shoulders, elbows, neck, feet. You either have to hand-tweak those areas or (which is what I am thinking about right now) convert the original DAZ weights. I wanted to get around that because I had lots of Houdini crashes when trying to work with FBX-embedded point data.

Marc

Technical Discussion » Unresolved external symbol while building command.c

ah … looks reasonable! So it *was* a missing linked library after all :-)

Thanks for publishing your solution, that will help others running into the same wall!

Marc

Houdini Indie and Apprentice » further animate Alembic Files in Houdini?

Moin,

you need to give more information: Are the cubes exported (and imported) as individual objects or as one huge chunk? Do they have any ID on them so that you could split the geometry into separate objects?

For a simulation to work the way you hint at, each cube has to be separate geometry. If you treat the whole geometry you imported from the ABC as one chunk, your simulation cannot do anything else but follow your lead. Either you split the content up in Houdini - or you export as individual objects to begin with.
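If the import arrives as one chunk without IDs, the pieces can still be recovered from connectivity: faces that share points belong to the same cube. The following Python sketch shows that grouping with a small union-find, assuming faces are given as lists of point numbers; the function name is hypothetical.

```python
def split_by_connectivity(faces):
    """Group face indices into pieces; faces sharing a point end up together."""
    parent = {}

    def find(p):
        parent.setdefault(p, p)
        while parent[p] != p:
            parent[p] = parent[parent[p]]  # path halving
            p = parent[p]
        return p

    # Union all points that belong to the same face
    for face in faces:
        root = find(face[0])
        for point in face[1:]:
            parent[find(point)] = root

    pieces = {}
    for index, face in enumerate(faces):
        pieces.setdefault(find(face[0]), []).append(index)
    return list(pieces.values())


faces = [
    [0, 1, 2, 3],      # first cube, one side
    [2, 3, 4, 5],      # first cube, neighbouring side (shares points 2 and 3)
    [10, 11, 12, 13],  # a face of a second, unconnected cube
]
print(split_by_connectivity(faces))
```

This only works if the cubes do not share points. If the exporter fused touching cubes, an ID written at export time is the more reliable route.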

Maybe you could link a test file with reduced geometry (two cubes?) …

Marc

(ex-modo-user)

—

Disclaimer: If I should come across as rude, it's because 99 times out of 100 1-time-posters (and 2-time-posters, too) never respond and I mourn the loss of life-time for every attempt to help.

Technical Discussion » Unresolved external symbol while building command.c

Hi,

the one thing popping out immediately is:

> The kit Desktop Qt 5.14.1 MSVC2017 64bit has configuration issues which might be the root cause for this problem

Obviously, if you create the missing object (a CMD_Manager) yourself, the missing object from a *.lib won't matter, but you don't know if the object is getting filled (e.g. from an overloaded instance). So if your compiled version with the “hack” doesn't work, you might need to check what else gets linked in by default when you use hcustom. The docs say that “-e” lists the current configuration:

> -e Echo the compiler and linker commands run by this script.

(this is for hcustom), so maybe give that a try and check if you are missing a static .lib that would normally be linked?

But, again, my suspicion would be that something about your Qt config is different to what hcustom uses …

Apologies for not being able to pinpoint it, consider my response “moral support” :-)

Marc
