Deecue's file prompted me to dive into Houdini's global illumination again.

In my humble opinion, there are some limitations in the GI implementation here that put the quality of renders behind the output of other GI-capable renderers such as Brazil or V-Ray (in terms of realism, at least). I'll try to go into more detail.

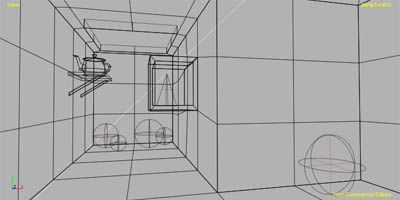

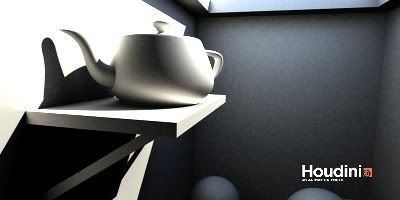

Take this scenario: a room with a window or skylight. The only sources of illumination are sunlight and/or hemispheric light (ambient occlusion) from outside. Here is such a scene modeled in Houdini:

The expectation here is that, in real life, if the sun is shining directly into the room, there ought to be enough light bouncing around to illuminate the entire space. I think most would agree that this is an accurate assumption.

Now, any GI implementation worth its salt should be able to solve this, if you ask me. There will be varying degrees of “accuracy” depending on the renderer, as they all do things a bit differently (the most physically correct being Maxwell, I suppose), but generally they should at least give you a solution that approaches the behavior of real light.

There are two ways of doing GI in HDN at the moment: Irradiance and Photon Mapping.

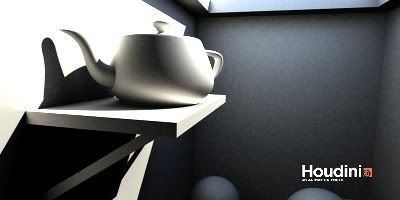

I first tried Full Irradiance with Background Color (direct light + occlusion). The sunlight hits the wall on the left and bounces the light once.

Now right off the bat, we've hit a problem. After our light has bounced once, the Irradiance method has exhausted itself. There is no more light to go around. No amount of adjusting Global Tint or light intensity will make that sphere in the bottom right of the frame visible. Notice that the shadow cast by the shelf is not illuminated by any bounced light.
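To put a rough number on what a single bounce leaves out, here is a toy "integrating sphere" model. It is a minimal sketch under loud assumptions: a closed room with one uniform average albedo ρ, where each bounce re-scatters the fraction ρ of the light that reached the walls on the previous bounce. The indirect light after N bounces is then E0(ρ + ρ² + ... + ρ^N), which converges to E0·ρ/(1 - ρ). This is not how Houdini, Brazil, or any real renderer computes GI, and the albedo value is hypothetical.

```python
def bounced_fraction(albedo: float, bounces: int) -> float:
    """Indirect light after `bounces` bounces, as a multiple of the direct light E0.

    Toy model (assumption): a closed room with a single uniform average albedo,
    where every bounce re-scatters `albedo` of the previous bounce's energy.
    """
    return sum(albedo**k for k in range(1, bounces + 1))


def converged_fraction(albedo: float) -> float:
    """Limit of the geometric series above as the number of bounces goes to infinity."""
    if not 0.0 <= albedo < 1.0:
        raise ValueError("albedo must be in [0, 1)")
    return albedo / (1.0 - albedo)


if __name__ == "__main__":
    rho = 0.7  # hypothetical average albedo of light-colored walls
    for n in (1, 2, 3, 7):
        share = bounced_fraction(rho, n) / converged_fraction(rho)
        print(f"{n} bounce(s): {bounced_fraction(rho, n):.3f} x E0 ({share:.0%} of converged)")
    print(f"converged:   {converged_fraction(rho):.3f} x E0")
```

With ρ = 0.7, one bounce captures only 30% of the converged indirect light (0.7 out of about 2.33 times E0), while seven bounces reach roughly 92%.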

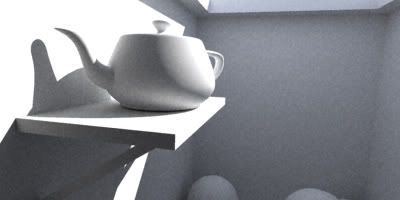

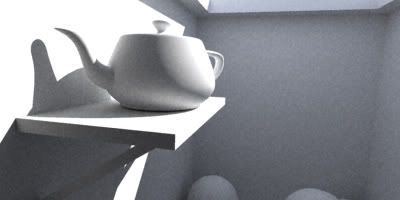

Here is the same scene in 3ds Max, using Brazil. It's set to use 7 bounces.

Say what you want about the artistic merit of the two renders, but there is no doubt that the Brazil version shows more realistic light transport. I wonder why Full Irradiance is limited to one bounce. It seems to me that it's doing the same thing as the quasi-Monte Carlo algorithms in many other GI renderers, but then again, I don't know the first thing about the nitty-gritty details of GI rendering.
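For what it's worth, the "quasi" in quasi-Monte Carlo just means the random sample directions are replaced by a low-discrepancy sequence, which covers the hemisphere more evenly. As a hedged illustration (not how any of the renderers mentioned here actually implement it, and with hypothetical function names), the sketch below uses a 2-D Halton sequence to estimate the integral of cos θ over the hemisphere above a point, the same kind of integral a GI gather estimates. The exact answer is π.

```python
import math


def radical_inverse(index: int, base: int) -> float:
    """Van der Corput radical inverse: mirror the base-`base` digits of `index` around the radix point."""
    result, scale = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * scale
        scale /= base
    return result


def uniform_hemisphere(u: float, v: float) -> tuple[float, float, float]:
    """Map (u, v) in [0, 1)^2 to a direction on the +z hemisphere, uniform in solid angle."""
    z = u  # for uniform hemisphere sampling, cos(theta) is uniformly distributed
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * v
    return (r * math.cos(phi), r * math.sin(phi), z)


def estimate_cosine_integral(num_samples: int) -> float:
    """QMC estimate of the integral of cos(theta) over the hemisphere (exact: pi)."""
    pdf = 1.0 / (2.0 * math.pi)  # uniform hemisphere pdf per steradian
    total = 0.0
    for i in range(1, num_samples + 1):
        # Halton point i uses bases 2 and 3 for its two dimensions
        _, _, cos_theta = uniform_hemisphere(radical_inverse(i, 2), radical_inverse(i, 3))
        total += cos_theta / pdf
    return total / num_samples


if __name__ == "__main__":
    for n in (16, 256, 4096):
        print(n, estimate_cosine_integral(n), "vs", math.pi)
```

A multi-bounce gather would evaluate the same kind of estimate again at every point a sample ray hits, up to some depth limit, which is where a bounce count like Brazil's comes in.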

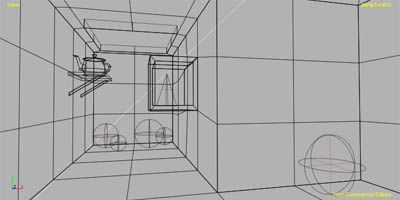

Same scene, different angle:

“But Juice, what about Photon Mapping?”

Ok, where to start…

One problem that pbowmar already pointed out: there is no way to “aim” the photons. We're trying to simulate sunlight here, which means our light is not actually inside our space. As a result, only a fraction of the total number of photons seem to make it into the interior. I think the only thing you can do is bring the light really close to the point of entry. The problem is that now your solution looks completely different depending on how far or close you've placed your light. How would this integrate into scenes where you need to be able to see the interior as well as the outside? I'm not saying it's not workable, but it does create more problems. Not exactly robust or predictable.
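To show how quickly the photon budget drains away with distance, here is a minimal sketch assuming an isotropic point light facing a rectangular opening head-on (the dimensions and function names are hypothetical, and Houdini's actual photon emission may differ). The fraction of photons that make it through is the opening's solid angle divided by 4π. The Monte Carlo function double-checks that by brute force.

```python
import math
import random


def window_solid_angle(width: float, height: float, distance: float) -> float:
    """Solid angle (sr) of a width x height rectangle seen from a point on its
    centre axis, `distance` away from the rectangle's plane."""
    return 4.0 * math.atan(
        (width * height)
        / (2.0 * distance * math.sqrt(4.0 * distance**2 + width**2 + height**2))
    )


def analytic_fraction(width: float, height: float, distance: float) -> float:
    """Fraction of photons from an isotropic point light that pass through the opening."""
    return window_solid_angle(width, height, distance) / (4.0 * math.pi)


def simulated_fraction(width: float, height: float, distance: float,
                       num_photons: int, seed: int = 0) -> float:
    """Brute-force check: emit photons in uniformly random directions and count
    those that cross the opening, which lies in the plane z = distance."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_photons):
        z = 1.0 - 2.0 * rng.random()  # uniform direction on the unit sphere
        phi = 2.0 * math.pi * rng.random()
        if z <= 0.0:
            continue  # heading away from the opening's plane
        r = math.sqrt(max(0.0, 1.0 - z * z))
        t = distance / z  # ray parameter where the photon reaches z = distance
        if abs(r * math.cos(phi) * t) <= width / 2 and abs(r * math.sin(phi) * t) <= height / 2:
            hits += 1
    return hits / num_photons


if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0, 5.0, 10.0):
        print(f"light {d:>4} units away: {analytic_fraction(1.0, 1.0, d):.4%} of photons get in")
```

For a 1 x 1 opening, about 6.4% of the photons get through with the light 1 unit away, but fewer than 0.1% at 10 units. Aiming, in the sense of emitting only into the opening's solid angle and scaling each photon's power by that same fraction, would keep the full photon budget without changing the total energy that enters.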

Also, in my test renders last night, changing the number of photons seemed to significantly change the look of the solution, and I don't just mean that fewer photons looked crude while more photons looked refined.
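For reference, in textbook photon mapping (Jensen-style density estimation) the photon count should mainly trade noise and blur, not overall brightness, because each photon's power is the light's flux divided by the number of photons emitted. The sketch below illustrates that expectation, assuming a flat unit square evenly lit by a hypothetical 1 W of flux and a fixed gather radius. It is not a model of Houdini's photon shaders.

```python
import math
import random


def photon_density_estimate(num_photons: int, radius: float,
                            total_flux: float = 1.0, seed: int = 0) -> float:
    """Fixed-radius photon-map irradiance estimate at the centre of a unit square.

    Assumed setup (hypothetical): `total_flux` watts land uniformly on a
    1 x 1 square, so the true irradiance everywhere is total_flux W/m^2.
    Each photon carries total_flux / num_photons, which is what keeps the
    expected brightness independent of the photon count.
    """
    rng = random.Random(seed)
    photon_power = total_flux / num_photons
    cx, cy = 0.5, 0.5  # query point; the gather disc stays inside the square for radius <= 0.5
    gathered = 0.0
    for _ in range(num_photons):
        x, y = rng.random(), rng.random()  # where this photon landed
        if (x - cx) ** 2 + (y - cy) ** 2 <= radius * radius:
            gathered += photon_power
    return gathered / (math.pi * radius * radius)  # flux per unit area of the gather disc


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        print(n, round(photon_density_estimate(n, radius=0.1), 3))
```

Only the noise should shrink as the count goes up. If a renderer instead gathers a fixed number of nearest photons, the gather radius itself shrinks as the photon count grows, so the amount of blur (and with it the character of the solution) can change too; whether that explains the behavior seen here is only a guess.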

The controls for the photon shader are pretty confounding too; I didn't find them very intuitive. All in all, I found it very difficult to get predictable or good-looking results with this scene using photon maps, and none of my renders looked remotely “correct”. Something else: using Irradiance and Photon Mapping combined was much slower than using either one separately.

I've uploaded the file in case anyone wants a shot at it.

http://www.pixelheretic.com/misc/GI_test.zip

Finally, I'm sorry if my post sounds a bit harsh. I'm just putting it out there that this is an area where Houdini can be improved.

I've actually only used photon mapping for caustics as well, but I do realize the difference (whether or not it's visible in this particular case). As mentioned earlier, it allows for multiple bounces as opposed to a single one, which can be useful at times. Also, each of your objects can have a specifically designed photon shader attached to it, each with its own level of control (in the case of the VEX photon plastic shader: diffuse, specular, and transmission, as well as probabilities for each). This gives you more control over how each object receives and casts photons. Will you always need this level of control? Obviously not, since, as you found out, the looks can be quite similar, but it's still nice to have if you find it more accurate, realistic, etc. Also, check out irradiance caching (explained in Peter's video tutorial); it will help shorten your render times as well.
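Per-object probabilities like these are typically used as Russian roulette in a photon tracer: at each hit, one random number decides whether the photon scatters diffusely, scatters specularly, is transmitted, or is absorbed, which is how photon mapping gets multiple bounces. A minimal sketch, assuming the three probabilities simply partition the unit interval (the function and parameter names are hypothetical, not the actual parameters of the VEX photon plastic shader):

```python
import random


def choose_event(u: float, p_diffuse: float, p_specular: float, p_transmit: float) -> str:
    """Decide a photon's fate at a hit from one uniform random number u in [0, 1).

    The three probabilities partition [0, 1); whatever is left over
    (1 - their sum) means the photon is absorbed and its path ends.
    """
    if u < p_diffuse:
        return "diffuse"
    if u < p_diffuse + p_specular:
        return "specular"
    if u < p_diffuse + p_specular + p_transmit:
        return "transmit"
    return "absorb"


def mean_bounces(p_diffuse: float, p_specular: float, p_transmit: float,
                 num_photons: int = 100_000, seed: int = 0) -> float:
    """Average number of scattering events per photon before absorption."""
    if p_diffuse + p_specular + p_transmit >= 1.0:
        raise ValueError("some probability must be left for absorption")
    rng = random.Random(seed)
    total = 0
    for _ in range(num_photons):
        # keep scattering until the roulette picks absorption
        while choose_event(rng.random(), p_diffuse, p_specular, p_transmit) != "absorb":
            total += 1
    return total / num_photons


if __name__ == "__main__":
    q = 0.5 + 0.1 + 0.1  # hypothetical diffuse + specular + transmission probabilities
    print(mean_bounces(0.5, 0.1, 0.1), "vs expected", q / (1 - q))
```

With a total scatter probability q, the number of bounces is geometrically distributed with mean q/(1 - q), so 0.5 + 0.1 + 0.1 = 0.7 gives about 2.33 bounces per photon on average.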
