
| Since | 17.5 |

Overview ¶

This scheduler node utilizes Thinkbox’s Deadline to schedule and execute PDG work items on a Deadline farm.

To use this scheduler, you need to have the Deadline client installed and working on your local machine. You must also have Deadline set up on your farm machines to receive and execute jobs.

Note

The Deadline Scheduler TOP supports Deadline 10 and greater.

It is recommended that Deadline 10.1.17.4 or greater is used. Version 10.1.17.4 released a fix for a regression introduced in Deadline 10.1.15.X that broke job submissions from PDG.

Scheduling ¶

This node can do two types of scheduling using a Deadline farm:

-

The first type of scheduling runs an instance of Houdini on a local submission machine that coordinates the PDG cook on the farm and waits until the cook has completed. This allows you to cook the entire TOP graph, only part of the TOP graph, or a specific node.

With this scheduling type, the node schedules a job containing many tasks, each task scheduled for a generated work item, and if necessary, schedules a second Message Queue (MQ) job to run the MQ server that receives the work item results over the network. For more information on MQ, see the Message Queue section below.

If the Schedule Work Items as Jobs parameter is checked on, then the node schedules jobs for the generated work items, rather than tasks, with each job containing exactly one task. The node also schedules an additional Message Queue (MQ) job if necessary. Please see the Schedule Work Items as Jobs documentation to learn about when you may want to schedule work items as jobs instead of as tasks.

-

The second type of scheduling uses Submit as Job.

With this scheduling type, the node schedules a hython session that opens the current .hip file and cooks the entire TOP network. If the TOP network uses Deadline scheduler nodes, then additional jobs might also be scheduled on the farm, and those jobs follow the scheduling behavior described above.

PDGDeadline plugin ¶

By default, this Deadline scheduler requires and uses the custom PDGDeadline plugin (located in $HFS/houdini/pdg/plugins/PDGDeadline) that ships with Houdini. You do not have to set up the plugin to enable it; it should work out of the box. The rest of this documentation page assumes that you are using the PDGDeadline plugin.

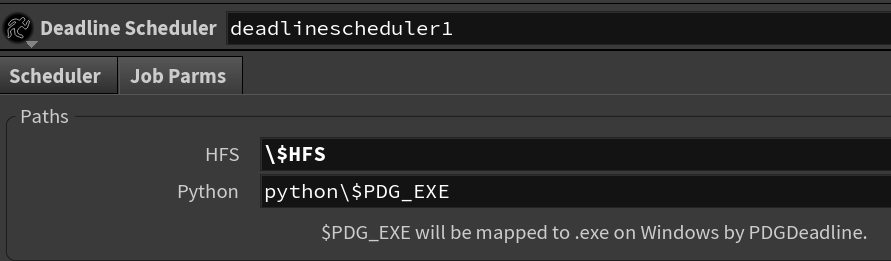

On Windows, Deadline processes require executables to have the .exe suffix. To meet this requirement, you can append \$PDG_EXE to executables.

The PDGDeadline plugin evaluates the \$PDG_EXE specified in work item executables as follows:

Windows

$PDG_EXE is replaced by .exe.

For example, a hython path that starts with \$HFS and ends with \$PDG_EXE is evaluated on Windows as:

\$HFS/bin/hython\$PDG_EXE → C:/Program Files/Side Effects Software/Houdini 17.5.173/bin/hython.exe

Mac

$PDG_EXE is removed.

Linux

$PDG_EXE is removed.
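The per-platform rule above can be sketched in a few lines of Python. This is only an illustration of the substitution as described on this page, not the PDGDeadline plugin's actual code; the function name, the `platform` labels, and the example paths are all hypothetical.

```python
def expand_pdg_exe(command: str, platform: str) -> str:
    """Apply the $PDG_EXE rule described above to a command string.

    `platform` is a hypothetical label ("windows", "mac", or "linux");
    the real plugin detects the Worker's OS itself.
    """
    # Windows gets the .exe suffix; macOS and Linux drop the token.
    suffix = ".exe" if platform == "windows" else ""
    return command.replace("$PDG_EXE", suffix)


# Hypothetical install paths, used only for illustration.
print(expand_pdg_exe("C:/Houdini/bin/hython$PDG_EXE", "windows"))
print(expand_pdg_exe("/opt/hfs/bin/hython$PDG_EXE", "linux"))
```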

If you set the PATH environment variable in a work item, then the path separators in the task’s specification file are replaced with __PDG_PATHSEP__ to support cross-platform farms. When that task runs on the farm, the PDGDeadline plugin converts __PDG_PATHSEP__ to the local OS path separator. Note that if the work item’s PATH is set, then it overrides the local machine’s PATH environment variable.
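The separator round trip can be pictured with the following sketch. It assumes a plain string replacement on each side, which may differ from what the plugin really does; the helper names and the example directories are hypothetical.

```python
PATHSEP_TOKEN = "__PDG_PATHSEP__"


def encode_path(value: str, local_sep: str) -> str:
    """Submitter side: swap the local separator for the neutral token."""
    return value.replace(local_sep, PATHSEP_TOKEN)


def decode_path(value: str, worker_sep: str) -> str:
    """Worker side: swap the neutral token for the Worker's separator."""
    return value.replace(PATHSEP_TOKEN, worker_sep)


# A PATH written on Linux (":" separator), decoded on a Windows Worker (";").
encoded = encode_path("/opt/tools/bin:/opt/hfs/bin", ":")
print(encoded)
print(decode_path(encoded, ";"))
```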

Installation ¶

-

Install the Deadline client on the machine from which you will cook the TOP network. Refer to Thinkbox’s instructions for how to install Deadline on each platform.

-

Make sure of the following:

-

The

deadlinecommandexecutable is working. See the$DEADLINE_PATHnote below. -

The Deadline repository is accessible on the machine where the TOPs network will cook (either the repository is local, or the network mount/share it’s on is available locally).

-

If you are using a mixed farm set-up (for example, a farm with any combination of Linux, macOS, and Windows machines), then set the following path mapping for each OS:

-

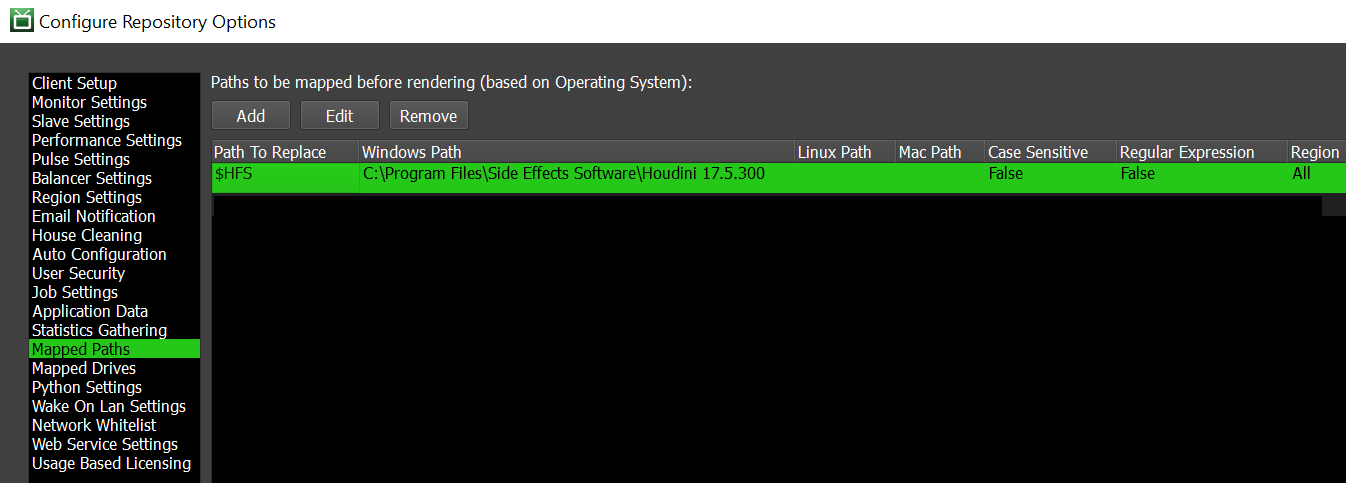

Navigate to Deadline’s

Configure Repository Options>Mapped Paths. -

On Windows:

-

Set up path mapping for

$HFSto the Houdini install directory on Deadline Worker machines. -

Set up path mapping for

$PYTHONto<expanded HFS path>/bin/python.exeor your local Python installation.

-

-

On macOS and Linux:

-

Set up path mapping for

$HFSto the Houdini install director, or override the default parm values for Hython and Python in the Job Parms interface.

Use

\in front of$HFSto escape Houdini’s local evaluation. Using\$HFSmakes sure that the node evaluates the variable on the farm machine running the job.

-

Repeat for any other variable that needs to be evaluated on the farm machine.

-

-

-

-

Set the

$DEADLINE_PATHvariable to point to the Deadline installation directory.-

If

DEADLINE_PATHis not set:-

You can add the Deadline installation directory to the system path.

-

On macOS, the node falls back to checking the standard Deadline install directory.

-

-
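Before cooking, it can be useful to confirm that deadlinecommand is reachable in the way the checklist above describes. The following is a minimal sketch of such a check, assuming the lookup order "DEADLINE_PATH first, then the system path"; the function name is hypothetical, and the scheduler's own lookup (including the macOS fallback) may differ.

```python
import os
import shutil


def find_deadlinecommand(env=None):
    """Return a path to deadlinecommand, or None if it cannot be found.

    `env` defaults to the current process environment; passing a dict
    makes the lookup easy to try out with different settings.
    """
    env = os.environ if env is None else env
    exe = "deadlinecommand.exe" if os.name == "nt" else "deadlinecommand"

    # 1. Prefer the directory named by DEADLINE_PATH, if it is set.
    deadline_dir = env.get("DEADLINE_PATH")
    if deadline_dir:
        candidate = os.path.join(deadline_dir, exe)
        if os.path.isfile(candidate):
            return candidate

    # 2. Otherwise fall back to searching the system path.
    return shutil.which("deadlinecommand", path=env.get("PATH"))


print(find_deadlinecommand())
```

If this prints `None`, neither DEADLINE_PATH nor the system path leads to the executable on this machine.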

TOP Attributes ¶

| | string | When the scheduler submits a work item to Deadline, it adds this attribute to the work item in order to track the Deadline job and task IDs. |

Parameters ¶

Scheduler ¶

Global parameters for all work items.

Verbose Logging

When on, information is printed out to the console that could be useful for debugging problems during cooking.

Block on Failed Work Items

When on, if there are any failed work items on the scheduler, then the cook is blocked from completing and the PDG graph cook is prevented from ending. This allows you to manually retry your failed work items. You can cancel the scheduler’s cook when it is blocked by failed work items by pressing the ESC key, clicking the Cancels the current cook button in the TOP tasks bar, or by using the cancel API method.

Limit Jobs

When enabled, sets the maximum number of jobs that can be submitted by the scheduler at the same time.

For farm schedulers like Tractor or HQueue, this parameter can be used to limit the total number of jobs submitted to the render farm itself. Setting this parameter can help limit the load on the render farm, especially when the PDG graph has a large number of small tasks.

Ignore Command Exit Code

When on, Deadline ignores the exit codes of tasks so that they always succeed, even if the tasks return non-zero exit codes (like error codes).

Force Reload Plugin

When on, Deadline reloads the plugin between frames of a job. This can help you deal with memory leaks or applications that do not unload all job aspects properly.

By default, Force Reload Plugin is off.

Task File Timeout

The maximum number of milliseconds that Deadline will wait for the task file to appear. If the timeout is reached, then the task will fail.

Monitor Machine Name

Specifies the name of the machine to launch the Deadline Monitor on when jobs are scheduled.

Schedule Work Items as Jobs

The Deadline Scheduler schedules work items as tasks organized under a single job by default. This scheduling mode requires Deadline to append tasks to an active job, which can trigger a known race condition in Deadline when there is high activity on the farm. The race condition can cause Deadline to drop tasks submitted by the Deadline Scheduler TOP node.

To work around issues with dropped tasks, you can check on the Schedule Work Items as Jobs parameter. This causes the Deadline Scheduler to schedule work items as jobs organized under a single job batch, with each job containing exactly one task.

Note that a few Deadline Scheduler parameters no longer apply when scheduling work items as jobs:

-

The Task Submit Batch Max and Tasks Check Batch Max parameters do not apply. Use the Job Submit Batch Max and Jobs Check Batch Max parameters instead.

-

The Pre Job Script and Post Job Script parameters do not apply. Use the Pre Batch Script and Post Batch Script parameters instead.

-

The Concurrent Tasks and Limit Concurrent Tasks to CPUs parameters do not apply and do not have suitable replacements.

Job Submit Batch Max

When Schedule Work Items as Jobs is checked on, this parameter sets the maximum number of jobs that can be submitted at a time to Deadline. Increase this value to submit more jobs, or decrease this value if the number of jobs is affecting UI performance in Houdini.

Jobs Check Batch Max

When Schedule Work Items as Jobs is checked on, this parameter sets the maximum number of jobs that can be checked in at a time in Deadline. Increase this value to check more jobs, or decrease this value if the number of jobs is affecting UI performance in Houdini.

Task Submit Batch Max

When Schedule Work Items as Jobs is checked off, this parameter sets the maximum number of tasks that can be submitted at a time to Deadline. Increase this value to submit more tasks, or decrease this value if the number of tasks is affecting UI performance in Houdini.

Tasks Check Batch Max

When Schedule Work Items as Jobs is checked off, this parameter sets the maximum number of tasks that can be checked in at a time in Deadline. Increase this value to check more tasks, or decrease this value if the number of tasks is affecting UI performance in Houdini.
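The four Batch Max parameters above all cap how many items are handled in one pass. As a rough illustration of that idea (not the scheduler's implementation), the sketch below splits a list of pending items into chunks no larger than a given maximum; `batched` and the item names are hypothetical.

```python
from itertools import islice


def batched(items, batch_max):
    """Yield lists of at most `batch_max` items, preserving order."""
    if batch_max < 1:
        raise ValueError("batch_max must be at least 1")
    iterator = iter(items)
    while True:
        chunk = list(islice(iterator, batch_max))
        if not chunk:
            return
        yield chunk


# Hypothetical pending work items, submitted at most two at a time.
pending = ["item_0", "item_1", "item_2", "item_3", "item_4"]
for chunk in batched(pending, batch_max=2):
    print("submit", chunk)
```

A smaller maximum means more passes with less work per pass, which is the trade-off the parameter descriptions refer to when they mention UI performance.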

Repository Path

When on, overrides the system default Deadline repository with the one you specify.

If you have a single Deadline repository or you want to use your system’s default Deadline Repository, you should leave this field empty. Otherwise, you can specify another Deadline repository to use, along with SSL credentials if required.

Connection Type

The type of connection to the repository.

Direct

Lets you specify the path to the mounted directory. For example: //testserver.com/DeadlineRepository.

Proxy

Lets you specify the URL to the repository along with the port and login information.

Plugin

When on, allows you to specify a custom Deadline plugin to execute each PDG work item. When off, the PDGDeadline plugin that ships with Houdini is used.

Do not turn on this parameter unless you have written a custom Deadline plugin that supports the PDG cooking process. The other plugins shipped with Deadline will not work out-of-the-box.

If you want to control the execution of the PDG work items' processes, then you can write a custom Deadline plugin and specify it here along with the Plugin Directory below. The custom plugin must utilize the task files written out for each work item, and set the evaluated environment variables in the process. For reference, please see the PDGDeadline.py.

Plugin Directory

Specifies the path to the custom Deadline plugin listed in the Plugin parameter field.

This parameter is only available when Plugin is turned on.

Copy Plugin to Working Directory

When on, copies the Deadline plugin files from the local Houdini installation or specified custom path to the PDG working directory so that farm machines can access them.

Do not turn on this parameter if you are using an override path and the plugin is already available on the farm.

Local Shared Path

Specifies the root path on your local machine that points to the directory where the job generates intermediate files and output. The intermediate files are placed in a subdirectory.

Use this parameter if your local and farm machines have the same path configuration.

Load Item Data From

Determines how jobs processed by this scheduler should load work item attributes and data.

Temporary JSON File

The scheduler writes out a .json file for each work item to the PDG temporary file directory. This option is selected by default.

RPC Message

The scheduler’s running work items request attributes and data over RPC. If the scheduler is a farm scheduler, then the job scripts running on the farm will also request item data from the submitter when creating their out-of-process work item objects.

This parameter option removes the need to write data files to disk and is useful when your local and remote machines do not share a file system.

Delete Temp Dir

Determines when PDG should automatically delete the temporary file directory associated with the scheduler.

Never

PDG never automatically deletes the temp file directory.

When Scheduler is Deleted

PDG automatically deletes the temp file directory when the scheduler is deleted or when Houdini is closed.

When Cook Completes

PDG automatically deletes the temp file directory each time a cook completes.

Compress Work Item Data

When on, PDG compresses the work item .json files when writing them to disk.

This parameter is only available when Load Item Data From is set to Temporary JSON File.

Validate Outputs When Recooking

When on, PDG validates the output files of the scheduler’s cooked work items when the graph is recooked to see if the files still exist on disk. Work items that are missing output files are then automatically dirtied and cooked again. If any work items are dirtied by parameter changes, then their cache files are also automatically invalidated. Validate Outputs When Recooking is on by default.

Check Expected Outputs on Disk

When on, PDG looks for any unexpected outputs (for example, like outputs that can result from custom output handling internal logic) that were not explicitly reported when the scheduler’s work items finished cooking. This check occurs immediately after the scheduler marks work items as cooked, and expected outputs that were reported normally are not checked. If PDG finds any files that are different from the expected outputs, then they are automatically added as real output files.

Path Mapping

Global

If the PDG Path Map exists, then it is applied to file paths.

None

Delocalizes paths using the PDG_DIR token.

Path Map Zone

When on, specifies a custom mapping zone to apply to all jobs executed by this scheduler. When off, the zone is the local platform: LINUX, MAC, or WIN.
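To make the default zone concrete, the sketch below derives one of the three platform zone names from Python's `sys.platform`. The mapping from `sys.platform` values to zone names is an assumption made for illustration, and `default_zone` is a hypothetical helper, not part of the PDG API.

```python
import sys


def default_zone(platform: str = sys.platform) -> str:
    """Map a sys.platform string to one of the zone names listed above."""
    if platform.startswith("win"):
        return "WIN"
    if platform == "darwin":
        return "MAC"
    # Assumption: anything else is treated as Linux in this sketch.
    return "LINUX"


print(default_zone())
```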

Job Spec ¶

Job description properties that will be written to a Deadline job file.

Batch Name

(Optional) Specifies the batch name under which to group the job.

Job Name

(Required) Specifies the name of the job.

Comment

(Optional) Specifies the comment to add to all jobs.

Job Department

(Optional) Specifies the default department (for example, Lighting) for all jobs. This allows you to group jobs together and provide information to the farm operator.

Pool

Specifies a named pool to use to execute the job.

By default, Pool is none.

Group

Specifies a named group to use to execute the job.

By default, Group is none.

Priority

Specifies the priority for all new jobs. The minimum value is 0 and the maximum value is a setting from Deadline’s repository options (usually 100).

By default, Priority is 50.

Concurrent Tasks

When Schedule Work Items as Jobs is checked off, this parameter specifies the number of tasks to run simultaneously for each Deadline Worker.

By default, Concurrent Tasks is 1 (one task at a time).

Limit Concurrent Tasks to CPUs

When Schedule Work Items as Jobs is checked off, this parameter limits the number of concurrent tasks to the number of CPUs on the Deadline Worker or the current CPU Affinity settings in Deadline when checked on.

Machine Limit

Specifies the maximum number of Deadline Worker machines that can execute this job.

By default, Machine Limit is 0 (no limit).

Machine List

Specifies a restricted list of Deadline Workers that can execute this job. The kind of list that is written out is determined by the Machine List is a Blacklist parameter below.

If the Machine List is a Blacklist parameter is off, the list is written out as Whitelist in the job info file. If Machine List is a Blacklist is on, it is written out as Blacklist.

Machine List is a Blacklist

When on, the Machine List is written out as Blacklist. This means that the listed machines are not allowed to execute this job. When off, only the machines in the list are allowed to execute this job.
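The effect of the toggle on the job info file can be sketched as follows. The key names Whitelist and Blacklist come from the description above; the comma-separated value format, the helper name, and the machine names are assumptions made for illustration.

```python
def machine_list_entry(machines, is_blacklist):
    """Build the job info line for a machine list.

    Assumes a `Key=value` line with comma-separated machine names.
    """
    key = "Blacklist" if is_blacklist else "Whitelist"
    return f"{key}={','.join(machines)}"


# Hypothetical Worker names.
print(machine_list_entry(["render01", "render02"], is_blacklist=False))
print(machine_list_entry(["render01", "render02"], is_blacklist=True))
```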

Limits

Specifies the Deadline Limits (Resource or License type) required for the scheduled job. The limits are created and managed through the Deadline Monitor in Deadline.

On Job Complete

Determines what happens to the job’s information when it finishes.

By default, On Job Complete is Nothing.

For more information, see Deadline’s documentation.

Job Key-Values

Lets you add custom key-value options for this job. These are written out to the job file required by the Deadline plugin.

Plugin File Key-Values

Lets you add custom key-value options for the plug-in. These are written out to the plugin file required by the Deadline plugin.

Submit as Job ¶

Allows you to schedule a standalone job (a job that does not require Houdini to be running) on your Deadline farm that cooks the current TOP network.

The parameters below determine the behavior of this job.

Submit

Cooks the current TOP network as a standalone job in a hython session on your Deadline farm.

Job Name

Specifies the name to use for the job.

Job Verbosity

Specifies the verbosity level of the standalone job.

Output Node

When on, specifies the path to the node to cook. If a node is not specified, the display node of the network that contains the scheduler is cooked instead.

Save Task Graph File

When on, the submitted job will save a task graph .py file once the cook completes.

Use MQ Job Options

When on, the node uses the job settings specified by the MQ Job Options parameters.

Enable Server

When on, turns on the data layer server for the TOP job that will cook on the farm. This allows PilotPDG or other WebSocket clients to connect to the cooking job remotely to view the state of PDG.

Server Port

Determines which server port to use for the data layer server.

This parameter is only available when Enable Server is on.

Automatic

A free TCP port to use for the data layer server chosen by the node.

Custom

A custom TCP port to use for the data layer server specified by the user.

This is useful when there is a firewall between the farm machine and the monitoring machine.

Auto Connect

When on, the scheduler will try to send a command to create a remote visualizer when the job starts. If successful, then a remote graph is created and is automatically connected to the server executing the job. The client submitting the job must be visible to the server running the job or the connection will fail.

This parameter is only available when Enable Server is on.

Remote Graph Name

The name of the Remote Graph visualizer node created when Auto Connect is enabled.

Message Queue ¶

The Message Queue (MQ) server is required to get work item results from the jobs running on the farm. Several types of MQ are provided to work around networking issues such as firewalls.

Type

The type of Message Queue (MQ) server to use.

Local

Starts or shares the MQ server on your local machine.

If another Deadline scheduler node (in the current Houdini session) already started a MQ server locally, then this scheduler node uses that MQ server automatically.

If there are not any firewalls between your local machine and the farm machines, then we recommend you use this parameter.

Farm

Starts or shares the MQ server on the farm as a separate job.

The MQ Job Options allow you to specify the job settings.

If another Deadline scheduler node (in the current Houdini session) already started a MQ server on the farm, then this scheduler node uses that MQ server automatically.

If there are firewalls between your local machine and the farm machines, then we recommend you use this parameter.

Connect

Connects to an already running MQ server.

The MQ server needs to have been started manually. This is the manual option for managing the MQ and useful for running MQ as a service on a single machine to serve all PDG Deadline jobs.

Address

Specifies the IP address to use when connecting to the MQ server.

This parameter is only available when Type is set to Connect.

Task Callback Port

Sets the TCP Port used by the Message Queue Server for the XMLRPC callback API. This port must be accessible between farm blades.

This parameter is only available when Type is set to Connect.

Relay Port

Sets the TCP Port used by the Message Queue Server connection between PDG and the blade that is running the Message Queue Command. This port must be reachable on farm blades by the PDG/user machine.

This parameter is only available when Type is set to Connect.

The following MQ Job Options parameters are only available when Type is set to Farm.

Batch Name

When on, specifies a custom Deadline batch name for the MQ job. When off, the MQ job uses the job batch name.

Job Name

(Required) Specifies the name for the MQ job.

Comment

(Optional) Specifies a comment to add to the MQ job.

Department

(Optional) Specifies the default department (for example, Lighting) for the MQ job. This allows you to group jobs together and provide information to the farm operator.

Pool

Specifies the pool to use to execute the MQ job.

By default, Pool is none.

Group

Specifies the group to use to execute the MQ job.

By default, Group is none.

Priority

Sets the priority for the MQ job. The minimum priority is 0. The maximum priority comes from a setting in Deadline’s repository options (for example, this is usually 100).

By default, Priority is 50.

Machine Limit

Specifies the maximum number of Deadline Worker machines that can execute this MQ job.

By default, Machine Limit is 0 (no limit).

Machine List

Specifies a restricted list of Deadline Workers that can execute this MQ job. The kind of list that is written out is determined by the Machine List is a Blacklist parameter below.

If the Machine List is a Blacklist parameter is off, the list is written out as Whitelist in the job info file. If Machine List is a Blacklist is on, it is written out as Blacklist.

Machine List is a Blacklist

When on, the Machine List is written out as Blacklist. This means that the listed machines are not allowed to execute this MQ job. When off, only the machines in the list are allowed to execute this MQ job.

Limits

Specifies the Deadline Limits (Resource or License type) required for the scheduled MQ job. The limits are created and managed through the Deadline Monitor in Deadline.

On Job Complete

Determines what happens to the MQ job’s information when it finishes.

By default, On Job Complete is Nothing.

For more information, see Deadline’s documentation.

RPC Server ¶

Parameters for configuring the behavior of RPC connections from out of process jobs back to a scheduler instance.

Ignore RPC Errors

Determines whether RPC errors should cause out of process jobs to fail.

Never

RPC connection errors will cause work items to fail.

When Cooking Batches

RPC connection errors are ignored for batch work items, which typically make a per-frame RPC back to PDG to report output files and communicate sub item status. This option prevents long-running simulations from being killed on the farm, if the submitter Houdini session crashes or becomes unresponsive.

Always

RPC connection errors will never cause a work item to fail. Note that if a work item can’t communicate with the scheduler, it will be unable to report output files, attributes or its cook status back to the PDG graph.
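The three Ignore RPC Errors options reduce to a small decision rule. The sketch below expresses that rule under the reading given above; the mode strings and the function name are hypothetical labels, not values used by PDG.

```python
def rpc_error_fails_item(mode: str, is_batch: bool) -> bool:
    """Return True if an RPC connection error should fail the work item.

    `mode` uses hypothetical labels for the three menu options:
    "never", "batches" (When Cooking Batches), and "always".
    """
    if mode == "never":
        return True  # errors are never ignored
    if mode == "batches":
        return not is_batch  # ignored only for batch work items
    if mode == "always":
        return False  # errors are always ignored
    raise ValueError(f"unknown mode: {mode!r}")


for mode in ("never", "batches", "always"):
    print(mode, rpc_error_fails_item(mode, is_batch=True))
```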

Max RPC Errors

The maximum number of RPC failures that can occur before RPC is disabled in an out of process job.

Connection Timeout

The number of seconds to wait when an out of process job makes an RPC connection to the main PDG graph, before assuming the connection failed.

Connection Retries

The number of times to retry a failed RPC call made by an out of process job.

Retry Backoff

When Connection Retries is greater than 0, this parameter determines how much time should be spent between consecutive retries.
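Connection Retries and Retry Backoff together describe a retry loop. A minimal sketch of such a loop is shown below; it assumes a constant delay between attempts (the page does not say whether the real delay is constant or growing), and `call_with_retries` is a hypothetical helper rather than a PDG function.

```python
import time


def call_with_retries(func, retries, backoff, sleep=time.sleep):
    """Call `func`, retrying up to `retries` times on ConnectionError.

    `backoff` is the pause in seconds between consecutive attempts.
    `sleep` is injectable so the loop can be exercised without waiting.
    """
    attempt = 0
    while True:
        try:
            return func()
        except ConnectionError:
            if attempt >= retries:
                raise  # out of retries: let the error propagate
            attempt += 1
            sleep(backoff)


# Example: a stand-in RPC call that fails twice before succeeding.
state = {"calls": 0}


def flaky_rpc():
    state["calls"] += 1
    if state["calls"] < 3:
        raise ConnectionError("scheduler unreachable")
    return "ok"


print(call_with_retries(flaky_rpc, retries=3, backoff=0.0))
```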

Batch Poll Rate

Determines how quickly an out of process batch work item should poll the main Houdini session for dependency status updates, if the batch is configured to cook when its first frame of work is ready. This has no impact on other types of batch work items.

Release Job Slot When Polling

Determines whether or not the scheduler should decrement the number of active workers when a batch is polling for dependency updates.

Job Parms ¶

Job-specific parameters that affect all submitted jobs, but can be overridden on a node-by-node basis. For more information, see Scheduler Job Parms / Properties.

HFS

Specifies the rooted path to the Houdini installation on all Deadline Worker machines.

If you are using variables, they are evaluated locally unless escaped with \. For example, $HFS would be evaluated on the local machine, and then the result value would be sent to the farm.

To force evaluation on the Worker instead (for example, on a mixed farm set-up), use \$HFS and then set a mapping such as the following in Deadline’s Path Mapping:

$HFS = C:/Program Files/Side Effects Software/Houdini 17.5.173.
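The difference between $HFS and \$HFS can be illustrated with a small stand-in for local variable expansion. This sketch only mimics the escaping behavior described above; it is not how Houdini evaluates parameters, and the function name and example install path are hypothetical.

```python
import re

# An optional backslash, then a $NAME variable reference.
_VAR = re.compile(r"(\\?)\$([A-Za-z_][A-Za-z0-9_]*)")


def evaluate_locally(text: str, env: dict) -> str:
    """Expand unescaped $VARs from `env`; keep escaped ones for the farm."""

    def replace(match):
        escaped, name = match.group(1), match.group(2)
        if escaped:
            # \$NAME: drop the backslash and leave $NAME for the Worker.
            return "$" + name
        # $NAME: substitute the local value, or leave it if unknown.
        return env.get(name, match.group(0))

    return _VAR.sub(replace, text)


local_env = {"HFS": "/opt/hfs"}  # hypothetical local install path
print(evaluate_locally("$HFS/bin/hython", local_env))
print(evaluate_locally(r"\$HFS/bin/hython", local_env))
```

In the second call the variable survives unexpanded, so it is left for Deadline's path mapping to resolve on the Worker.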

Python

Specifies the rooted path to Python that should point to the required Python version installed on all Worker machines (for example, $HFS/bin/python).

If you are using variables, then you should map them in Deadline’s Path Mapping. For example, if you are using default values, then you would path map $HFS. On Windows, you would also add .exe or \$PDG_EXE (for a mixed farm set-up) to the path mapping, so that the mapping looks something like: $HFS/bin/python\$PDG_EXE → C:/Program Files/Side Effects Software/Houdini 17.5.173/bin/python.exe.

Hython

Determines which Houdini Python interpreter (hython) is used for your Houdini jobs. You can also specify this hython in a command using the PDG_HYTHON token.

Default

Use the default hython interpreter that is installed with Houdini.

Custom

Use the executable path specified by the Hython Executable parameter.

Hython Executable

This parameter is only available when Hython is set to Custom.

The full path to the hython executable to use for your Houdini jobs.

Pre Batch Script

When Schedule Work Items as Jobs is checked on, this parameter specifies the path to the Python script to run when the job batch starts. Note that the machine cooking the PDG graph executes the script, which is not necessarily a worker node on the Deadline farm. This differs from the Pre Job Script parameter, which guarantees that a Deadline worker node executes the script.

Post Batch Script

When Schedule Work Items as Jobs is checked on, this parameter specifies the path to the Python script to run after the job batch finishes. Note that the machine cooking the PDG graph executes the script, which is not necessarily a worker node on the Deadline farm. This differs from the Post Job Script parameter, which guarantees that a Deadline worker node executes the script.

Pre Job Script

When Schedule Work Items as Jobs is checked off, this parameter specifies the path to the Python script to run when the job starts. This parameter sets the Deadline PreJobScript job property.

Post Job Script

When Schedule Work Items as Jobs is checked off, this parameter specifies the path to the Python script to run after the job finishes. This parameter sets the Deadline PostJobScript job property.

Pre Task Script

Specifies the Python script to run before executing the task. This parameter sets the Deadline PreTaskScript task property.

Post Task Script

Specifies the Python script to run after executing the task. This parameter sets the Deadline PostTaskScript task property.

Inherit Local Environment

When on, the environment variables in the current session of PDG are copied into the task’s environment.

Houdini Max Threads

When on, sets the HOUDINI_MAXTHREADS environment variable to the specified value. By default, Houdini Max Threads is set to 0 (all available processors).

Positive values limit the number of threads that can be used, and those values are clamped to the number of available CPU cores. A value of 1 disables multi-threading entirely, as it limits the scheduler to only one thread.

For negative values, the value is added to the maximum number of processors to determine the threading limit. For example, a value of -1 would use all CPU cores except 1.
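The three cases for Houdini Max Threads (zero, positive, negative) can be worked through with a short function. This is a sketch of the arithmetic described above, not Houdini's implementation; the floor of one thread for large negative values is an assumption, and `effective_threads` is a hypothetical name.

```python
def effective_threads(value: int, cores: int) -> int:
    """Return the thread limit implied by a Houdini Max Threads value."""
    if value == 0:
        return cores  # 0 means all available processors
    if value > 0:
        return min(value, cores)  # clamped to the available cores
    # Negative: added to the core count. Assumption: never below 1.
    return max(1, cores + value)


for value in (0, 4, 16, -1):
    print(value, "->", effective_threads(value, cores=8))
```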

Unset Variables

Specifies a space-separated list of environment variables that should be unset in or removed from the scheduler’s task environment.

Environment File

Specifies an environment file for environment variables to be added to the job’s environment. An environment variable from the file will overwrite an existing environment variable if they share identical names.

Environment Variables

Allows you to add custom environment variables to each task. Additional work item environment variables can be specified here. These will be added to the job’s environment. If the value of the variable is empty, it will be removed from the job’s environment.

Name

Name of the work item environment variable.

Value

Value of the work item environment variable.
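The environment rules above (file variables overwrite existing ones, and an empty value removes a variable) can be sketched as a dictionary merge. The order in which the file and the per-variable entries are applied is an assumption here, and `build_job_env` and the variable names are hypothetical.

```python
def build_job_env(base, file_vars, extra_vars):
    """Combine a base environment with file and parameter variables.

    - `file_vars` overwrite same-named entries in `base`.
    - `extra_vars` are applied last; an empty value removes the variable.
    """
    env = dict(base)
    env.update(file_vars)
    for name, value in extra_vars.items():
        if value == "":
            env.pop(name, None)
        else:
            env[name] = value
    return env


env = build_job_env(
    base={"JOB": "shot010", "TMP": "/tmp"},
    file_vars={"TMP": "/scratch"},
    extra_vars={"JOB": "", "RENDERER": "karma"},
)
print(env)
```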

OpenCL Force GPU Rendering

For OpenCL nodes only. Sets the GPU affinity based on the current Worker’s GPU setting and user specified GPUs.

GPUs Per Task

For Redshift and OpenCL nodes. Specifies the number of GPUs to use per task. This value must be a subset of the Worker’s GPU affinity settings in Deadline.

Select GPU Devices

For Redshift and OpenCL nodes. Specifies a comma-separated list of GPU IDs to use. The GPU IDs specified here must be a subset of the Worker’s GPU affinity settings in Deadline.
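The subset requirement for Select GPU Devices can be checked with a few lines of Python. This is an illustrative validation only, assuming GPU IDs are integers; `select_gpus` is a hypothetical helper and not part of Deadline or PDG.

```python
def select_gpus(requested: str, worker_affinity):
    """Parse a comma-separated GPU ID list and check it against the Worker.

    Raises ValueError if any requested ID is outside the Worker's
    GPU affinity settings.
    """
    ids = [int(part) for part in requested.split(",") if part.strip()]
    missing = [gpu for gpu in ids if gpu not in worker_affinity]
    if missing:
        raise ValueError(f"GPUs not in Worker affinity: {missing}")
    return ids


print(select_gpus("0, 2", worker_affinity=[0, 1, 2, 3]))
```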
