
| Since | 21.0 |

Overview ¶

This is a generic ML training node for creating models that generate output images from input images. For example, you can train a model to convert terrain topology sketches into height fields, or create a model that colorizes images, given existing samples of black/white images and their colored counterparts.

The node can train two different kinds of models – a paired model where there’s a pre-defined relationship between the input and output images, and an unpaired model where the inputs and outputs are unrelated. In both cases the node trains a Generative Adversarial Network (GAN) that attempts to learn the relationship between the images.

A GAN consists of two neural networks: the generator and the discriminator. The generator learns how to produce output images from input images. The discriminator learns to distinguish a real output from a fake one created by the generator. As the model trains, the generator is optimized to do a better job of tricking the discriminator into accepting a generated output image as real. Conversely, the discriminator is optimized for accurately determining that a generated output is fake. Over many training iterations, both the generator and discriminator improve at their respective tasks. After the training process is finished, the final version of the generator is the trained model.

The generator network created by this node is a convolutional neural network called a U-Net. The parameters of the U-Net, such as the number of layers and the convolution kernel size, can be configured using parameters on the node. The discriminator network is a single chain of convolution layers, which can also be configured using node parameters.

The node can be configured to write out an ONNX model file during the training process, which can be used with either the ONNX Inference SOP or the ONNX Inference COP to apply the model to data in Houdini.

During the training process, it can be useful to periodically test the model to assess if the training process is working properly. This can be done by providing a second, smaller, test data set that consists of images that aren’t included in the original training data set. When testing the model, the node records the error between the correct test output and the generated output produced by running the model on the test inputs.

Paired Training ¶

Paired training involves providing a set of sample input images and their corresponding outputs. The image samples provide the training process with known, correct images as the basis for the training process. The training samples can be specified as two separate directories of images or as a directory of composite images with the input and output side-by-side in the same image. When specifying the input/output training images as two separate directories, the node expects the names and order of the files in both directories to be the same.

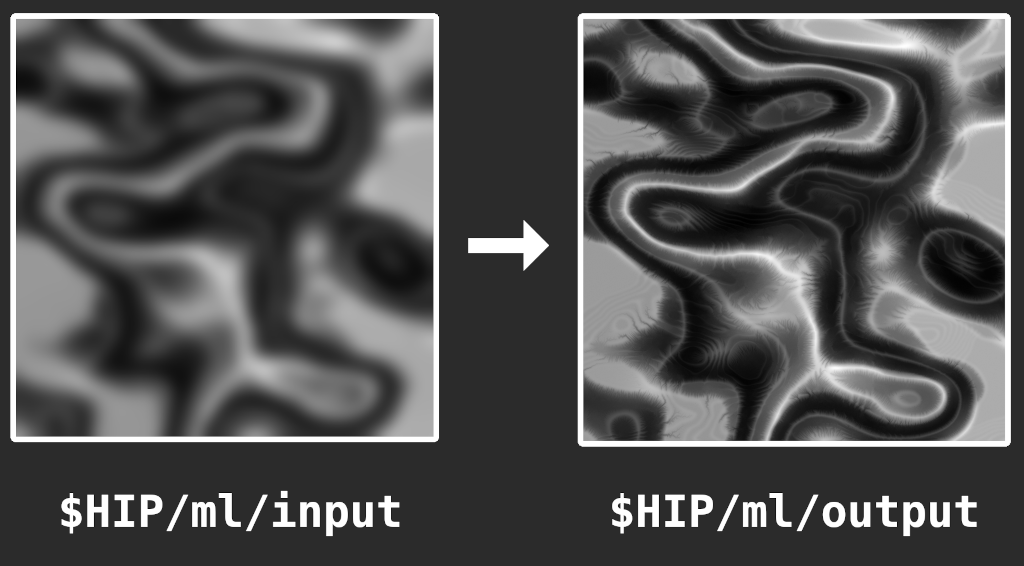

An example of a pair of training sample images, used to train a model that converts terrain outlines into height fields.


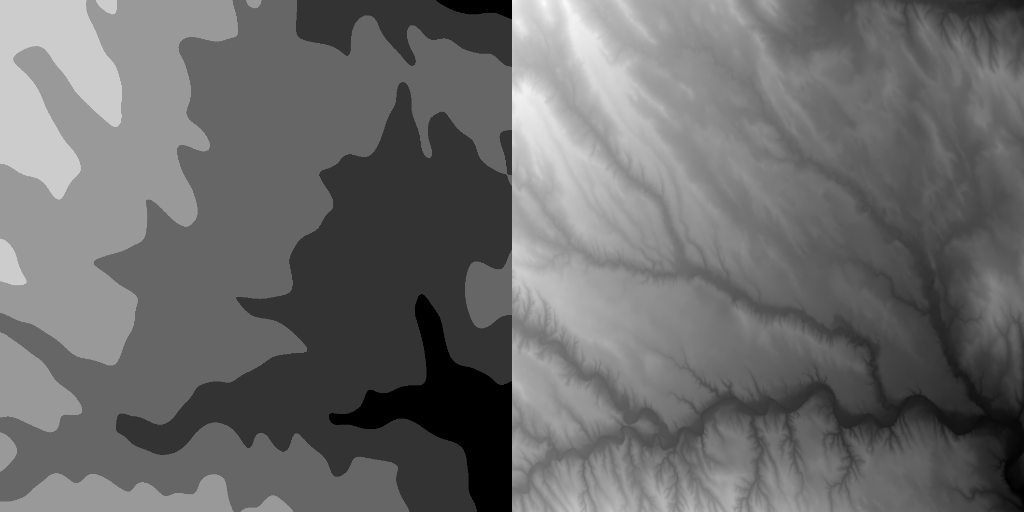

An alternative example of a composite image training sample for training the terrain generation model, where the entire training sample is saved as a single image. The left half of the image is the input, the right half is the output.
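The composite layout can be illustrated with a minimal sketch that splits one image into its two halves. The `split_composite` helper is hypothetical and works on plain nested lists rather than real image data; the node's own loading code is not documented here.

```python
def split_composite(pixels):
    """Split a composite image into (input, reference) halves.

    `pixels` is a list of rows, each row a list of pixel values.
    The left half of every row is the input and the right half is
    the reference. Hypothetical helper for illustration only.
    """
    width = len(pixels[0])
    if width % 2 != 0:
        raise ValueError("composite image width must be even")
    half = width // 2
    input_image = [row[:half] for row in pixels]
    reference_image = [row[half:] for row in pixels]
    return input_image, reference_image


# A tiny 4x2 composite: the left 2x2 block is the input, the right 2x2 the reference.
composite = [
    [1, 2, 9, 8],
    [3, 4, 7, 6],
]
input_image, reference_image = split_composite(composite)
```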

Unpaired Training ¶

Unpaired training follows the same general principles as paired training. However, it doesn’t require an existing known relationship between input and output images. Instead of training a single generator, the node trains a network that maps input → output and a second inverse network that maps output → input. Unpaired training has fewer constraints and relies on the model’s ability to find transferable structure between the two data sets, which means the quality and consistency of the trained output is typically lower than with paired training.

Unpaired training can only be used with input images that are specified in two separate directories.

Path Variables ¶

The node supports writing various files to disk during the training process, such as model checkpoints or ONNX files for inference in SOPs/COPs. The file paths are specified using parameters, which can include variables that are filled in just-in-time when the file path is used:

network

The name of the network component, such as generator or discriminator.

index

The network component number. For paired training the model has only one generator and discriminator component, so the index will always be 0. For unpaired training there are two distinct generator and discriminator components, so the index will be either 0 or 1.

iteration

The training iteration number as an integer.

For example, you can set the ONNX Path parameter to $HIP/ml/models/model.{iteration}.onnx to create an ONNX file with the iteration number in its file path name.
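As a rough illustration of how these variables expand, the sketch below uses Python's `str.format` as a stand-in for the node's own substitution. The `expand_path` helper, the `{network}` and `{index}` brace syntax (inferred from the `{iteration}` example), and the `.pt` extension are assumptions; `$HIP` is left untouched because Houdini expands it separately.

```python
def expand_path(template, network, index, iteration):
    """Fill the {network}, {index} and {iteration} variables in a path template.

    Hypothetical helper for illustration only; the node performs its own
    substitution internally.
    """
    return template.format(network=network, index=index, iteration=iteration)


# The ONNX example from the text, evaluated at iteration 25.
onnx_path = expand_path("$HIP/ml/models/model.{iteration}.onnx", "generator", 0, 25)

# An assumed checkpoint template that uses all three variables.
checkpoint_path = expand_path(
    "$HIP/ml/models/{network}_{index}.{iteration}.pt", "discriminator", 1, 25
)
```

Including `{network}` and `{index}` in a checkpoint template keeps the files from different network components from overwriting each other.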

Parameters ¶

Data Set ¶

These parameters define the data set used to train the model. The data set should consist of one or more pairs of images that define sample inputs and outputs from the model. For the Unpaired Images input type, the images are effectively inputs without any sample outputs.

Input Type

Determines how input training images should be specified.

Single Paired Image

Trains the model from a single input/reference training sample.

Multiple Paired Images

Trains the model from multiple input/reference training samples, specified in two directories.

Multiple Unpaired Images

Trains the model using uncorrelated images without a clearly defined relationship. This is usually slower to train and produces less stable results. It also uses a different neural network architecture than paired training. The input images are specified in two directories.

Composite Paired Images

Trains the model from multiple input/reference training samples expressed as composite image pairs in a single directory.

Input Image

When Input Type is set to Single Paired Image, this file path determines the path to the sample input image used for training.

Reference Image

When Input Type is set to Single Paired Image, this file path determines the path to the sample reference or output image used for training.

Input Image Dir

When Input Type is set to Multiple Paired Images or Multiple Unpaired Images, this parameter determines the path to the directory of sample input images used for training.

Reference Image Dir

When Input Type is set to Multiple Paired Images or Multiple Unpaired Images, this parameter determines the path to the directory of sample reference or output images used for training.

Composite Image Dir

When Input Type is set to Composite Paired Images, this parameter determines the path to the directory of composite images that define an input/reference training pair.

Channel Count

The number of color channels in the input and reference images. This also defines the number of channels in results produced by the model. If any input or reference images have too few channels, their last channel is copied to fill the missing channels. Likewise, any channels beyond the channel count are discarded.
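The channel fitting rule can be sketched for a single pixel as follows. The `fit_channels` helper is hypothetical and operates on a plain list of channel values; it only mirrors the behavior described above and is not the node's actual code.

```python
def fit_channels(pixel, channel_count):
    """Pad or truncate one pixel's channel values to `channel_count` entries.

    Missing channels are filled with a copy of the last available channel,
    and channels beyond `channel_count` are discarded.
    """
    if not pixel:
        raise ValueError("pixel must have at least one channel")
    if len(pixel) >= channel_count:
        return list(pixel[:channel_count])
    missing = channel_count - len(pixel)
    return list(pixel) + [pixel[-1]] * missing


# A single-channel pixel expanded to three channels.
gray_to_rgb = fit_channels([0.5], 3)

# A four-channel pixel reduced to three channels (the fourth is dropped).
rgba_to_rgb = fit_channels([0.1, 0.2, 0.3, 1.0], 3)
```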

Image Size

The width and height of the input and reference images. This is also the size of the images produced by the model. Currently the model can only be trained on square images.

Input Data Set Storage

Specifies how the input data set should be stored in memory.

Automatic

PyTorch will manage the storage and transfer of the data set between the CPU and the device used for training.

Keep on Training Device

Makes sure that the training data set is kept on the device selected for training.

Worker Threads

Specifies the number of background worker threads to use when loading input images. Using more threads can improve performance for large data sets. A value of 0 indicates the images should be loaded on the main training thread instead of in the background.

Input Batch Size

The number of training samples to load for each training batch. The model weights are updated after every batch, so training with a larger batch size can speed up training, but also requires more memory.
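To make the trade-off concrete, the sketch below counts how many weight updates happen in one pass over the data set for a given batch size. It assumes one training iteration is a single pass over all samples and that a final partial batch is still used; neither detail is confirmed by this page, and `updates_per_iteration` is a hypothetical helper.

```python
import math


def updates_per_iteration(sample_count, batch_size):
    """Number of batches, and therefore weight updates, in one pass.

    Assumes the last, possibly smaller, batch is kept rather than dropped.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return math.ceil(sample_count / batch_size)


# 1,000 samples with a batch size of 1 versus a batch size of 16.
updates_small_batch = updates_per_iteration(1000, 1)
updates_large_batch = updates_per_iteration(1000, 16)
```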

These parameters can further randomize the input images, adding more variations that may help produce a higher quality model. For paired images, the same transformations are applied to both the input and reference images in a training sample.

Limit Input Count

When on, artificially limits the number of training samples the model uses. For example, if the input image directory has 1,000 images and this parameter is set to 500, the training process will only use the first 500 images.

Crop Inputs

When on, training samples are randomly cropped and resized back to the Image Size before being used in the training process. The value determines how much cropping should occur. For example, a value of 0.5 means that samples will be cropped to a random size between their original dimensions and half their original dimensions.
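A minimal sketch of choosing a crop size is shown below. It assumes the parameter value is the smallest fraction of the original size that a crop may have, which matches the 0.5 example but is otherwise a guess; `random_crop_size` is a hypothetical helper and the actual cropping is done by the node.

```python
import random


def random_crop_size(image_size, min_fraction, rng=random):
    """Pick a square crop size between `min_fraction` of the image and the full image.

    Assumption: `min_fraction` is the smallest allowed crop relative to the
    original size, so 0.5 allows crops down to half the original dimensions.
    """
    min_size = int(round(image_size * min_fraction))
    # randint is inclusive on both ends, so the uncropped size is possible too.
    return rng.randint(min_size, image_size)


# Use a seeded generator so repeated runs pick the same crop sizes.
rng = random.Random(7)
crop_sizes = [random_crop_size(256, 0.5, rng) for _ in range(5)]
```

After cropping to the chosen size, the sample would be resized back to the Image Size, as described above.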

Horizontal Flip

When on, training samples are randomly flipped on their horizontal axis. The value is the probability that a given training sample is flipped.

Vertical Flip

When on, training samples are randomly flipped on their vertical axis. The value is the probability that a given training sample is flipped.

Rotation

When on, training samples are randomly rotated in both the clockwise and counterclockwise directions. Images are re-scaled so they fill the target Image Size after being rotated. This parameter specifies the probability of a random rotation occurring.

Shuffle Input Order

When on, training samples are randomly shuffled each training iteration.

Training ¶

These parameters control the basic behavior of model training, such as the rate the model learns and the total number of iterations spent training.

Maximum Iterations

The maximum number of training iterations or epochs.

Learning Rate

The rate at which the model updates itself during the training process. A higher value can cause the model to train faster, but may also result in instability that causes the model to fail to converge.

Rate Scheduler

Determines the scheduler type, which varies the learning rate over the training process.

Cosine Annealing

Uses a cosine annealing schedule, as described in the PyTorch documentation.

Linear

Maintains a constant learning rate as defined in the Learning Rate parameter, and then linearly decays it towards a value of 0 near the end of the training process. The Linear Decay parameter determines the number of training iterations to reduce the learning rate value to 0.

Step

Maintains a constant learning rate which is multiplied by a factor of Gamma after every Step Size training iterations.

Linear Decay

When Rate Scheduler is set to Linear, determines how many iterations should be spent decaying the learning rate toward 0. For example, if set to 50 then the learning rate will decay towards zero for the last 50 training iterations.

Step Size

When Rate Scheduler is set to Step, determines the number of training iterations between updates to the learning rate.

Gamma

When Rate Scheduler is set to Step, determines the scale factor applied to the learning rate each time a scheduler step is reached.
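The Linear and Step schedules described above can be sketched as plain functions of the iteration number. This is an illustration under assumptions: iterations are counted from 0, the linear ramp reaches exactly 0 at the final iteration, and the helper names are hypothetical. The node relies on PyTorch for scheduling, and its exact behavior may differ.

```python
def linear_rate(base_rate, iteration, max_iterations, decay_iterations):
    """Constant rate, then a linear ramp toward 0 over the final iterations."""
    if decay_iterations <= 0:
        return base_rate
    decay_start = max_iterations - decay_iterations
    if iteration < decay_start:
        return base_rate
    remaining = max(max_iterations - iteration, 0)
    return base_rate * remaining / decay_iterations


def step_rate(base_rate, iteration, step_size, gamma):
    """Multiply the rate by `gamma` once every `step_size` iterations."""
    return base_rate * gamma ** (iteration // step_size)


# Linear: 200 iterations, with the last 50 spent decaying.
linear_early = linear_rate(0.0002, 100, 200, 50)   # still the base rate
linear_late = linear_rate(0.0002, 175, 200, 50)    # halfway through the decay

# Step: the rate is halved every 10 iterations.
step_late = step_rate(0.1, 25, 10, 0.5)            # two steps have been applied
```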

Initializer Type

Determines which type of weight initializer to use when setting initial model weights.

Normal

Initializes the model weights with normally distributed random values.

Orthogonal

Initializes the model weights to an orthogonal matrix.

Gain

The scale factor applied to randomly initialized model weights.

These parameters determine which optimizer the model should use to update itself each training iteration.

Algorithm

Determines which optimization algorithm to use during the training process.

Adam

Uses the Adam algorithm. See the PyTorch documentation for more details.

Adadelta

Uses the ADADELTA algorithm. See the PyTorch documentation for more details.

SGD

Uses the Stochastic Gradient Descent algorithm. See the PyTorch documentation for more details.

ASGD

Uses the Averaged Stochastic Gradient Descent algorithm. See the PyTorch documentation for more details.

Beta

When Algorithm is set to Adam, determines the beta values used by the optimization algorithm. Higher beta values can result in faster model convergence, but may also decrease stability.

Rho

When Algorithm is set to Adadelta, determines the rho value used by the optimization algorithm. A higher value results in a faster rate of training but may also decrease stability.

Momentum

When Algorithm is set to SGD, determines the momentum value. This value is optional, but helps the training process converge faster by allowing past gradient calculations to influence the optimization process. The momentum value determines how heavily past results influence the optimization process.

These parameters define the topology of the Generator network in the underlying GAN model used by this node. The Generator network is a U-Net, which down-samples and then up-samples input images, with skip connections at each layer.

Layers

The number of down-sample and up-sample layers in the generator.

This parameter is directly influenced by the Image Size parameter. For example, if the image size is set to 256 and this parameter is set to 8, the generator will have 8 layers with the sizes: 64, 128, 256, 256, 256, 256, 256, 256.
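One way to reproduce the sizes in this example is to double the layer size at each step and cap it at a maximum. The sketch below assumes an initial layer size of 64 and a cap equal to the image size, both inferred from the example rather than stated by this page; `generator_layer_sizes` is a hypothetical helper.

```python
def generator_layer_sizes(layers, initial_size, max_size):
    """Double the layer size at each layer until it reaches `max_size`.

    Assumption: the cap equals the Image Size, which matches the
    example in the text but is not confirmed.
    """
    return [min(initial_size * 2 ** i, max_size) for i in range(layers)]


# Image size 256, 8 layers, assumed initial layer size of 64.
sizes = generator_layer_sizes(8, 64, 256)
```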

Initial Layer Size

The size of the first generator layer. The size of subsequent layers is determined based on this value, as described in the Layers parameter.

Kernel Size

The size of the convolution kernels used in the generator.

L1 Lambda

The scale factor applied to the error calculation when training the generator network.

For paired images, the error value is a measure of the difference between a reference image and an image generated by the model using the same training input.

For unpaired images, the error value is a measure of the difference between a reference image and an image generated by applying the output of one generator back into the second generator.
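For the paired case, a scaled error term of this kind can be sketched as a mean absolute difference multiplied by the lambda value. The use of mean absolute error is an assumption based on the parameter's name, and `weighted_l1` is a hypothetical helper that works on flat lists of pixel values instead of tensors.

```python
def weighted_l1(generated, reference, l1_lambda):
    """Mean absolute difference between two flat pixel lists, scaled by lambda."""
    if len(generated) != len(reference) or not generated:
        raise ValueError("images must be non-empty and the same size")
    total = sum(abs(g - r) for g, r in zip(generated, reference))
    return l1_lambda * total / len(generated)


# Two pixels that are each off by 0.5, scaled by an assumed lambda of 100.
error = weighted_l1([0.0, 1.0], [0.5, 0.5], 100.0)
```

A larger lambda makes this pixel-wise error count for more relative to the discriminator's feedback when the generator is updated.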

Down Activation

The activation function to use for down-sampling layers.

Up Activation

The activation function to use for up-sampling layers.

These parameters define the topology of the Discriminator network in the underlying GAN model.

Layers

The number of layers in the discriminator.

Initial Layer Size

The size of the first discriminator layer. The size of subsequent layers is determined based on this value, as described in the Layers parameter.

Kernel Size

The size of the convolution kernels used in the discriminator.

Activation

The activation function to use for discriminator layers.

Test Model

When on, the trained model is tested periodically against a separate data set.

Test Target SSIM

When Test Model is on, this parameter can stop training early when a target SSIM score is reached. An average SSIM score for the test results is computed by comparing them to the expected outputs. Once the average crosses the value specified in this parameter, the training stops.
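The early-stop check can be sketched as a comparison between the mean test score and the target. The per-image SSIM scores are taken as given here, since computing SSIM itself is outside the scope of this sketch, and `reached_target` is a hypothetical helper. Whether the node treats a score exactly equal to the target as reached is an assumption.

```python
def reached_target(ssim_scores, target):
    """Return True when the average SSIM score meets or exceeds the target."""
    if not ssim_scores:
        return False
    average = sum(ssim_scores) / len(ssim_scores)
    return average >= target


# The average of 0.9 and 0.7 is 0.8, which is above a target of 0.75.
stop_now = reached_target([0.9, 0.7], 0.75)

# The average of 0.5 and 0.6 is 0.55, so training would continue.
keep_training = not reached_target([0.5, 0.6], 0.75)
```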

These parameters determine the data set used to test the model. This data set should consist of images that do NOT already appear in the training data set.

Test Output Directory

Determines the directory where test results are written. Test outputs will include both the original input images and the images generated by the model.

Test Input Type

Determines how test data set images should be specified.

Single Paired Image

Tests the model from a single input/reference sample.

Multiple Paired Images

Tests the model from multiple input/reference samples, specified in two directories.

Multiple Unpaired Images

Tests the model using uncorrelated images without a clearly defined relationship. The test images are specified in two directories.

Composite Paired Images

Tests the model from multiple input/reference samples expressed as composite image pairs in a single directory.

Input Image

When Test Input Type is set to Single Paired Image, determines the path to the sample input image used for testing.

Reference Image

When Test Input Type is set to Single Paired Image, determines the path to the sample reference or output image used for testing.

Input Image Dir

When Test Input Type is set to Multiple Paired Images or Multiple Unpaired Images, determines the path to the directory of sample images used for testing.

Reference Image Dir

When Test Input Type is set to Multiple Paired Images or Multiple Unpaired Images, determines the path to the directory of sample reference or output images used for testing.

Composite Image Dir

When Test Input Type is set to Composite Paired Images, determines the path to the directory of composite images that define an input/reference testing pair.

Test Data Set Storage

Specifies how the input data set should be stored in memory.

Automatic

Keep on Training Device

Worker Threads

Specifies the number of background worker threads to use when loading input images. Using more threads can improve performance for large data sets. A value of 0 indicates the images should be loaded on the main training thread instead of in the background.

Test Batch Size

The number of test samples to load for each test iteration.

These parameters determine the frequency and size of the model testing step.

Test Frequency

The rate the model is tested, expressed as the number of iterations between test runs.

For example, a value of 1 means the model is tested after each training iteration. A value of 3 will run model testing every 3 iterations.

Test Size

The number of test data set images to use when testing the model. These images are randomly chosen from the test data set specified above.

Add Test Results as Outputs

When on, images written out during the testing process are added as work item outputs.

Checkpointing ¶

Model Path

When on, the raw PyTorch models for each network component are written to disk using the specified path format string. The format string can use the variables described in the overview section.

Model Save Rate

When Model Path is on, determines the rate the PyTorch model files are written to disk. The rate is expressed as the number of training iterations between writing model checkpoints. Checkpoints are always written on the final training iteration, even when not a multiple of the parameter value.
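The save rule above, including the guaranteed write on the final iteration, can be sketched as follows. Iterations are assumed to be numbered from 1, and `should_save` is a hypothetical helper rather than part of the node.

```python
def should_save(iteration, save_rate, max_iterations):
    """Decide whether a checkpoint is written on a 1-based iteration number.

    A checkpoint is written every `save_rate` iterations, and always on
    the final iteration even when it is not a multiple of `save_rate`.
    """
    is_final = iteration == max_iterations
    return is_final or iteration % save_rate == 0


# With 10 iterations and a save rate of 4, iteration 10 is saved as the final one.
saved_iterations = [i for i in range(1, 11) if should_save(i, 4, 10)]
```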

Add Model Checkpoints as Outputs

When on, model checkpoints written out during the training process are added as work item outputs.

ONNX Path

When on, the generator component(s) of the model are converted to ONNX and written to disk using the specified format string. The format string can use the variables described in the overview section.

ONNX Save Rate

When ONNX Path is on, determines the rate the ONNX model files are written to disk. The rate is expressed as the number of training iterations between writing model checkpoints. Checkpoints are always written on the final training iteration even when not a multiple of the parameter value.

ONNX Version

Specifies the opset version when writing ONNX files.

Export Dynamic Axes

When on, the input image dimensions are exported as dynamic axes instead of fixed to the size of the training images. This makes it possible to use the exported ONNX model with inputs that aren’t the same size as the training set. However, the quality of the model outputs may be lower.

Add ONNX Checkpoints as Outputs

When on, ONNX model checkpoints written during the training process are added as work item outputs.

Plot Type

The plot file format to create, if any.

Disabled

Plotting is disabled.

CSV

Plots are written out as raw CSV files.

Image

Plots are written out as images using the matplotlib library.

Plot Rate

The rate the training plots are written, expressed as a number of iterations.

SSIM Plot

A plot of the SSIM metric recorded during training and testing. This is a rough estimate of the quality of the generated outputs produced by the model in both the training and testing process based on the reference images in the corresponding data sets. If model testing is off, the plot will only include SSIM scores for the training data set.

Loss Plot

A plot of the loss/error values produced when training the model. Generally speaking, if the model is improving over the training process the loss values in the plot should trend towards 0.

Score Plot

A plot of the discriminator score(s) produced while training the model. These values represent how well the discriminator component of the network is able to distinguish between generated and real images.

Override Device

When on, override the device that PyTorch uses to train the model.

Set CPU Threads

When the device type is set to “cpu”, this parameter can be used to set the maximum number of CPU threads used during training. It has no effect on the training process when using a GPU device.

Environment Path

The path to the virtual environment on disk.

Python Bin

Determines which Python executable to use when creating the virtual environment and installing packages. This also determines the version of Python that will be associated with the venv.

Custom Python Bin

When Python Bin is set to Custom, determines the path to the Python interpreter to use when creating the virtual environment.

Use Symlinks

When on, the virtual environment is created with symlinks to the source Python binaries if possible. This is recommended when creating a virtual environment using Houdini’s Python interpreter.

Use Pip Cache

When enabled, pip will attempt to use cached packages on the local system instead of downloading them every time. This can improve the installation times when repeatedly installing the same Python package in different virtual environments.

TOP Scheduler Override

This parameter overrides the TOP scheduler for this node.

Schedule When

When enabled, this parameter can be used to specify an expression that determines which work items from the node should be scheduled. If the expression returns zero for a given work item, that work item will immediately be marked as cooked instead of being queued with a scheduler. If the expression returns a non-zero value, the work item is scheduled normally.

Work Item Label

Determines how the node should label its work items. This parameter allows you to assign non-unique label strings to your work items which are then used to identify the work items in the attribute panel, task bar, and scheduler job names.

Use Default Label

The work items in this node will use the default label from the TOP network, or have no label if the default is unset.

Inherit From Upstream Item

The work items inherit their labels from their parent work items.

Custom Expression

The work item label is set to the Label Expression custom expression which is evaluated for each item.

Node Defines Label

The work item label is defined in the node’s internal logic.

Label Expression

When on, this parameter specifies a custom label for work items created by this node. The parameter can be an expression that includes references to work item attributes or built-in properties. For example, $OS: @pdg_frame will set the label of each work item based on its frame value.

Work Item Priority

This parameter determines how the current scheduler prioritizes the work items in this node.

Inherit From Upstream Item

The work items inherit their priority from their parent items. If a work item has no parent, its priority is set to 0.

Custom Expression

The work item priority is set to the value of Priority Expression.

Node Defines Priority

The work item priority is set based on the node’s own internal priority calculations.

This option is only available on the Python Processor TOP, ROP Fetch TOP, and ROP Output TOP nodes. These nodes define their own prioritization schemes that are implemented in their node logic.

Priority Expression

This parameter specifies an expression for work item priority. The expression is evaluated for each work item in the node.

This parameter is only available when Work Item Priority is set to Custom Expression.
